The holiday season often transforms casual family gatherings into impromptu AI policy debates. From whispers of "chatbot psychosis" and concerns about electricity prices driven up by data centers, to urgent questions about children’s unfettered access to AI, the technology’s pervasive presence has sparked widespread alarm. As these conversations invariably pivot to the future, the question arises: if AI is already creating such significant ripples, what lies ahead as it continues to advance? Many look to me for a forecast, a glimpse of either a utopian future or a dystopian descent. I often disappoint them, not out of unwillingness, but because predicting AI’s trajectory is becoming increasingly difficult.

Despite this inherent difficulty, MIT Technology Review has consistently demonstrated an impressive ability to navigate and articulate the evolving landscape of artificial intelligence. Our recent publication, "What’s Next for AI in 2026," offers a sharp analysis of the coming year’s trends, building upon a track record where our predictions for the previous year largely materialized. Yet, each holiday season underscores the escalating complexity of forecasting AI’s impact. This challenge stems from three fundamental, as yet unresolved, questions that cast a long shadow over any attempt at definitive prediction.

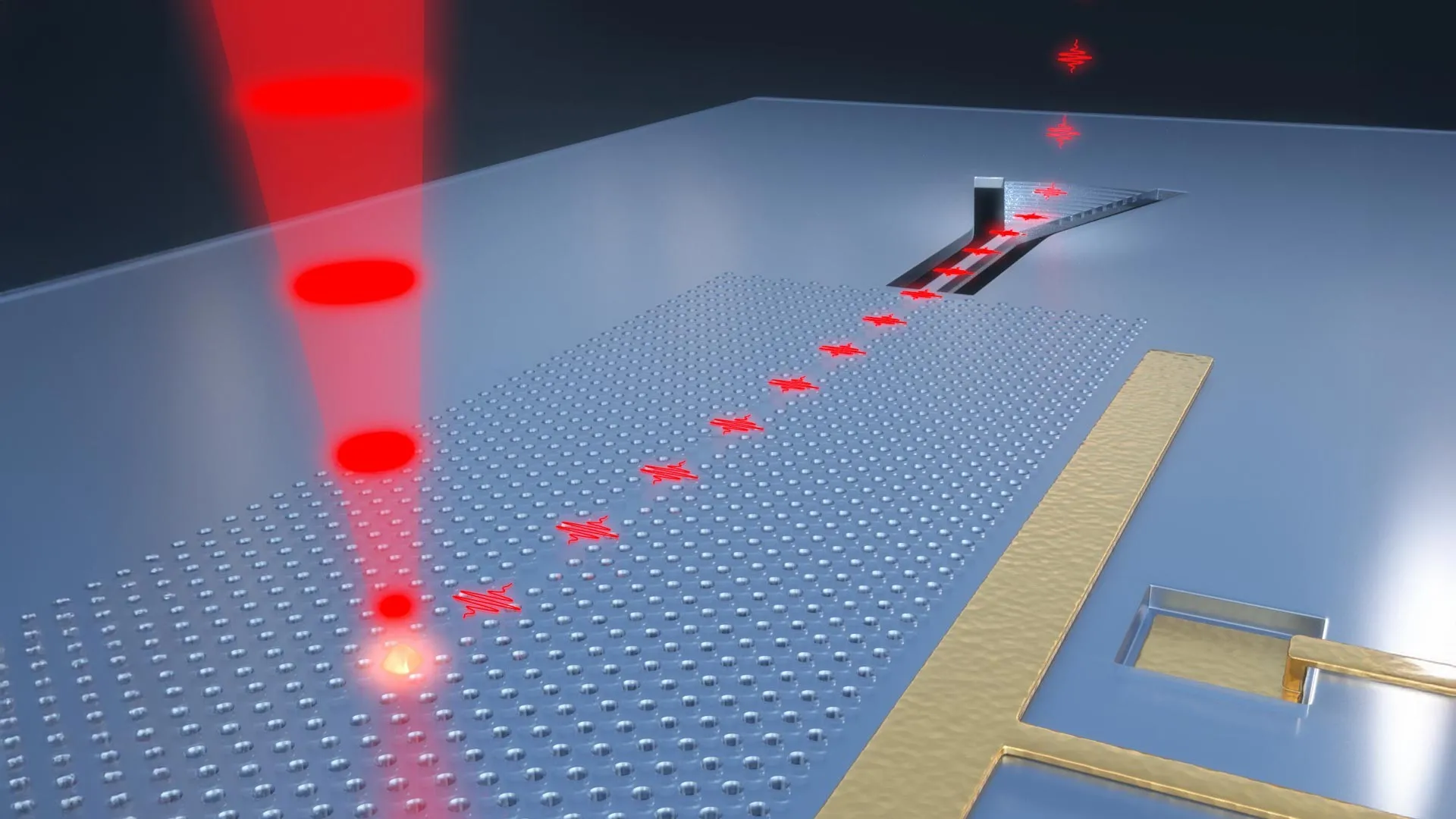

Firstly, the future trajectory of large language models (LLMs) remains uncertain. These models power much of what currently excites and alarms people about AI, from sophisticated AI companions to automated customer service agents. A plateau or slowdown in their improvement would represent a monumental shift and would necessitate a profound reevaluation of the AI landscape, a topic we explored in depth in a series of articles in December on the emergence of a post-AI-hype era and its potential implications. Whether LLMs continue their rapid ascent or level off is paramount, because it directly shapes the pace and nature of AI’s integration into society.

Secondly, public sentiment towards AI is strikingly, and perhaps surprisingly, negative, and that sentiment shows up as tangible opposition. Consider the ambitious $500 billion proposal by OpenAI CEO Sam Altman and President Trump to construct vast data centers across the United States for training increasingly massive AI models. The announcement, made with considerable fanfare, seemingly overlooked or underestimated how strongly many Americans resist having such facilities built in their communities. A year later, Big Tech is fighting an uphill battle to sway public opinion and secure the approvals it needs, and it remains an open question whether the companies can win over the public and continue their build-out plans.

The political response to this public frustration is complex and often contradictory. On one hand, President Trump has aligned with Big Tech by advocating that AI regulation be handled at the federal level, a move that would consolidate authority and streamline development, and that could pave the way for codifying the approach into law. On the other, a disparate coalition, ranging from progressive lawmakers in California to the increasingly Trump-aligned Federal Trade Commission (FTC), is expressing alarm over AI’s potential impact, particularly when it comes to protecting children from sophisticated chatbots. The FTC, notably, launched an inquiry into AI chatbots that act as companions. These groups have diverse motivations and approaches, and the crucial question is whether they can overcome their differences and effectively rein in the rapid, and sometimes unchecked, advancement of AI firms.

The third, and perhaps most nuanced, unanswered question relates to the tangible, positive applications of AI. In the midst of gloomy holiday dinner conversations, a common refrain emerges: "Isn’t AI being used for objectively good things? Improving health, driving scientific discoveries, and deepening our understanding of climate change?" The answer is not a simple "yes," but it contains elements of truth. Machine learning, a long-established form of AI that predates today’s chatbots, has indeed been instrumental in a wide array of scientific endeavors. Deep learning, a subfield of machine learning, forms a critical component of AlphaFold, a Nobel Prize-winning tool that has revolutionized protein structure prediction and significantly advanced the field of biology. Image recognition models, meanwhile, are becoming more accurate at identifying cancerous cells, offering promising avenues for early diagnosis and treatment.

However, the track record for chatbots, built upon the more recent advancements in large language models, is considerably more modest. Technologies like ChatGPT excel at analyzing vast repositories of research to synthesize existing knowledge, but claims that they have produced genuine scientific breakthroughs, such as solving previously intractable mathematical problems, have often proven to be unsubstantiated or, in some high-profile cases, outright bogus. These AI models can indeed serve as valuable assistants to medical professionals in diagnostic processes. Yet they also carry the significant risk of encouraging individuals to self-diagnose health issues without consulting qualified medical practitioners, a practice that can lead to perilous outcomes. The potential for AI to empower individuals with information must be carefully balanced against the need for professional medical guidance.

As we look ahead to this time next year, it is highly probable that we will possess more definitive answers to the questions that preoccupy my family and countless others. Simultaneously, a fresh set of entirely new questions will undoubtedly emerge, reflecting the dynamic and often unpredictable evolution of AI. In the interim, we encourage you to delve into our comprehensive analysis, "What’s Next for AI in 2026," which features insightful predictions from the entire AI team at MIT Technology Review, offering a valuable guide through the unfolding AI landscape.