In a field that changes as quickly as AI, making predictions can seem like a daring endeavor. Still, for several years running, MIT Technology Review has taken on the challenge in its "What’s Next" series, which offers an early look at emerging trends and technologies across various sectors. This year, we look at the most significant developments we expect in AI in 2026.

Looking back at our predictions for 2025, several have come to pass. "Generative virtual playgrounds," now commonly called world models, have advanced significantly, as shown by Google DeepMind’s Genie 3 and World Labs’ Marble, both of which generate realistic virtual environments on demand. "Reasoning models" have become the new paradigm for sophisticated problem-solving and the benchmark for advanced AI capabilities. The predicted "boom in AI for science" has also materialized, with companies like OpenAI setting up dedicated science teams, mirroring Google DeepMind’s effort. The trend of "AI companies becoming cozier with national security" was starkly illustrated by OpenAI’s pivot to working with defense-tech firm Anduril on counter-drone operations. "Legitimate competition for Nvidia" remains a work in progress: China is investing heavily in advanced AI chips, but Nvidia’s dominance looks secure for now.

With this backdrop, MIT Technology Review presents its top predictions for the AI landscape in 2026:

More Silicon Valley Products Will Be Built on Chinese LLMs

The past year witnessed a significant surge in the prominence of Chinese open-source large language models (LLMs). In January, DeepSeek’s release of R1, its open-source reasoning model, stunned the AI community, demonstrating the remarkable capabilities achievable by a relatively small Chinese firm with limited resources. The term "DeepSeek moment" quickly became a common refrain among AI entrepreneurs and observers, signifying an aspirational benchmark for performance achievable without relying on established Western giants like OpenAI, Anthropic, or Google.

Access to top-tier performance without the constraints of proprietary systems has made open-weight models like R1 an attractive option. These models can be downloaded and run on one’s own hardware, and they can be customized more freely through techniques such as distillation and pruning. This stands in stark contrast to the "closed" models from major American firms, whose weights remain proprietary and whose access is often costly. As a result, Chinese models have become a pragmatic choice for many developers. Reports from CNBC and Bloomberg indicate that US startups are increasingly recognizing and adopting them.

Alibaba’s Qwen family of models, developed by the company behind China’s largest e-commerce platform, Taobao, has garnered substantial attention. Qwen2.5-1.5B-Instruct alone has 8.85 million downloads, making it one of the most widely used pre-trained LLMs. The Qwen series spans a wide range of model sizes, along with specialized versions optimized for mathematics, coding, vision, and instruction following, which has cemented its position as an open-source powerhouse.

Inspired by DeepSeek’s success, other Chinese AI companies that had previously hesitated to go open source are now embracing the strategy, notably Zhipu with GLM and Moonshot with Kimi. This competitive pressure has also pushed some American firms to release more open models. In August, OpenAI released its first open-weight language models since GPT-2, and in November, the Allen Institute for AI launched its latest open-source offering, Olmo 3.

Despite escalating geopolitical tensions between the US and China, Chinese AI firms’ widespread embrace of open source has earned goodwill within the global AI community and built long-term trust. In 2026, expect more Silicon Valley applications to quietly run on Chinese open models, and expect the lag between Chinese model releases and comparable Western ones to shrink from months to weeks, and sometimes even less.

— Caiwei Chen

The US Will Face Another Year of Regulatory Tug-of-War

The ongoing debate surrounding AI regulation is escalating towards a critical juncture. On December 11, President Donald Trump signed an executive order designed to limit the authority of state AI laws, aiming to prevent individual states from imposing stringent regulations on the rapidly expanding AI industry. This move signals an impending period of intense political contention in 2026. The White House and state governments will engage in a power struggle over the governance of this burgeoning technology, while AI companies will escalate their lobbying efforts to thwart regulatory measures. Their central argument will be that a fragmented landscape of state laws will stifle innovation and undermine the US’s competitive edge in the global AI arms race against China.

Under President Trump’s executive order, states that deviate from his vision of light-touch regulation could face lawsuits or the withholding of federal funding. Democratic states such as California, which recently enacted the nation’s first frontier AI law requiring companies to publish safety testing for their AI models, are likely to challenge the order in court, arguing that only Congress can override state legislation. States facing budget pressures or fearing political repercussions, however, may feel compelled to comply. Even so, state-level legislation on contentious AI issues is expected to continue, particularly in areas where the order itself leaves room for states to act. With chatbots accused of contributing to teen suicides and AI data centers consuming ever more energy, public pressure on states to impose guardrails is likely to intensify.

In lieu of state-specific legislation, President Trump has expressed his intention to collaborate with Congress on establishing a federal AI law. However, expectations for such legislation should be tempered. Congress’s repeated failure to pass a moratorium on state legislation in 2025 suggests that the passage of its own comprehensive AI bill in the upcoming year is improbable.

AI companies, including OpenAI and Meta, are expected to keep deploying well-funded super political action committees (super PACs) to back candidates who support their agendas and to target those who oppose them. Super PACs that favor AI regulation will, in turn, raise significant funds of their own to counter these efforts. Expect these groups to clash fiercely in the 2026 midterm elections.

As AI technology continues its rapid advancement, the determination to shape its trajectory will intensify, making 2026 another year characterized by a protracted regulatory tug-of-war with no immediate resolution in sight.

— Michelle Kim

Chatbots Will Change the Way We Shop

Imagine having a dedicated, round-the-clock personal shopper—an expert capable of instantly recommending the perfect gift for even the most discerning recipient, or meticulously compiling a list of the best bookcases within a strict budget. This AI assistant could analyze the pros and cons of kitchen appliances, compare them with seemingly identical alternatives, and secure the best deals. Once a decision is made, the chatbot would seamlessly handle all purchasing and delivery arrangements. This is not a distant fantasy, but a rapidly approaching reality driven by AI. Salesforce projects that AI will drive $263 billion in online purchases this holiday season, accounting for approximately 21% of all orders. Experts predict that AI-enhanced shopping will become an even more significant economic force in the coming years, with McKinsey estimating that agentic commerce could generate between $3 trillion and $5 trillion annually by 2030.

AI companies are heavily invested in streamlining the purchasing process through their platforms. Google’s Gemini app now leverages the company’s comprehensive Shopping Graph dataset, encompassing products and sellers, and can even utilize its agentic technology to place calls to retailers on behalf of users. In November, OpenAI introduced a ChatGPT shopping feature designed to rapidly generate buyer’s guides. Furthermore, OpenAI has established partnerships with major retailers like Walmart, Target, and Etsy, enabling direct product purchases within chatbot interactions.

As consumer engagement with AI chatbots continues to rise, and web traffic from search engines and social media experiences a decline, further strategic alliances are expected within the next year, solidifying chatbots’ role in the e-commerce landscape.

— Rhiannon Williams

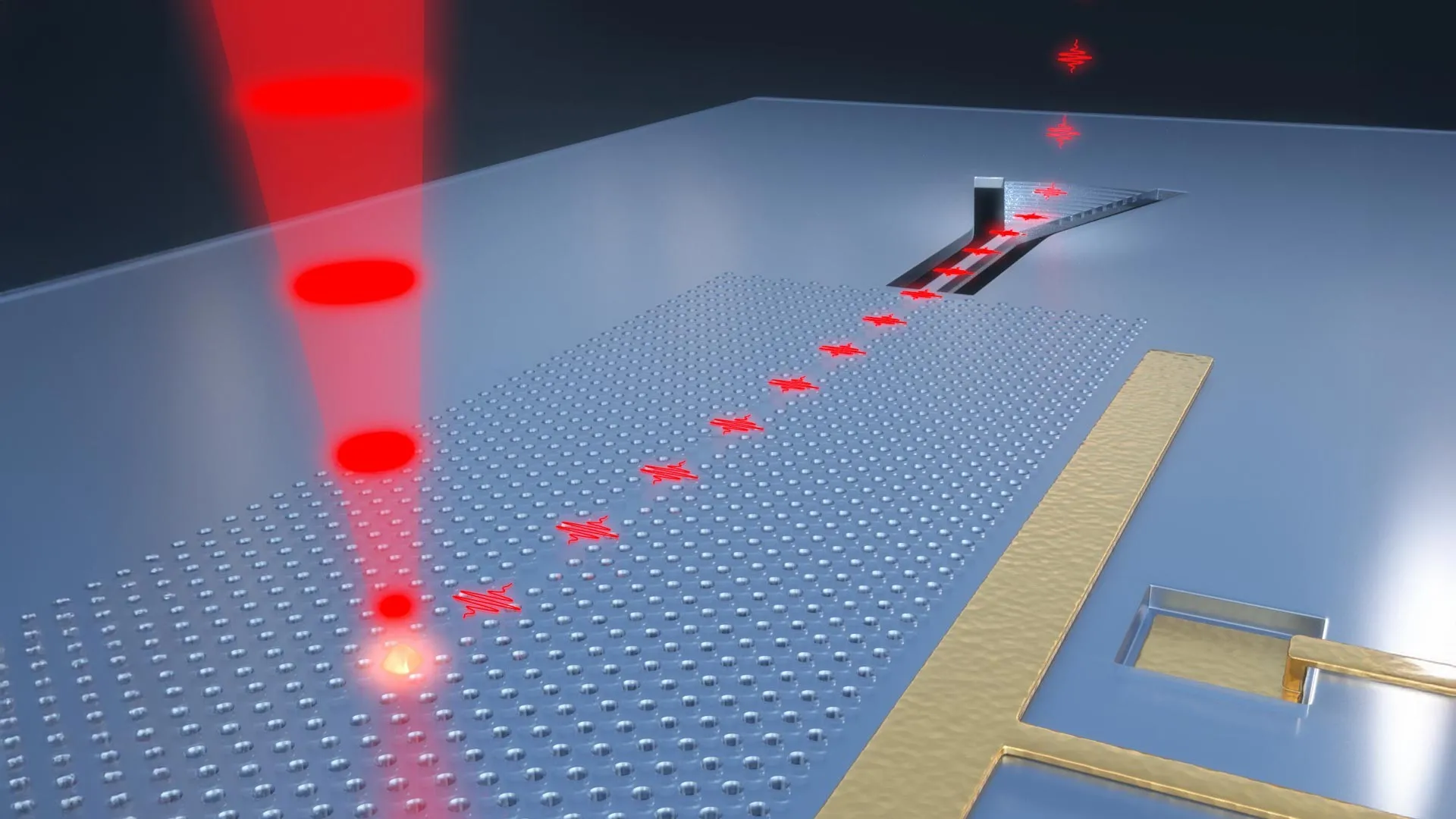

An LLM Will Make an Important New Discovery

Large language models (LLMs) still produce inaccuracies, but their potential to expand the boundaries of human knowledge remains significant, even if they rarely make discoveries without human guidance. A glimpse of this collaborative future came in May, when Google DeepMind unveiled AlphaEvolve. The system paired the company’s Gemini LLM with an evolutionary algorithm that acted as a validation mechanism: it evaluated the LLM’s suggestions, selected the most promising ones, and fed them back into the LLM to refine its output.
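To make the generate, evaluate, select, and feed-back loop concrete, here is a toy sketch in Python. It is not AlphaEvolve’s code or API. Two loud assumptions: the LLM call is replaced by a hypothetical `propose_variant` function that simply flips a random bit, and the automated scorer is a hypothetical `evaluate` function for a toy "count the ones" task. Only the overall shape of the loop is meant to match the description above.

```python
import random
from typing import List, Tuple

Candidate = List[int]


def evaluate(candidate: Candidate) -> int:
    """Automated scorer for a toy task (hypothetical): count the 1 bits.

    In an AlphaEvolve-style system this step would run and score the
    proposed solution; here a higher count simply means a better candidate.
    """
    return sum(candidate)


def propose_variant(parent: Candidate, rng: random.Random) -> Candidate:
    """Hypothetical stand-in for the LLM call.

    A real system would prompt the model with promising candidates and ask
    for an improved version. This stub just flips one randomly chosen bit.
    """
    child = parent.copy()
    i = rng.randrange(len(child))
    child[i] = 1 - child[i]
    return child


def evolve(length: int = 20, population_size: int = 8,
           generations: int = 200, seed: int = 0) -> Tuple[Candidate, int]:
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(population_size)]
    for _ in range(generations):
        # Generate: the proposer suggests one variant per current candidate.
        children = [propose_variant(p, rng) for p in population]
        # Evaluate and select: keep the top scorers among parents and children.
        pool = sorted(population + children, key=evaluate, reverse=True)
        # Feed back: the survivors seed the next round of proposals.
        population = pool[:population_size]
    best = max(population, key=evaluate)
    return best, evaluate(best)


if __name__ == "__main__":
    best, score = evolve()
    print(f"best score: {score}")
```

Because parents stay in the selection pool, the best score never drops from one round to the next. In a real system, the interesting work happens in the proposer, which is where the LLM’s suggestions come in.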

Google DeepMind utilized AlphaEvolve to develop more efficient methods for managing data center power consumption and optimizing the performance of Google’s TPU chips. While these discoveries are notable, they are not yet revolutionary. However, researchers at Google DeepMind are actively pushing the boundaries of this approach to ascertain its full potential.

The innovation has not gone unnoticed, and others quickly adopted similar methods. A week after AlphaEvolve’s debut, Asankhaya Sharma, an AI engineer in Singapore, released OpenEvolve, an open-source adaptation of Google DeepMind’s tool. In September, the Japanese firm Sakana AI introduced ShinkaEvolve, its own variant of the software. And a team of US and Chinese researchers unveiled AlphaResearch, which they claim improves on one of AlphaEvolve’s already better-than-human mathematical solutions.

Alternative approaches are also being explored. For instance, researchers at the University of Colorado Denver are attempting to enhance the inventiveness of LLMs by modifying the functionality of "reasoning models." They are drawing upon insights from cognitive science regarding human creative thinking to guide reasoning models toward more unconventional solutions, moving beyond their typical predictable suggestions.

Hundreds of companies are investing billions of dollars in efforts to use AI to solve hard math problems, speed up computers, and discover new drugs and materials. With AlphaEvolve demonstrating what LLMs can tangibly contribute to scientific discovery, activity in this area is poised to accelerate rapidly.

— Will Douglas Heaven

Legal Fights Heat Up

Initially, lawsuits against AI companies followed a predictable pattern: rights holders, such as authors and musicians, would sue companies for training AI models on their copyrighted material, and courts would generally rule in favor of the tech giants. However, the upcoming legal battles surrounding AI are expected to be significantly more complex and contentious.

These disputes will turn on thorny, unresolved legal questions. Can AI companies be held liable for what their chatbots encourage users to do, as in cases where chatbots have been accused of helping teenagers plan suicides? If a chatbot spreads demonstrably false information about a person, can its creator be sued for defamation? And if companies lose these cases, will insurers stop taking on AI companies as clients?

In 2026, preliminary answers to these questions will begin to emerge, partly due to notable cases proceeding to trial. For example, the family of a teenager who died by suicide plans to bring OpenAI to court in November.

At the same time, the legal landscape will be further complicated by President Trump’s December executive order, which aims to remove state-level obstacles to a national AI policy. As Michelle Kim explains in her prediction, the order is already stirring up a regulatory storm.

Regardless of the specific outcomes, a multifaceted array of lawsuits is anticipated across various legal domains. It is even possible that some judges, overwhelmed by the volume of AI-related cases, may turn to AI for assistance in navigating the deluge of legal challenges.

— James O’Donnell