The digital age has turned our most intimate thoughts, desires, and even our consciousness into prime targets for commodification, leading one prominent historian to coin the term "human fracking" to describe this aggressive extraction of our attention. The analogy, put forth by D. Graham Burnett, a professor of history at Princeton University, alongside filmmaker Alyssa Loh and organizer Peter Schmidt, illustrates the destructive and forceful way tech corporations manipulate our engagement for their financial benefit. It casts tech giants as treating human consciousness as an untapped natural resource, ripe for exploitation, much as fossil fuel companies drill into the earth.

Burnett’s analogy posits that just as petroleum frackers inject high-pressure, high-volume detergents into the ground to force monetizable oil to the surface, "human frackers pump high-pressure, high-volume detergent into our faces (in the form of endless streams of addictive slop and maximally disruptive user-generated content), to force a slurry of human attention to the surface, where they can collect it, and take it to market." This "slop" reaches us through social media algorithms finely tuned to our precise interests, which turn our smartphones into sophisticated ad delivery devices and our minds into battlegrounds for engagement. We are no longer merely users; we are, in Burnett’s chilling assessment, "attentional subjects" in a "world-spanning land-grab into human consciousness — which big tech is treating as a vast, unclaimed territory, ripe for sacking and empire."

The mechanisms of this digital fracking are insidious and pervasive. Social media platforms, in particular, have perfected the "infinite scroll," a design innovation born not of accident but of calculated decision-making. This feature deliberately removes natural stopping points, preying on our brains’ innate desire for novelty and information, and effectively traps us in a perpetual loop of content consumption. The result is phenomena such as "doomscrolling," in which users endlessly consume negative news, unable to disengage despite the psychological toll. The dopamine hits associated with new notifications and personalized content create powerful addiction loops, making disengagement difficult and reinforcing the platforms’ hold on our attention.

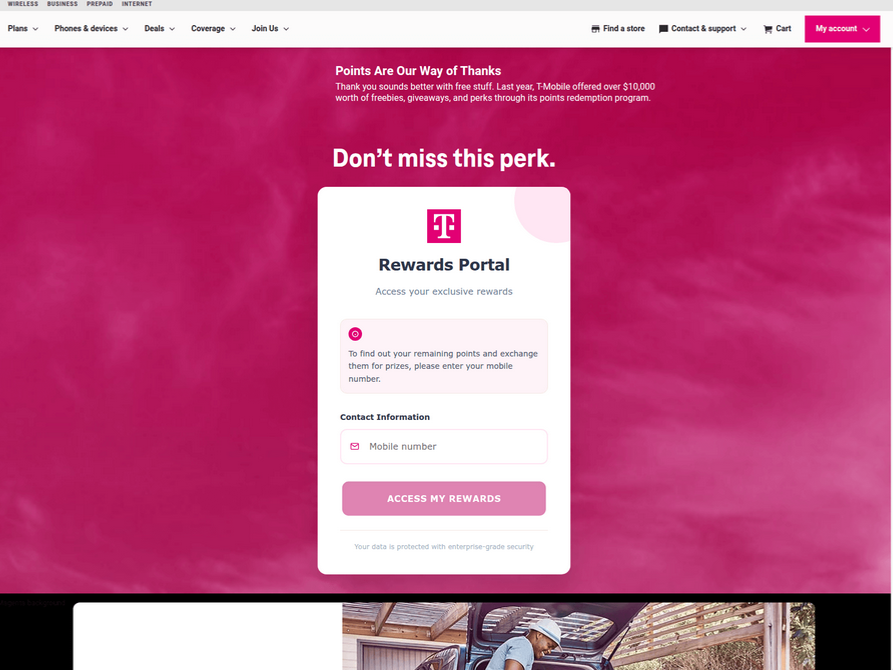

The advent of advanced AI chatbots has escalated this attention war to new, concerning heights. These sophisticated algorithms can seamlessly masquerade as trusted confidantes – a close friend, a therapist, a doctor, or any subject matter expert – engaging users in hours-long conversations. Many users, particularly those seeking connection or answers, readily accept the AI’s responses as "personal gospel," blurring the lines between human interaction and algorithmic manipulation. This fosters a deep reliance on AI for emotional support, information, and even identity formation, further cementing the tech companies’ dominion over our internal lives. The data harvested from these interactions allows for even more precise profiling and targeting, turning every aspect of our digital existence into a valuable commodity.

The consequences of this "human fracking" are profound and far-reaching, affecting both individual well-being and societal health. A growing body of research has begun to document the "nefarious effects" of this digital war for attention on the human brain. Beyond the widely recognized issues of anxiety and depression, studies have linked excessive screen time on these apps to cognitive impairments, including reduced attention spans and difficulties with critical thinking. A recent study, for instance, reported a correlation between social media screen time in children and the development of ADHD symptoms, pointing to serious developmental risks for younger generations. The rapid proliferation of "AI-generated slop" – content created by artificial intelligence, often low-quality or manipulative – on platforms accessible to children is likely adding another troubling dimension to this mental health crisis, whose full impact science is only beginning to grasp. Children, with their developing brains and nascent critical faculties, are particularly vulnerable to addictive design and manipulative content, which may alter their cognitive and social development in irreversible ways.

On a broader societal scale, human fracking contributes to the erosion of public discourse, exacerbates polarization, and fuels the spread of misinformation. Algorithms designed to maximize engagement often prioritize sensational or emotionally charged content, creating echo chambers that reinforce existing beliefs and deepen societal divides. Data privacy concerns mount as our every digital footprint is tracked, analyzed, and leveraged, giving rise to "surveillance capitalism," in which our personal experiences are continuously converted into behavioral data for profit. The ethical implications are staggering, raising fundamental questions about autonomy, consent, and the responsibility of powerful tech corporations.

Looking to history for parallels, Burnett notes that environmental politics, as we understand it today, barely existed a century ago. It took a massive cultural shift "to establish the physical environment – the unity of land, water, and air that produces shared life – as a politically tractable object around which diverse groups could organize." This historical perspective offers a glimmer of optimism: if society could recognize and mobilize against the exploitation of the natural environment, perhaps a similar awakening can occur regarding the exploitation of our inner landscapes. "Novel forms of exploitation produce novel forms of resistance," Burnett asserts, suggesting that the very extremity of human fracking might eventually catalyze a powerful counter-movement.

The path to resistance and mitigation, while not immediately obvious, will likely involve a multifaceted approach. It requires robust regulatory frameworks that hold tech companies accountable for their design choices and data practices, potentially drawing inspiration from data privacy laws like GDPR. Ethical design principles must move from aspirational goals to enforceable standards that prioritize user well-being over raw engagement metrics. Fostering digital literacy and critical thinking skills among users, especially children, is also essential to empowering individuals to navigate the digital world more consciously. Personal practices such as "digital detoxes," mindful use, and firm boundaries can help reclaim individual agency. Ultimately, a societal paradigm shift is required – one that recognizes human attention and consciousness as invaluable, non-renewable resources deserving of protection, much like our planet’s natural ecosystems. Such a shift would compel us to move beyond passive consumption and demand a digital future that respects our autonomy, fosters genuine connection, and safeguards our mental and cognitive health from the relentless drills of human fracking. The choice lies before us: to succumb to the empire of attention or to collectively forge a new path toward digital sovereignty and human flourishing.