An international team of researchers has simulated a specific type of error-corrected quantum computation on a classical computer, a task previously considered computationally intractable. The result, described as a world first, comes from scientists at Chalmers University of Technology in Sweden, the University of Milan in Italy, the University of Granada in Spain, and the University of Tokyo in Japan, and it addresses a fundamental hurdle on the path to reliable, practical quantum computers.

Quantum computers have the potential to tackle complex problems that lie far beyond the reach of today's most powerful supercomputers, with projected applications in drug discovery, energy, encryption, artificial intelligence, and logistics. Realizing that promise depends on overcoming a significant challenge: quantum computations are inherently fragile and error-prone. In conventional computers, errors can be detected and corrected efficiently using established methods. Quantum systems are far more susceptible to disturbances, which makes error correction considerably harder. Without fault tolerance, the ability to compute reliably despite errors, quantum computers remain unreliable for many critical applications.

To check that quantum algorithms produce correct results, researchers typically simulate the quantum processes on classical computers. This verification step is indispensable, but it becomes extraordinarily demanding for quantum computations designed for error correction. These systems are so complex that simulating them, even on the world’s most powerful supercomputers, could take as long as the age of the universe. As a result, certain important error-correcting quantum codes have been effectively impossible to simulate, which has slowed the progress of quantum technology.

The collaboration has now overcome this barrier. The researchers present a method that accurately simulates a specific class of quantum computations that is particularly well suited to error correction but has historically resisted simulation on classical hardware. The method addresses a critical bottleneck in quantum research and opens new avenues for validation and development.

Cameron Calcluth, a PhD student in Applied Quantum Physics at Chalmers and lead author of the study published in Physical Review Letters, said: "We have discovered a way to simulate a specific type of quantum computation where previous methods have not been effective. This means that we can now simulate quantum computations with an error correction code used for fault tolerance, which is crucial for being able to build better and more robust quantum computers in the future."

The difficulty of correcting errors in quantum computers stems from their fundamental building blocks, qubits. Qubits draw their computational power from quantum phenomena such as superposition, which allows them to exist in multiple states simultaneously, but they are also extraordinarily sensitive to their environment. The state space of a quantum computer doubles with each additional qubit, so its computational capacity grows exponentially with the number of qubits, and that power comes at the cost of extreme vulnerability to external disturbances.
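To make the exponential growth concrete, here is a minimal Python sketch of how much memory a classical computer would need just to store the full state of n qubits. The function name `state_vector_bytes` and the figure of 16 bytes per amplitude (one double-precision complex number) are assumptions made for illustration. This is a back-of-the-envelope estimate, not a description of how any particular simulator works.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to store all 2**n_qubits complex amplitudes of an n-qubit state.

    Assumes one complex128 value (16 bytes) per amplitude; this is an
    illustrative assumption, not a property of any specific simulator.
    """
    return (2 ** n_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    # Each extra qubit doubles the memory requirement.
    for n in (10, 30, 50):
        gib = state_vector_bytes(n) / 2 ** 30
        print(f"{n} qubits: {gib:,.6f} GiB")
```

Under these assumptions, 30 qubits already require 16 GiB, and every additional qubit doubles that figure.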

"The slightest noise from the surroundings in the form of vibrations, electromagnetic radiation, or a change in temperature can cause the qubits to miscalculate or even lose their quantum state, their coherence, thereby also losing their capacity to continue calculating," explained Calcluth. This fragility necessitates sophisticated error correction mechanisms to maintain the integrity of quantum information.

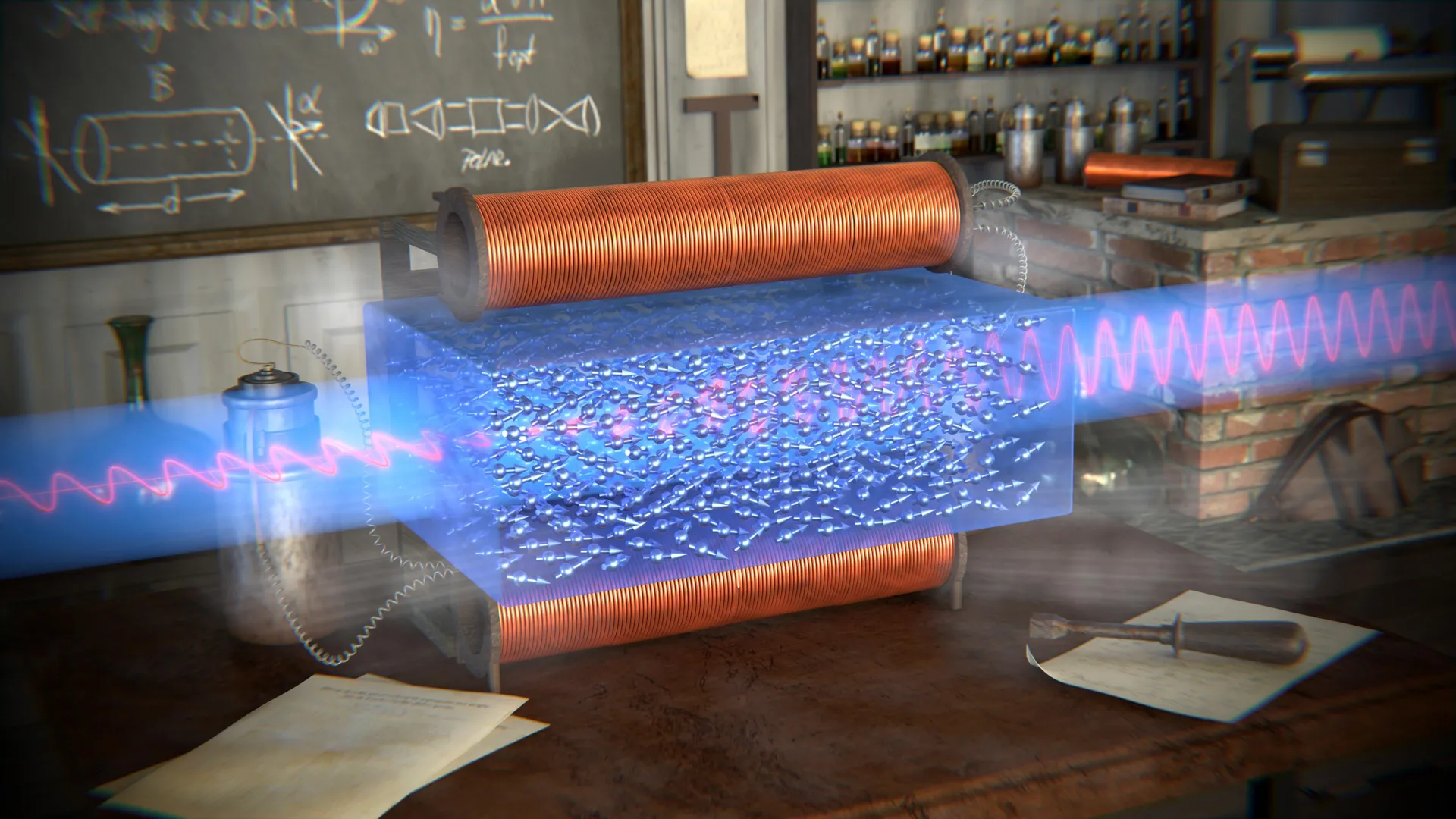

Error correction codes work by distributing quantum information across multiple interconnected systems, so that errors can be detected and corrected without disturbing the delicate quantum state. One particularly promising approach, known as a bosonic code, encodes the quantum information of a single qubit in the many (potentially infinite) energy levels of a quantum mechanical system. The multi-level nature of these systems, however, makes them exceptionally challenging to simulate on classical computers. Until now, reliably simulating quantum computations that use bosonic codes has been out of reach.
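The idea of spreading information out so that errors can be located without reading the protected value can be illustrated with a much simpler scheme. The sketch below is a classical three-bit repetition code with parity checks, used here only as an analogy. It is not a bosonic code and not the method from the study, and all function names are hypothetical.

```python
def encode(bit: int) -> list[int]:
    """Spread one logical bit across three physical bits."""
    return [bit, bit, bit]


def syndrome(block: list[int]) -> tuple[int, int]:
    """Parity checks on neighboring bits; they reveal where an error is,
    not what the encoded value is."""
    return (block[0] ^ block[1], block[1] ^ block[2])


# Which position to flip for each syndrome; None means no error detected.
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(block: list[int]) -> list[int]:
    """Undo a single bit flip using only the syndrome."""
    fixed = list(block)
    position = CORRECTION[syndrome(fixed)]
    if position is not None:
        fixed[position] ^= 1
    return fixed


def decode(block: list[int]) -> int:
    """Read the logical bit from a corrected block."""
    return block[0]


if __name__ == "__main__":
    noisy = encode(1)
    noisy[2] ^= 1  # a single bit-flip error on the last bit
    print(syndrome(noisy), decode(correct(noisy)))
```

This toy code handles at most one flipped bit per block. Quantum codes must also protect against phase errors and cannot copy states, which is part of why they are so much harder to design and simulate.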

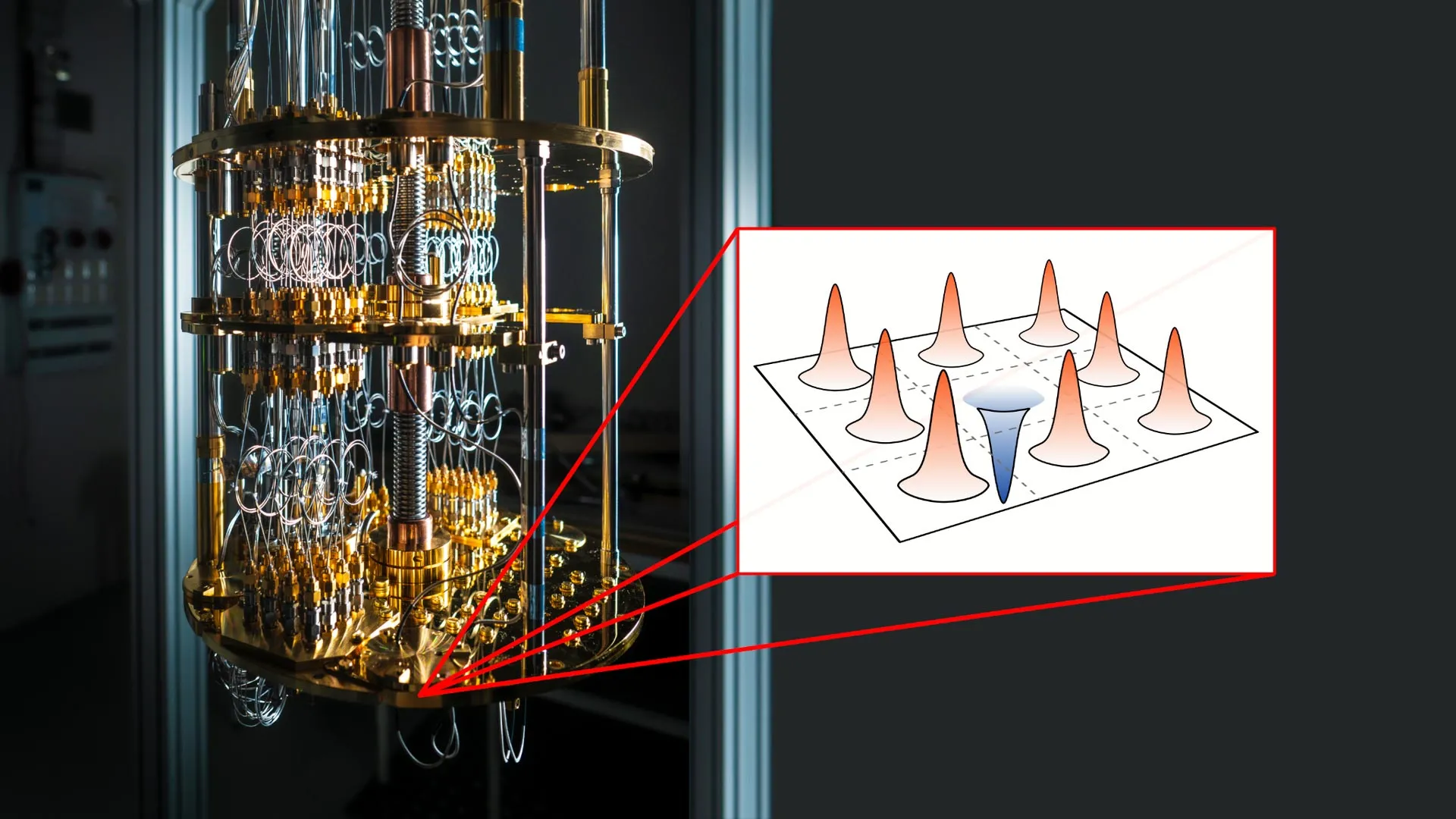

The researchers’ method centers on a new algorithm for simulating quantum computations that use a specific type of bosonic code, the Gottesman-Kitaev-Preskill (GKP) code. The GKP code is widely used in leading quantum computing architectures because it is robust against noise: the way it encodes quantum information makes it more forgiving of environmental disturbances, which is critical for achieving fault tolerance. The same deeply quantum mechanical nature, however, has made GKP codes notoriously difficult to simulate on classical computers.
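For readers who want a picture of what such a code looks like, the textbook form of the ideal qubit GKP states places the two logical states on interleaved combs of position eigenstates. This is an idealization in units where $\hbar = 1$, not the realistic odd-dimensional states treated in the study:

$$
|0_L\rangle \propto \sum_{n \in \mathbb{Z}} |q = 2n\sqrt{\pi}\rangle, \qquad
|1_L\rangle \propto \sum_{n \in \mathbb{Z}} |q = (2n+1)\sqrt{\pi}\rangle
$$

Neighboring peaks of the two states are separated by $\sqrt{\pi}$, so a small displacement in position with magnitude below $\sqrt{\pi}/2$ can be identified and undone by shifting back to the nearest peak. Realistic GKP states replace these infinitely sharp peaks with narrow Gaussians under a broad envelope.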

Giulia Ferrini, an Associate Professor of Applied Quantum Physics at Chalmers and a co-author of the study, highlighted the significance of their achievement: "The way it stores quantum information makes it easier for quantum computers to correct errors, which in turn makes them less sensitive to noise and disturbances. Due to their deeply quantum mechanical nature, GKP codes have been extremely difficult to simulate using conventional computers. But now we have finally found a unique way to do this much more effectively than with previous methods."

The key to their success lies in the development of a novel mathematical tool that allows their algorithm to effectively handle the intricacies of the GKP code. This new approach enables researchers to more reliably test and validate the calculations performed by quantum computers, a capability that was previously out of reach for these specific types of error-corrected computations.

"This opens up entirely new ways of simulating quantum computations that we have previously been unable to test but are crucial for being able to build stable and scalable quantum computers," Ferrini added, underscoring the broad implications of their work.

The research is described in the article "Classical simulation of circuits with realistic odd-dimensional Gottesman-Kitaev-Preskill states," published in Physical Review Letters. The authors, Cameron Calcluth, Giulia Ferrini, Oliver Hahn, Juani Bermejo-Vega, and Alessandro Ferraro, are affiliated with Chalmers University of Technology (Sweden), the University of Milan (Italy), the University of Granada (Spain), and the University of Tokyo (Japan). By making it feasible to simulate robust error-corrected quantum computations, the work gives the quantum computing community a new tool for developing more stable and scalable quantum computers.