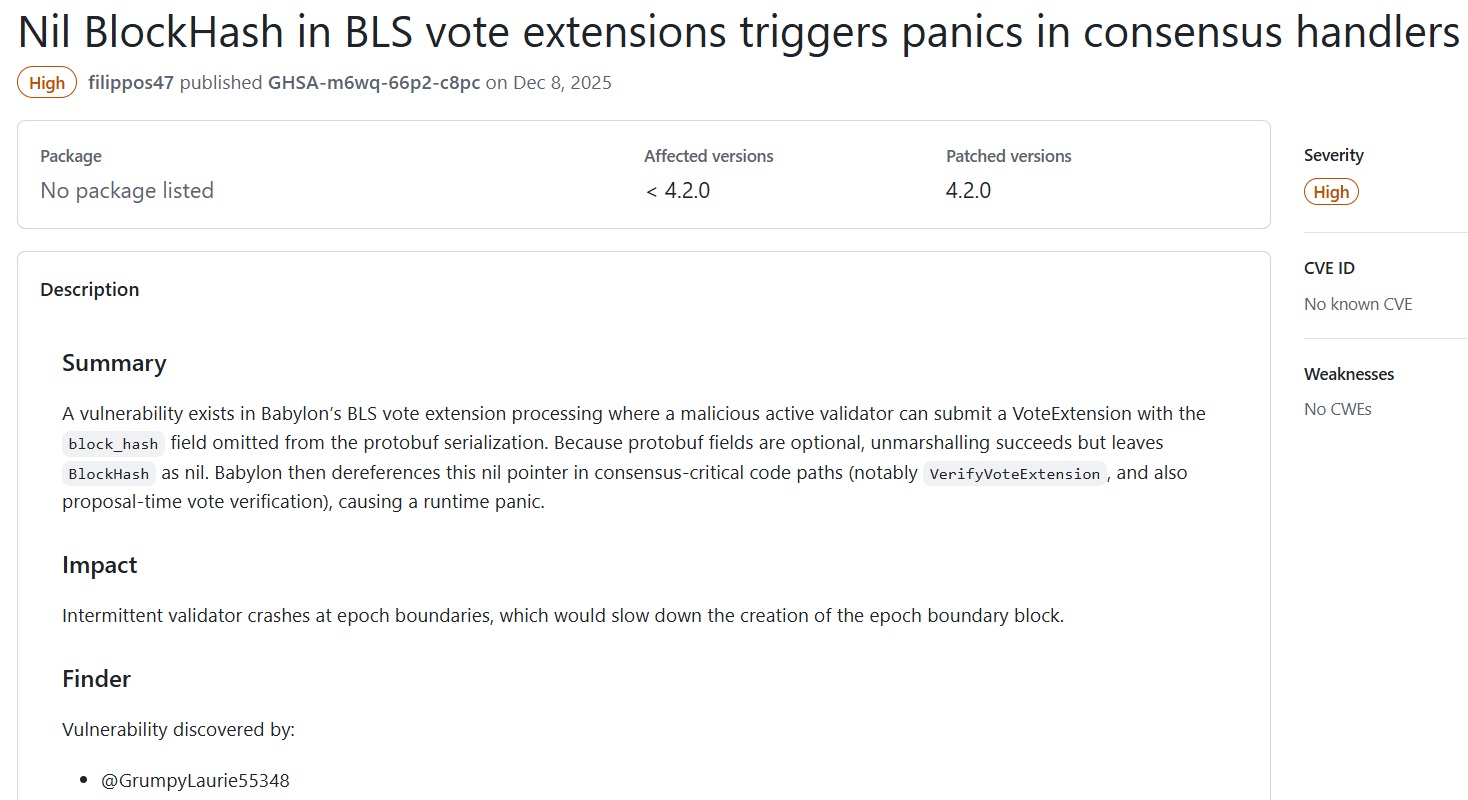

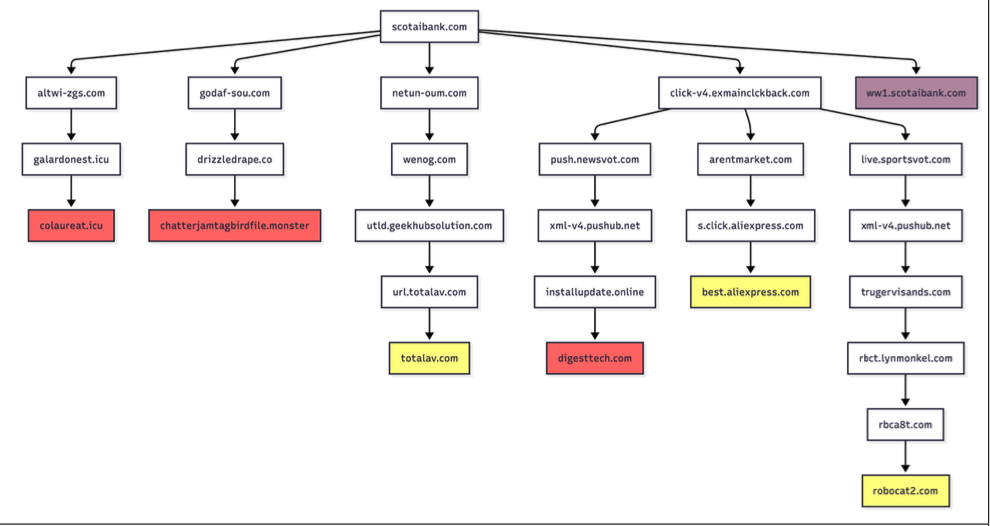

Nvidia’s Rubin platform, the company’s newest AI supercomputing architecture, is designed to sharply cut the cost of running advanced AI models. At first glance, that goal challenges the economics of crypto networks like Render, Akash, and Golem, which are built to monetize scarce GPU compute. Yet rather than undermining them, cheaper compute may fuel a surge in demand that strengthens the case for these decentralized compute providers.

Officially launched Monday at CES 2026, Rubin is Nvidia’s newest computing architecture, built to improve the efficiency of both training and running AI models. Named "Vera Rubin" after the American astronomer Vera Florence Cooper Rubin, whose work provided key evidence for dark matter, the platform is more than an incremental upgrade: it is a co-designed system of six specialized chips that work together as a single AI engine. Nvidia CEO Jensen Huang said the platform was already in "full production."

For crypto projects whose economic model assumes that GPU compute will remain scarce and valuable, Nvidia’s efficiency gains look like a threat at first. If each AI task requires fewer compute cycles and less energy, the value of individual GPUs, and of the tokens that represent access to them, could in theory decline, eroding the economics of decentralized compute networks.

History suggests otherwise. From personal computers to cloud infrastructure, past gains in computing efficiency have increased overall demand rather than reduced it. Cheaper, more accessible, and more capable compute has repeatedly unlocked new workloads and applications, pushing aggregate usage higher even as the cost per unit of computation fell.

Investors appear to be betting that the same dynamic will hold for AI compute. Over the past week, tokens tied to GPU-sharing networks such as Render (RENDER), Akash (AKT), and Golem (GLM) have each climbed more than 20%, suggesting the market expects Nvidia’s advances to expand the overall compute market and create new opportunities for decentralized providers rather than stifle them.

Crucially, most of Rubin’s efficiency gains are concentrated in hyperscale data centers. These "AI factories" are built around large, long-term contracts and tightly synchronized, mission-critical AI training runs. That focus leaves room for blockchain-based compute networks to compete for short-term jobs, burst workloads, and other tasks that fall outside the scope of hyperscale infrastructure.

The evolution of cloud computing offers a recent example of efficiency expanding demand. Affordable, flexible access to compute through providers like Amazon Web Services (AWS) lowered barriers for developers and startups. The result was a wave of new workloads and applications that ultimately consumed far more compute than before, contradicting the assumption that greater efficiency automatically reduces demand. In computing, it rarely does: as costs fall, new users enter the market, existing users run more workloads, and applications that were previously too expensive or complex become viable.

In economics, this is known as the Jevons Paradox, after William Stanley Jevons, who described it in his 1865 book "The Coal Question." Jevons observed that more efficient coal-fired steam engines did not reduce overall coal consumption. By making steam power cheaper and more accessible, they spurred a broad expansion of industrial activity and raised total demand for coal. Applied to AI and crypto-based compute networks, the Jevons Paradox suggests that a more efficient, and therefore cheaper, AI ecosystem will not automatically reduce GPU demand and is likely to increase it.
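The Jevons argument can be made concrete with a toy model. The sketch below is an illustration under stated assumptions, not a model from Nvidia or from the networks discussed here: it assumes demand for useful AI work has a constant price elasticity, that the price of a GPU-hour stays fixed, and that an efficiency gain only changes how much work each GPU-hour produces. The function name `gpu_hours_demanded` and all numbers are hypothetical.

```python
def gpu_hours_demanded(efficiency: float, cost_per_gpu_hour: float,
                       elasticity: float, scale: float = 1.0) -> float:
    """Toy constant-elasticity model of GPU demand (hypothetical illustration).

    efficiency        -- units of useful AI work produced per GPU-hour
    cost_per_gpu_hour -- price of one GPU-hour, assumed unchanged by the upgrade
    elasticity        -- price elasticity of demand for useful work
    scale             -- arbitrary demand constant
    """
    # A more efficient GPU makes each unit of useful work cheaper.
    price_per_unit_work = cost_per_gpu_hour / efficiency
    # Constant-elasticity demand: work demanded = scale * price^(-elasticity).
    work_demanded = scale * price_per_unit_work ** (-elasticity)
    # GPU-hours actually consumed to deliver that much work.
    return work_demanded / efficiency


# A hypothetical 4x efficiency gain, compared across two demand elasticities.
for eps in (0.5, 1.5):
    before = gpu_hours_demanded(efficiency=1.0, cost_per_gpu_hour=1.0, elasticity=eps)
    after = gpu_hours_demanded(efficiency=4.0, cost_per_gpu_hour=1.0, elasticity=eps)
    print(f"elasticity={eps}: GPU-hours {before:.2f} -> {after:.2f}")
```

With an elasticity of 0.5, GPU-hours fall from 1.00 to 0.50; with an elasticity of 1.5, they double from 1.00 to 2.00. Algebraically, the model reduces to GPU-hours proportional to efficiency^(elasticity - 1), so whether efficiency raises or lowers total GPU use hinges on whether demand for AI work is elastic (above 1), which is the empirical bet the Jevons argument makes.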

For decentralized compute networks, this creates an opening: demand can shift toward short-term, flexible, and often specialized workloads that do not fit the long-term contracts of hyperscale providers. In practice, networks like Render, Akash, and Golem compete not on raw processing power against Nvidia’s most advanced systems, but on flexibility, accessibility, and specialization. Their value lies in aggregating idle or underused GPUs from many providers and routing short-lived jobs to wherever capacity is available. This model benefits from rising overall demand for compute but does not depend on controlling the leading edge of hardware or winning large, sustained AI training contracts from hyperscalers.
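The routing idea can be sketched in a few lines. This is a minimal, hypothetical greedy matcher, assuming each job asks for a fixed number of GPUs and each provider advertises an idle GPU count and an hourly price; the `Provider`, `Job`, and `route_jobs` names and all prices are invented for illustration. Render, Akash, and Golem each use their own matching, pricing, and settlement mechanisms, which this sketch does not attempt to reproduce.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Provider:
    """A hypothetical GPU provider advertising idle capacity."""
    name: str
    free_gpus: int
    price_per_gpu_hour: float


@dataclass
class Job:
    """A hypothetical short-lived job requesting a number of GPUs."""
    job_id: str
    gpus_needed: int


def route_jobs(jobs: List[Job], providers: List[Provider]) -> Dict[str, Optional[str]]:
    """Greedily send each job to the cheapest provider with enough idle GPUs.

    Returns a mapping of job_id to provider name, or None when no provider
    currently has room (the job would wait or be retried later).
    """
    assignments: Dict[str, Optional[str]] = {}
    for job in jobs:
        # Only providers with enough idle GPUs can take this job.
        candidates = [p for p in providers if p.free_gpus >= job.gpus_needed]
        if not candidates:
            assignments[job.job_id] = None
            continue
        chosen = min(candidates, key=lambda p: p.price_per_gpu_hour)
        chosen.free_gpus -= job.gpus_needed  # reserve the GPUs for this job
        assignments[job.job_id] = chosen.name
    return assignments


providers = [
    Provider("gaming-rig", free_gpus=1, price_per_gpu_hour=0.40),
    Provider("small-datacenter", free_gpus=8, price_per_gpu_hour=0.55),
    Provider("ex-mining-site", free_gpus=4, price_per_gpu_hour=0.50),
]
jobs = [Job("render-a", 1), Job("inference-b", 4), Job("finetune-c", 8), Job("render-d", 1)]
result = route_jobs(jobs, providers)
print(result)
```

Here the single-GPU render job lands on the cheap gaming rig, the four-GPU job on the former mining site, the eight-GPU job on the small data center, and the last job gets `None` because all idle capacity is taken. Cheapest-feasible greedy matching is chosen only for brevity; a production scheduler would also weigh provider reliability, latency, and job duration.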

Render, for instance, is a decentralized GPU rendering network, while Akash operates a decentralized cloud compute marketplace. Both let users rent GPU power for compute-intensive tasks, from 3D rendering and visual effects to AI inference and development workloads, without heavy upfront investment in dedicated infrastructure or the complex, often opaque pricing of hyperscale cloud providers. Golem, similarly, is a decentralized marketplace that connects owners of unused computing power, including GPUs, with those who need it. These networks can deliver reliable performance for batch workloads and rendering tasks, but they are not designed to replicate the predictability, tight synchronization, and long-duration availability that hyperscalers guarantee for enterprise-grade AI training. They serve a different segment of the market.

Despite Nvidia’s advances, a persistent factor sustaining demand for decentralized compute is the ongoing GPU shortage. It is not a passing market fluctuation but stems from constraints in the semiconductor supply chain. High-bandwidth memory (HBM), a critical component of modern AI GPUs, is expected to remain in severe shortage through at least 2026, according to component distributors such as Fusion Worldwide. HBM supplies the memory bandwidth needed to train and run large AI models efficiently, so its shortage directly caps the number of high-end AI GPUs that can be manufactured and shipped.

The constraint starts at the top of the semiconductor supply chain. SK Hynix and Micron, two of the world’s largest HBM producers, have both said their 2026 output is already sold out, and Samsung has warned of double-digit HBM price increases as demand outpaces supply. The shortage will not be resolved quickly: scaling HBM production requires complex manufacturing processes and heavy capital investment with long lead times.

Crypto miners were once blamed, sometimes rightly, for GPU shortages, particularly during the Ethereum mining boom. Today, demand from the AI industry is the main force straining the supply chain. Hyperscale cloud providers, tech giants, and leading AI labs are locking up multi-year allocations of memory, advanced packaging capacity (such as TSMC’s CoWoS), and leading-edge silicon wafers to secure future capacity. That leaves little slack elsewhere in the market and makes it hard for smaller enterprises, startups, and independent developers to get the GPUs they need.

This persistent scarcity is a key reason decentralized compute markets can continue to exist and even thrive. Render, Akash, and Golem operate outside the hyperscale supply chain. They aggregate underused GPUs from a diverse pool of providers, from individual enthusiasts with powerful gaming rigs to small data centers with surplus capacity, and offer access on flexible, short-term terms. They do not solve the global shortage of cutting-edge GPUs, but they give developers and workloads that cannot secure long-term contracts or dedicated capacity in the tech giants’ "AI factories" an alternative point of access.

The AI boom is also reshaping the crypto mining industry, particularly as Bitcoin (BTC) halvings, which occur roughly every four years, cut the block rewards paid to miners. That pressure is pushing many miners to rethink how they use their infrastructure. Large mining sites, built around reliable power, advanced cooling, and ample physical space, closely resemble what modern AI data centers require. With hyperscalers absorbing much of the available GPU supply, these sites are becoming increasingly valuable for AI and other high-performance computing (HPC) workloads.

The pivot is already visible. In November, Bitcoin miner Bitfarms announced plans to convert a substantial portion of its Washington State mining facility into an AI and high-performance computing site designed to support Nvidia’s Vera Rubin systems. Several other miners have also diversified into AI since the last Bitcoin halving, putting their existing power and cooling infrastructure to new use.

Nvidia’s Vera Rubin platform, for all its efficiency gains, does not eliminate the scarcity of high-end AI hardware. It makes existing hardware more productive inside hyperscale data centers, where access to GPUs, high-bandwidth memory, and advanced networking is already tightly controlled. The underlying supply constraints, particularly for HBM, are expected to persist throughout 2026, keeping a bottleneck in place across the broader market.

For the crypto ecosystem, continued GPU scarcity leaves room for decentralized compute networks to fill gaps in the market, serving workloads that cannot secure long-term contracts or dedicated capacity inside large AI factories. Render, Akash, and Golem are not substitutes for hyperscale infrastructure; they are flexible alternatives for short-term jobs, intermittent bursts of demand, and broader access to compute during the AI boom, helping keep that compute within reach of a wide range of developers and businesses rather than only a few tech giants.