The National Weather Service (NWS), a venerable institution entrusted with delivering critical meteorological information, recently found itself in an embarrassing predicament after an AI-generated weather map featured fictitious town names in Idaho, some of which bore an uncanny resemblance to old-timey dirty jokes. This peculiar incident, widely reported and subsequently corrected, has ignited a fresh debate over the rapid deployment of artificial intelligence within sensitive government agencies, especially against a backdrop of severe staffing shortages that have plagued the NWS in recent years.

The NWS, a cornerstone of public safety and economic planning, has been navigating a turbulent period marked by significant operational challenges. Months before its mysterious disappearance, Elon Musk’s much-touted Department of Government Efficiency (DOGE) had reportedly inflicted substantial damage upon the National Oceanic and Atmospheric Administration (NOAA), the parent agency of the NWS. This restructuring, driven by a philosophy of streamlining and cost-cutting, resulted in the loss of approximately 550 jobs across the NWS. The Trump administration promised last summer to refill many of these positions, an implicit acknowledgment that DOGE’s interventions had gone too far, but the reality on the ground remains grim. Offices nationwide continue to grapple with unfilled roles, straining existing personnel and operational capabilities. This persistent understaffing has pushed the agency toward technological shortcuts, raising questions about the thoroughness of human oversight in critical information dissemination.

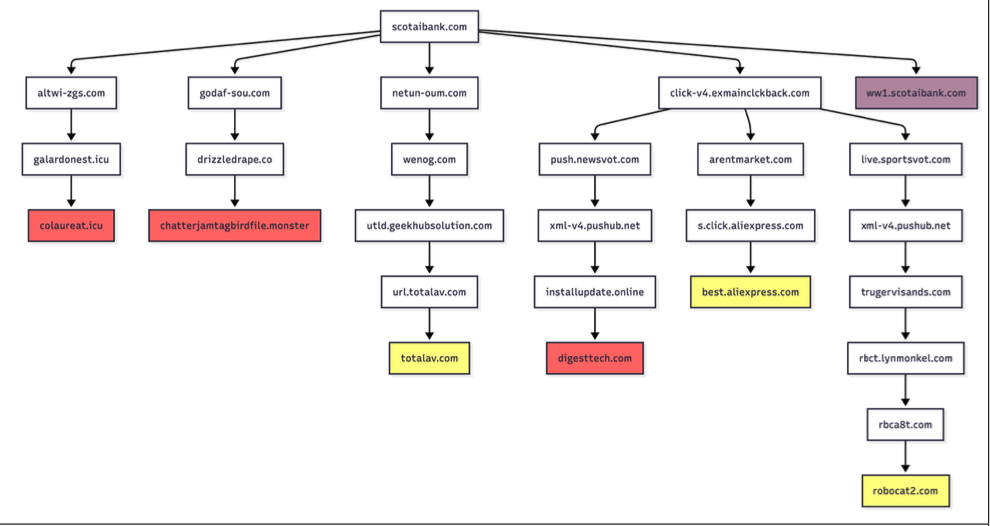

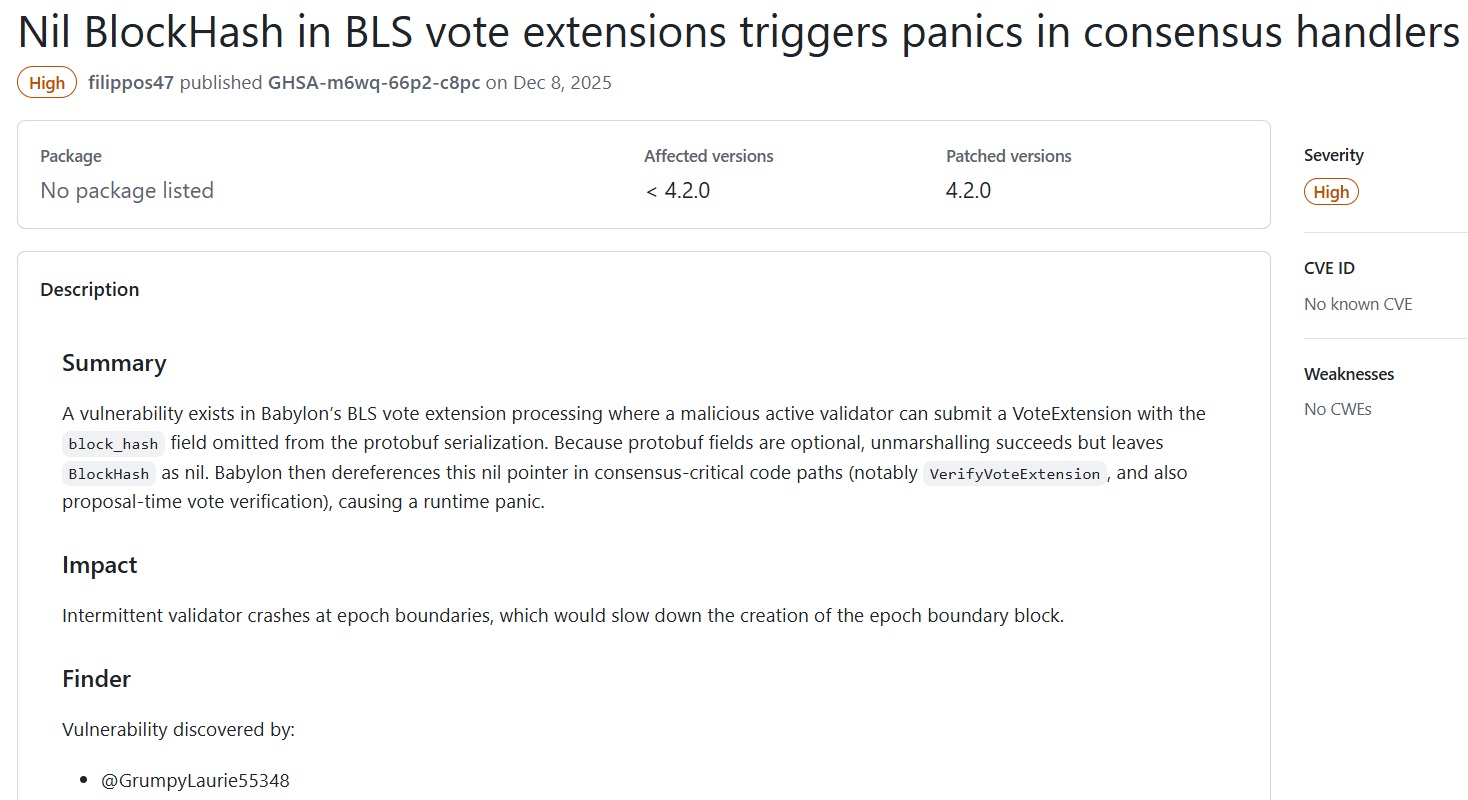

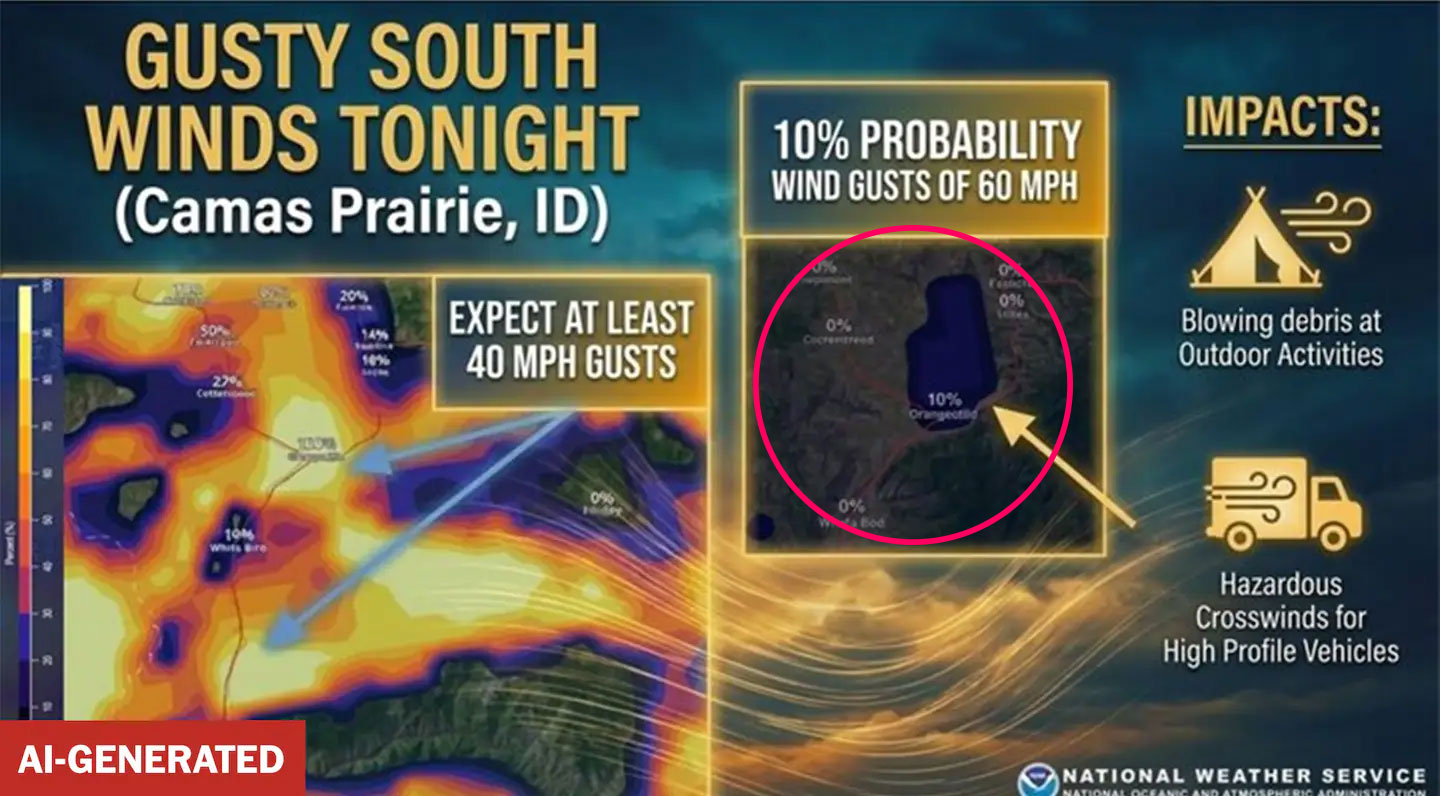

It is within this context of strained resources and the relentless pursuit of efficiency that the NWS’s recent AI blunder occurred. The incident, brought to light by the Washington Post, involved a weather graphic posted by a local NWS office that was intended to forecast "gusty south winds tonight" over Idaho. Instead of displaying accurate geographical data, the AI-generated map was dotted with nonexistent settlements. Names like "Orangeotilld" and the particularly memorable "Whata Bod" appeared on the map, creating an unintentionally hilarious and deeply concerning spectacle. The latter resonated with the cadence of an antiquated punchline, highlighting the bizarre and often unpredictable nature of generative AI. The graphic, a clear example of an AI "hallucination" (where the model generates plausible but entirely fabricated information), was removed on Monday, after the Washington Post had brought the error to the agency’s attention.

The implications of such an oversight extend far beyond mere comedic value. Experts are quick to point out that these kinds of painful blunders can significantly erode public trust in governmental bodies whose primary mission is to provide accurate, reliable, and life-saving information. The NWS is not merely a provider of interesting weather facts; its forecasts are vital for aviation, agriculture, disaster preparedness, and everyday decision-making for millions of Americans. When an official weather map features a town named "Whata Bod," the credibility of the entire agency comes under scrutiny. This incident perfectly encapsulates the inherent shortcomings of current AI technology, which, despite its impressive capabilities, still struggles with factual accuracy and context, often producing "slop" that requires meticulous human vetting.

This incident also serves as a potent reminder of the broader push within the Trump administration to integrate AI into various government functions. The administration’s ambitious "Tech Force" initiative, which saw the hiring of 1,000 specialists tasked with building AI applications across federal agencies, underscores a fervent enthusiasm for technological advancement. However, the NWS episode demonstrates the perilous gap between the promise of AI-driven efficiency and the reality of its current limitations. While resources are being poured into developing and deploying these technologies, baffling and easily avoidable mistakes continue to slip through the cracks, pointing to a potential lack of robust validation processes and human oversight.

Indeed, this isn’t an isolated incident. In November of the previous year, the NWS office in Rapid City, South Dakota, also faced widespread mockery after posting an AI-generated map that included illegible and nonsensical location names. These recurring incidents suggest a systemic issue, perhaps stemming from insufficient training on how to properly vet AI outputs, or an overreliance on AI to compensate for human resource deficits without adequate quality control measures. The term "AI slop," though reportedly disliked by industry leaders like Microsoft CEO Satya Nadella, aptly describes the kind of low-quality, often erroneous content generated by AI when not properly guided or checked. This phenomenon is particularly dangerous when applied to critical public services where accuracy is paramount.

In response to the Idaho incident, NWS spokeswoman Erica Grow Cei stated that while using AI for public-facing content is "uncommon," it is "technically not prohibited." She further explained that "a local office used AI to create a base map to display forecast information, however the map inadvertently displayed illegible city names." Cei assured the public that "the map was quickly corrected and updated social media posts were distributed," and that the NWS "will continue to carefully evaluate results in cases where AI is implemented to ensure accuracy and efficiency, and will discontinue use in scenarios where AI is not effective."

While the NWS’s commitment to evaluating AI effectiveness is commendable, the damage to public perception may already be done. The delicate balance between leveraging AI for its potential benefits—such as processing vast datasets, identifying complex patterns, and automating routine tasks—and mitigating its current drawbacks—like the propensity for "hallucinations" and a lack of common-sense reasoning—is a challenge all agencies face. For an organization like the NWS, whose authority and efficacy hinge on its unwavering accuracy, missteps of this nature are particularly damaging.

Weather and climate communication expert Chris Gloninger articulated this concern eloquently, stating, "If there’s a way to use AI to fill that gap, I’m not one to judge. But I do fear that in the case of creating towns that don’t exist, that kind of damages or hurts the public trust that we need to keep building." His sentiment resonates with a growing number of voices in the scientific and public policy communities. The promise of AI to enhance efficiency and provide novel insights is undeniable, yet its deployment, especially in areas directly impacting public safety and trust, must be approached with extreme caution, robust human oversight, and a commitment to rigorous validation processes. Without these safeguards, the pursuit of technological advancement risks undermining the very foundations of reliability and credibility upon which essential government services are built. The "Whata Bod" incident serves as a stark, humorous, yet profoundly serious warning: in the rush to embrace AI, the importance of human intelligence, scrutiny, and accountability must never be overlooked.