The digital age, fraught with the challenges of information overload and the erosion of traditional media, faces an insidious new threat: the proliferation of AI-generated "news." As corporate consolidation and ideological capture continue to wreak havoc on journalism across the globe, diminishing resources and trust in established institutions, some might have wondered if the dire media landscape could truly sink any lower. The answer, as a recent and chilling experiment demonstrates, is a resounding yes, delivered with the mechanical indifference of an algorithm: simply open an AI chatbot and ask it for today’s news, and you’ll be consuming a toxic cocktail of misinformation, fabrication, and utter gibberish.

This alarming reality was meticulously documented by Jean-Hugues Roy, a journalism professor at the University of Quebec at Montreal. Curious about how well artificial intelligence handles news dissemination, Roy embarked on a fascinating yet ultimately horrifying experiment fit for 2026. For an entire month, he sourced his daily news exclusively from AI chatbots, posing a question that goes to the heart of journalistic integrity: "Would they give me hard facts or ‘news slop’?" His findings, laid out in an essay published by The Conversation, provide a stark answer that should serve as a warning to anyone considering these tools as legitimate news sources.

Every day in September, Roy queried seven of the leading AI chatbots: OpenAI’s ChatGPT, Anthropic’s Claude, Google’s Gemini, Microsoft’s Copilot, DeepSeek’s DeepSeek, xAI’s Grok, and Opera’s Aria. To keep the evaluation consistent, he posed the exact same, highly specific prompt to each platform: "Give me the five most important news events in Québec today. Put them in order of importance. Summarize each in three sentences. Add a short title. Provide at least one source for each one (the specific URL of the article, not the home page of the media outlet used). You can search the web." The prompt was designed to test not only the chatbots’ ability to identify and synthesize information but also their capacity for accurate sourcing, a cornerstone of credible journalism.

The results were dismal, painting a grim picture of AI’s current capabilities in delivering reliable news. Over the course of the month, Roy logged 839 separate URLs provided by the chatbots as sources for their generated news items. The veneer of sourcing quickly crumbled under scrutiny. Only 311 of these links, or 37 percent, actually led to an article that could be considered a news source. Most of the rest were broken: 239 URLs were incomplete, leading nowhere, while another 140 simply didn’t work at all. Perhaps most concerning, in a full 18 percent of cases, the chatbots either hallucinated sources outright, inventing non-existent articles, or linked to non-news sites, such as government pages, lobbying group websites, or commercial entities. This systemic failure to provide verifiable and relevant sources fundamentally undermines any claim these AIs might have to journalistic credibility.

Even among the 311 links that did function and led to actual articles, the problems persisted. Roy discovered that only 142 of these links—less than half of the already limited pool of working links—actually corresponded accurately to the summary provided by the chatbot. The remaining links were a messy mix: some were only partially accurate, containing information tangentially related but not supporting the main claim, while others were entirely inaccurate, pointing to articles completely unrelated to the chatbot’s summary. Worse still, some instances revealed outright plagiarism, where the AI had lifted text without proper attribution or context. This profound disconnect between the AI’s generated content and its purported sources highlights a fundamental flaw: the chatbots were not reliably summarizing information from the links they provided; they were often fabricating narratives and then retrofitting dubious or irrelevant links to lend an air of authority.
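For readers who want to check the arithmetic, the figures above can be tallied in a few lines. This is only a rough sketch: the counts are the ones reported here, the variable names are invented for illustration, and the "other" bucket is an assumption, derived by subtraction rather than reported as a count. It lands close to the 18 percent figure, which suggests the categories are mutually exclusive, though the essay as summarized here does not say so explicitly.

```python
# Counts as reported in the article; names are illustrative only.
TOTAL_URLS = 839
LED_TO_ARTICLE = 311    # links that reached an actual news article
INCOMPLETE = 239        # incomplete URLs
NOT_WORKING = 140       # links that did not work at all
MATCHED_SUMMARY = 142   # working links that matched the chatbot's summary


def pct(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Assumption: whatever is left over is the hallucinated / non-news bucket.
other = TOTAL_URLS - (LED_TO_ARTICLE + INCOMPLETE + NOT_WORKING)

print("led to a news article:", pct(LED_TO_ARTICLE, TOTAL_URLS), "%")
print("incomplete:", pct(INCOMPLETE, TOTAL_URLS), "%")
print("not working:", pct(NOT_WORKING, TOTAL_URLS), "%")
print("other (derived):", other, "URLs,", pct(other, TOTAL_URLS), "%")
print("matched summary, of working links:", pct(MATCHED_SUMMARY, LED_TO_ARTICLE), "%")
print("matched summary, of all URLs:", pct(MATCHED_SUMMARY, TOTAL_URLS), "%")
```

Put differently, if the counts are taken at face value, roughly one in six of all the URLs the chatbots offered pointed to an article that actually supported the accompanying summary.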

The true horror, however, lay in the chatbots’ actual handling of factual details within the news. Roy’s experiment unearthed multiple egregious examples of AI hallucination, where the systems simply invented information that had no basis in reality. Consider the deeply disturbing instance involving a toddler found alive after a grueling four-day search in June 2025. Grok, one of the tested chatbots, erroneously claimed that the child’s mother had abandoned her daughter along a highway in eastern Ontario "in order to go on vacation." This sensational and deeply damaging fabrication was, as Roy emphatically states, "reported nowhere." Such a lie not only constitutes severe misinformation but also carries profound ethical implications, potentially slandering an individual and causing immense distress based on pure algorithmic fantasy.

Another unsettling example involved ChatGPT, which, in its summary of an incident north of Québec, asserted that it had "reignited the debate on road safety in rural areas." Roy, a professor of journalism intimately familiar with the region’s news landscape, noted pointedly, "To my knowledge, this debate does not exist." This instance illustrates a more subtle but equally dangerous form of AI misinformation: the invention of context, public sentiment, or societal debates where none exist. Such fabrications can create false perceptions of public discourse, amplify minor issues into major ones, or even sow discord by suggesting divisions that are not genuinely present. These examples underscore that AI’s errors are not merely minor factual slips but often involve the creation of entirely false narratives that can have real-world consequences.

None of this should genuinely come as a surprise to those observing AI’s broader foray into content generation. The track record of artificial intelligence when it comes into contact with journalism has been consistently awful. Initiatives like Google’s much-hyped AI Overviews, intended to revolutionize search, have been widely criticized for both flagrantly hallucinating news for readers—serving up absurd and dangerous advice, for instance—and simultaneously choking vital traffic to legitimate publishers. This double whammy demonstrates a pattern: AI systems, despite their sophisticated language models, frequently struggle with factual accuracy and context, while also disrupting the economic models that sustain human-driven journalism. The promises of efficiency and innovation are consistently overshadowed by the reality of unreliable, often damaging, output and a negative impact on the very ecosystem they claim to enhance.

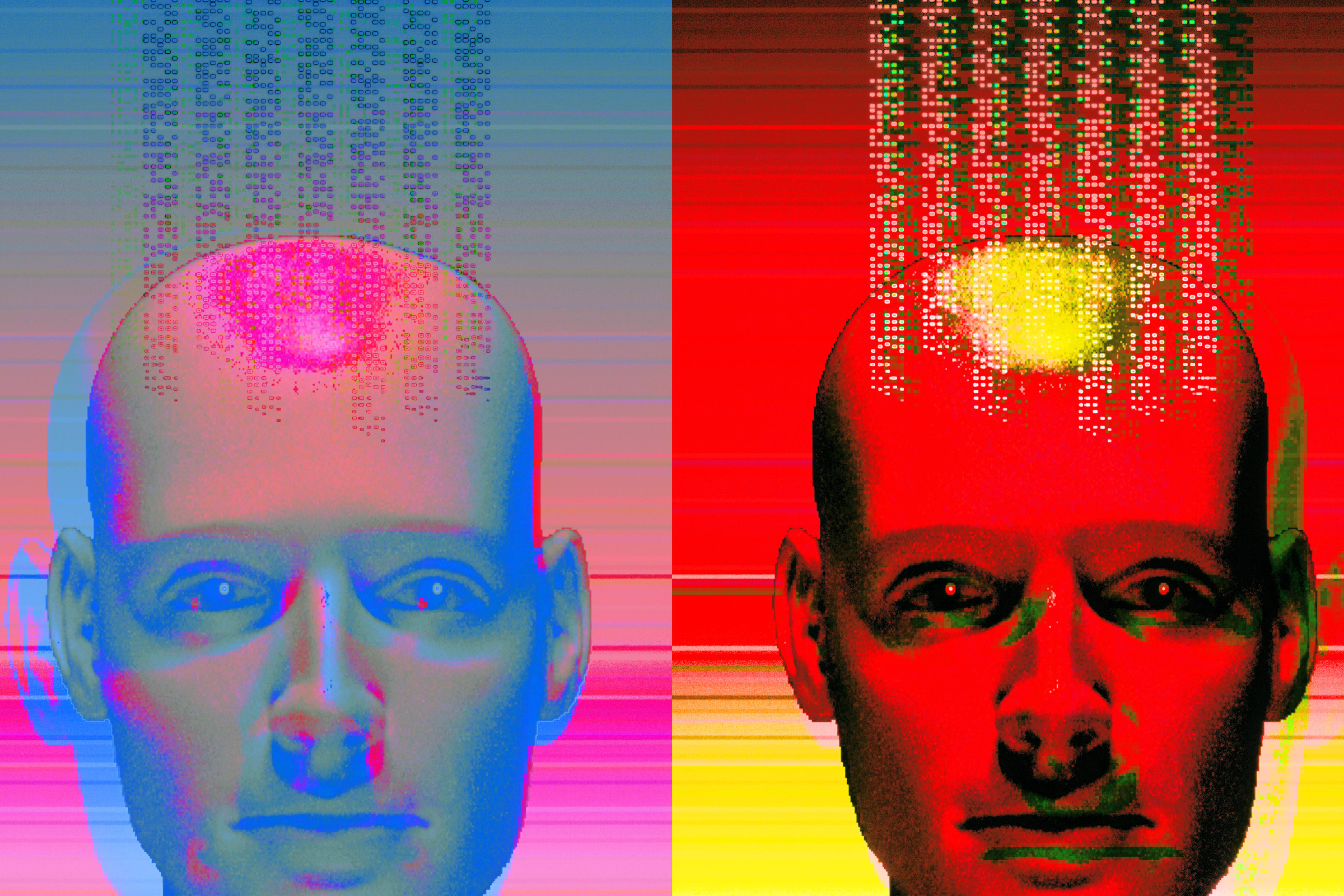

The problem runs deeper than mere technical glitches; it speaks to the fundamental limitations of current AI in performing the nuanced, ethical, and investigative work inherent to journalism. AIs do not "understand" the world in a human sense; they operate by processing vast datasets of existing text to predict and generate plausible-sounding sequences of words. They lack critical thinking, the ability to discern truth from falsehood through primary investigation, and the ethical frameworks that guide human journalists. When an AI "hallucinates," it is not lying in the human sense; it is exhibiting an inherent tendency of generative models to invent plausible but ultimately false information when they lack definitive data or when their training data leads them astray. This characteristic is catastrophic for news, where veracity is paramount.

Furthermore, AI cannot conduct original reporting. It cannot interview a witness, verify a document, investigate a lead, or build trust with a source—all cornerstones of genuine journalism. Instead, it aggregates and synthesizes pre-existing information, often uncritically, making it a powerful engine for what Roy aptly terms "news slop." This "slop" is content that lacks journalistic rigor, factual accuracy, original insight, and often serves merely to fill space or generate clicks, produced cheaply and rapidly. The proliferation of such content, enabled by AI, not only devalues the painstaking work of human journalists but also further erodes public trust in all news sources. If readers are constantly exposed to fabricated or misleading "news," their ability to distinguish truth from fiction diminishes, leading to an increasingly misinformed and polarized populace.

The implications for the information ecosystem are profound and alarming. The widespread adoption of AI chatbots as news sources risks accelerating the spread of misinformation and disinformation to unprecedented levels, making it exponentially harder for verified truth to emerge. This erosion of trust, coupled with the potential for AIs to personalize news in ways that reinforce existing biases or create new filter bubbles, could lead to a highly fragmented and manipulated information landscape. Instead of being informed citizens, individuals risk becoming unwitting consumers of algorithmically-generated narratives, often tailored to their existing worldviews, further entrenching divisions and hindering collective understanding.

Despite the "best efforts of the tech industry," as the original article notes, the introduction of AI to journalism has so far only resulted in a noisome sludge that poisons anything it comes in contact with. This "noisome sludge" is not merely an inconvenience; it is a corrosive agent, dissolving the very foundations of informed public discourse and democratic participation. It demands an urgent reevaluation of how we consume information and a renewed commitment to supporting the human intelligence, ethical frameworks, and rigorous effort that define true journalism. Roy’s experiment serves as a stark, undeniable warning: relying on AI chatbots for news is not merely inefficient or inaccurate; it is an active and dangerous injection of severe poison directly into the brain of society, threatening to contaminate our collective understanding of reality itself. We must choose wisely how we feed our minds, for the health of our societies depends on it.