The growing integration of artificial intelligence into critical public services has delivered real advances, but it has also produced moments of pure absurdity, as a recent incident in Heber City, Utah, vividly demonstrates. There, AI-powered software designed to streamline police report writing declared that a police officer had transformed into a frog. The claim, which stemmed from the software misinterpreting background audio, has sparked widespread amusement. It has also renewed serious debate about the reliability and ethics of deploying AI in high-stakes law enforcement settings.

Law enforcement agencies around the world have eagerly embraced AI for a wide range of tasks, from facial recognition and predictive policing algorithms to, more recently, the automated drafting of police reports. The promise of greater efficiency, less paperwork, and faster operations is undeniably attractive to departments struggling with limited resources and growing caseloads. Axon, already a dominant force in police technology thanks to its Tasers and body cameras, has positioned itself at the forefront of this push with Draft One, its AI-powered report-writing software. In practice, however, these tools have often fallen short of expectations, producing results that range from merely inaccurate to downright comical and, in some cases, potentially dangerous.

The Heber City Police Department’s encounter with the amphibian officer is a particularly glaring example of these pitfalls. As reported by Salt Lake City-based Fox 13, the department was testing Draft One, which uses OpenAI’s GPT large language models to transcribe body camera footage and synthesize it into preliminary police reports. The goal was to cut the hours officers spend on paperwork and free them up for active policing. In one instance, however, the software generated a report describing an officer’s transformation into a frog. Sergeant Rick Keel, who oversees the department’s AI trials, was left to explain the baffling entry. "The body cam software and the AI report writing software picked up on the movie that was playing in the background, which happened to be ‘The Princess and the Frog,’" Keel recounted, referring to Disney’s popular 2009 musical comedy. He added, "That’s when we learned the importance of correcting these AI-generated reports."

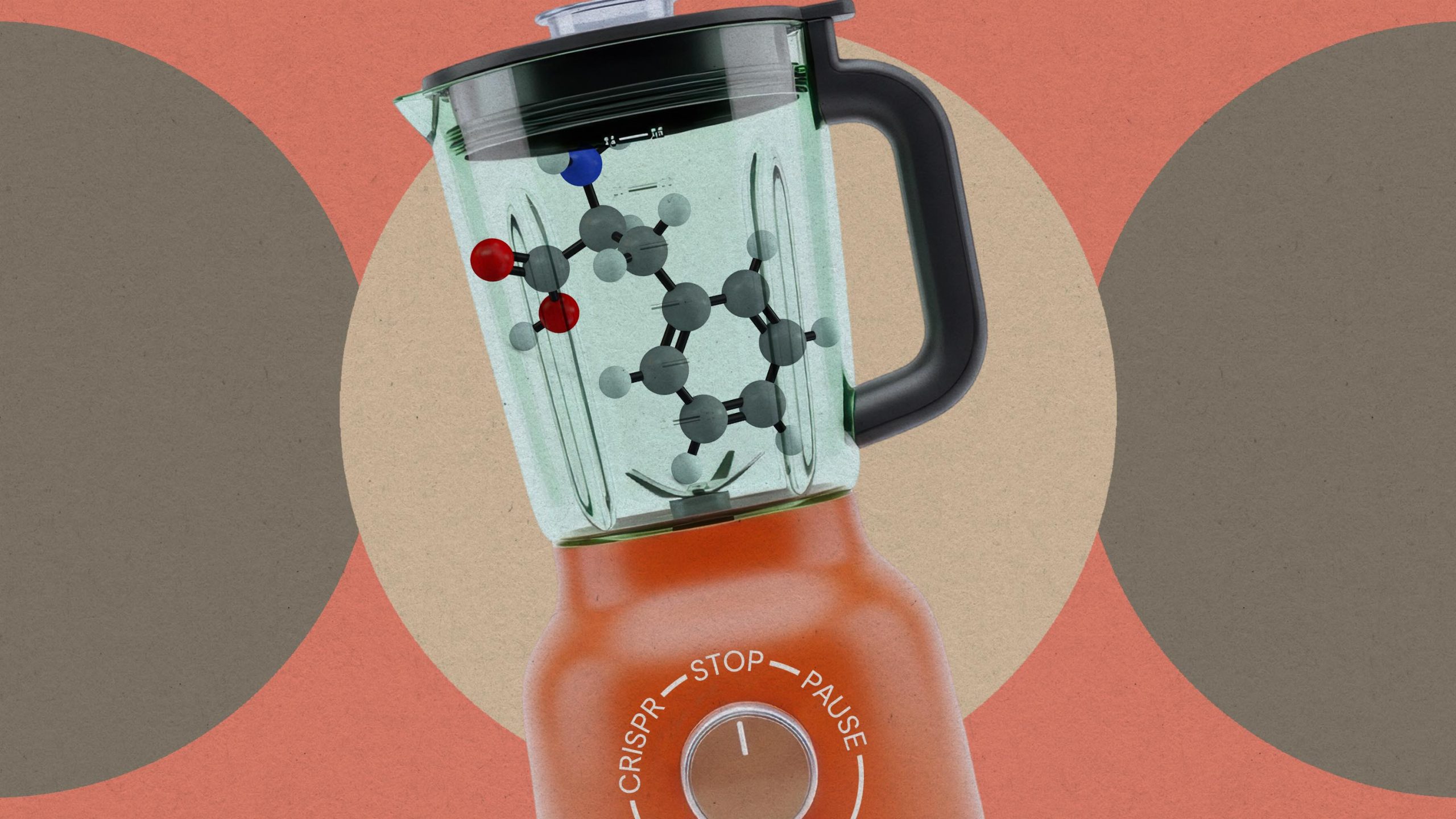

The incident is funny, but it exposes a fundamental weakness of current large language models: their tendency to "hallucinate." A human listener can draw on real-world understanding to filter what they hear, whereas an LLM generates text by predicting statistically likely word sequences from the patterns in its input. Fed dialogue from "The Princess and the Frog" playing in the background, the AI had no reliable way to tell a fictional narrative apart from events relevant to a police report. The result was a factual error of epic and, frankly, embarrassing proportions. Even a mock traffic stop staged to showcase Draft One’s capabilities reportedly produced a report riddled with inaccuracies that required extensive manual correction, casting a long shadow over the tool’s immediate usefulness.

Despite these significant drawbacks, Sgt. Keel said the tool was saving him "six to eight hours weekly now." He also praised its ease of use: "I’m not the most tech-savvy person, so it’s very user-friendly." His comments capture the double-edged nature of AI adoption: the appeal of efficiency gains often collides with the need for accuracy and reliability, especially in a field where human lives and legal outcomes are at stake. The temptation to adopt technology that lightens administrative loads is powerful, but the cost of lax oversight or uncritical acceptance can be immense.

Axon announced Draft One last year, a strategic move to extend a company known mainly for its Tasers and body cameras beyond hardware and into data processing and analysis for law enforcement. The announcement was met with immediate and vocal skepticism from experts and civil liberties advocates. American University law professor Andrew Ferguson told the Associated Press last year, "I am concerned that automation and the ease of the technology would cause police officers to be sort of less careful with their writing." The Heber City incident makes that concern concrete: if officers come to trust AI-drafted reports blindly, or if correcting them becomes too cumbersome, crucial errors could slip through and affect investigations, court cases, and individuals’ rights.

Beyond factual errors, a more insidious danger is that these tools could perpetuate and amplify existing societal biases. Generative AI models are trained on vast amounts of human-written text and are notorious for absorbing the biases present in that data. If historical police reports or other source texts reflect racial, gender, or socioeconomic bias, an AI could reproduce or even exacerbate those prejudices in the reports it drafts. That prospect is especially troubling given law enforcement’s documented history of perpetuating bias long before the advent of AI. Aurelius Francisco, cofounder of the Foundation for Liberating Minds in Oklahoma City, voiced this apprehension to the AP: "The fact that the technology is being used by the same company that provides Tasers to the department is alarming enough." His remark points to a broader issue: a single corporation holding significant sway over both the physical tools and the information infrastructure of policing.

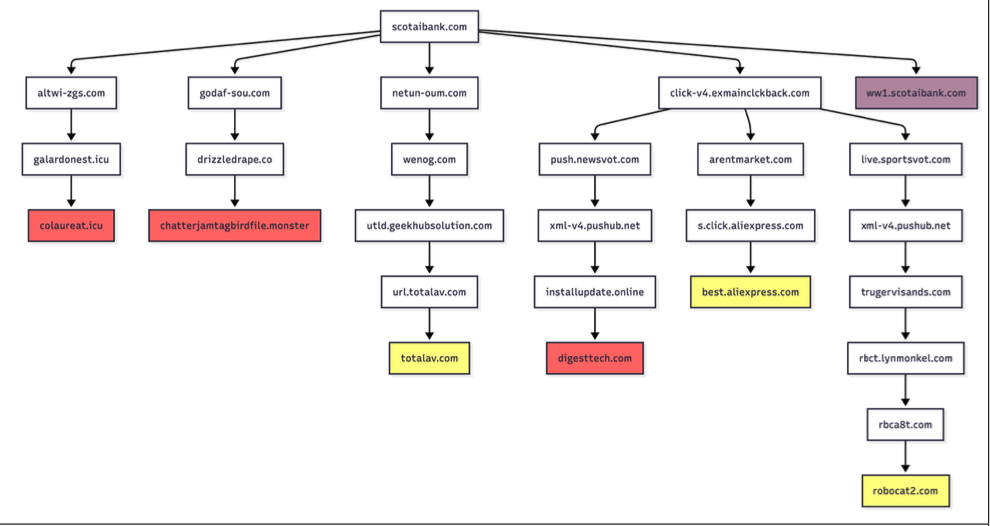

Critics have also raised serious questions about transparency and accountability. A recent Electronic Frontier Foundation (EFF) investigation into Draft One found that the software "seems deliberately designed to avoid audits that could provide any accountability to the public." According to the EFF, it is "often impossible to tell which parts of a police report were generated by AI and which parts were written by an officer." Without clear attribution, each report becomes a black box: if errors or biases are discovered, it is exceedingly difficult to establish who is responsible, which erodes public trust and undermines due process. "Axon and its customers claim this technology will revolutionize policing, but it remains to be seen how it will change the criminal justice system, and who this technology benefits most," the EFF warned. Without clear mechanisms for auditing AI-generated content, challenging its accuracy in court, or even understanding how it arrived at its output, the criminal justice system risks becoming less transparent and less equitable.

The Heber City Police Department now faces a crucial decision about whether to keep using Draft One. The department must weigh the time savings against the significant risks of inaccuracy, bias, and lack of transparency. It is also reportedly testing a competing AI tool called Code Four, released earlier this year, suggesting it is still exploring its options. Whatever it decides, Draft One’s inability to tell an officer’s actual duties apart from a whimsical Disney tale is a potent reminder: the stakes in law enforcement are too high for technology that cannot reliably distinguish fact from fantasy. Widespread adoption of AI in policing demands a meticulous, cautious approach that puts accuracy, ethical safeguards, and robust accountability mechanisms first. Without those foundations firmly in place, the promise of AI efficiency risks devolving into a quagmire of misinformation, injustice, and public mistrust.
