In 2026, this confrontation is poised to escalate in the courts. While some states might reconsider their legislative agendas in the face of federal opposition, others are expected to press forward with their regulatory initiatives, driven by intensifying public pressure to safeguard vulnerable populations, particularly children, from the potential harms of advanced chatbots and to address the escalating energy demands of AI-driven data centers. This divergence in state-level approaches will be amplified by the considerable financial influence of competing super PACs, bankrolled by technology magnates on one side and AI safety advocates on the other. These organizations are expected to pour tens of millions of dollars into congressional and state elections, seeking to elect lawmakers who align with their distinct visions for AI governance.

President Trump’s executive order empowers the Department of Justice to establish a task force charged with bringing legal challenges against states whose AI legislation is deemed to conflict with the administration’s preference for a light-touch regulatory framework. The order also authorizes the Department of Commerce to withhold federal broadband funding from states whose AI laws are deemed "onerous." According to James Grimmelmann, a law professor at Cornell Law School, the order is likely to be used to challenge a limited number of provisions, predominantly those concerning AI transparency and bias, which tend to be liberal policy priorities, rather than to attempt a broad-brush preemption of all state-level AI legislation.

Despite the presidential directive, many states are showing no signs of capitulation. On December 19, New York Governor Kathy Hochul signed the Responsible AI Safety and Education (RAISE) Act, a landmark law that requires AI companies to publicly disclose the safety protocols they use in developing AI models and to report significant safety incidents. On January 1, California’s own pioneering frontier AI safety law, SB 53, took effect. That law, which served as a model for the RAISE Act, is designed to mitigate catastrophic risks, including biological weapons development and sophisticated cyberattacks. Although both the RAISE Act and SB 53 were revised significantly to survive intense industry lobbying, they represent a hard-won, if delicate, compromise between the powerful tech sector and the burgeoning AI safety movement.

Should President Trump decide to challenge these carefully crafted laws, states like California and New York are expected to mount a robust legal defense, potentially joined by Republican-leaning states such as Florida that have vocal proponents of AI regulation. Margot Kaminski, a law professor at the University of Colorado Law School, suggests that the Trump administration may be overextending its executive authority, describing its attempts to preempt legislation via executive action as being on "thin ice." The legal precedent for such a broad federal intrusion into state regulatory authority is considered uncertain, potentially leaving the administration vulnerable in court.

Conversely, Republican states that are wary of drawing the President’s ire or that depend on federal broadband funding for their rural communities might shy away from enacting or enforcing stringent AI laws. This potential retreat, irrespective of the ultimate court rulings, could foster chaos and uncertainty, chilling further state-level legislative action. Paradoxically, the very Democratic states that the President seeks to rein in, which possess substantial financial resources and are galvanized by the opportunity to challenge the administration, may prove to be the most resilient in enacting and upholding AI safeguards.

In the absence of a federal legislative consensus, President Trump has pledged to collaborate with Congress to establish a national AI policy. However, the deeply polarized legislature makes the timely passage of such a bill highly improbable. In 2025, the Senate effectively blocked a moratorium on state AI laws that had been appended to a tax bill, and a subsequent attempt in the House of Representatives to include similar provisions in a defense bill was abandoned in November. The President’s assertive use of an executive order to pressure Congress may, in fact, diminish any lingering appetite for a bipartisan agreement on AI regulation.

Brad Carson, a former Democratic congressman from Oklahoma and a key figure in building a network of super PACs that support pro-regulation candidates, contends that the executive order has turned AI regulation into a more partisan issue. "It has hardened Democrats and created incredible fault lines among Republicans," Carson stated. That hardening makes constructive dialogue and common ground increasingly difficult to reach.

Within the Trump administration’s sphere of influence, a spectrum of views on AI regulation exists. While proponents of rapid AI advancement, such as AI and crypto czar David Sacks, advocate for deregulation, populist figures like Steve Bannon express concerns about the existential risks posed by superintelligence and the potential for widespread job displacement. In a notable instance of bipartisan dissent, Republican state attorneys general joined forces with their Democratic counterparts to pen a letter urging the Federal Communications Commission (FCC) not to override existing state AI laws, underscoring a shared apprehension about unchecked federal preemption.

As public anxiety regarding the potential negative impacts of AI on mental health, employment, and the environment continues to grow, so too does the demand for governmental regulation. Surveys indicate a significant and increasing portion of the American populace supports government oversight of AI technologies. Should Congress remain gridlocked, states will likely emerge as the primary arbiters of AI industry standards. The National Conference of State Legislatures reported that in 2025 alone, state legislators introduced over 1,000 AI-related bills, with nearly 40 states enacting more than 100 such laws, demonstrating a robust and active state-level legislative response.

Child safety has become a particularly potent catalyst for legislative action on AI, potentially fostering rare bipartisan consensus. On January 7, Google and Character Technologies, the developer behind the companion chatbot Character.AI, reached a settlement in several lawsuits brought by families of teenagers who died by suicide following interactions with its chatbots. Just one day later, the Kentucky attorney general filed a lawsuit against Character Technologies, alleging that its chatbots contributed to child suicides and other self-harming behaviors. OpenAI and Meta are also facing a considerable number of similar legal challenges, with further litigation expected. Without clear AI-specific laws, it is profoundly uncertain how existing product liability statutes and free speech doctrines apply to these novel dangers. As Grimmelmann acknowledges, "it’s an open question what the courts will do."

Amidst the ongoing legal battles, states are expected to prioritize the passage of child safety legislation, which may be exempt from the scope of President Trump’s proposed ban on state AI laws. On January 9, OpenAI announced a collaboration with Common Sense Media, a prominent child-safety advocacy group, to support a ballot initiative in California. This initiative, dubbed the Parents & Kids Safe AI Act, aims to establish critical guardrails for chatbot interactions with children. Proposed measures include mandatory age verification for AI users, the implementation of parental controls, and the requirement for independent child-safety audits. If successful, this initiative could serve as a template for other states seeking to implement stricter regulations on chatbot behavior.

Furthermore, a growing public backlash against the immense energy and water consumption of AI data centers is prompting states to consider regulations governing the resources essential for AI operations. This could manifest in legislation requiring data centers to disclose their power and water usage and to bear the full cost of their electricity consumption. In the event of widespread job displacement due to AI automation, labor organizations may advocate for AI bans within specific professional sectors. A limited number of states, acutely concerned about the potentially catastrophic risks associated with advanced AI, may pursue safety legislation that mirrors the frameworks established by SB 53 and the RAISE Act.

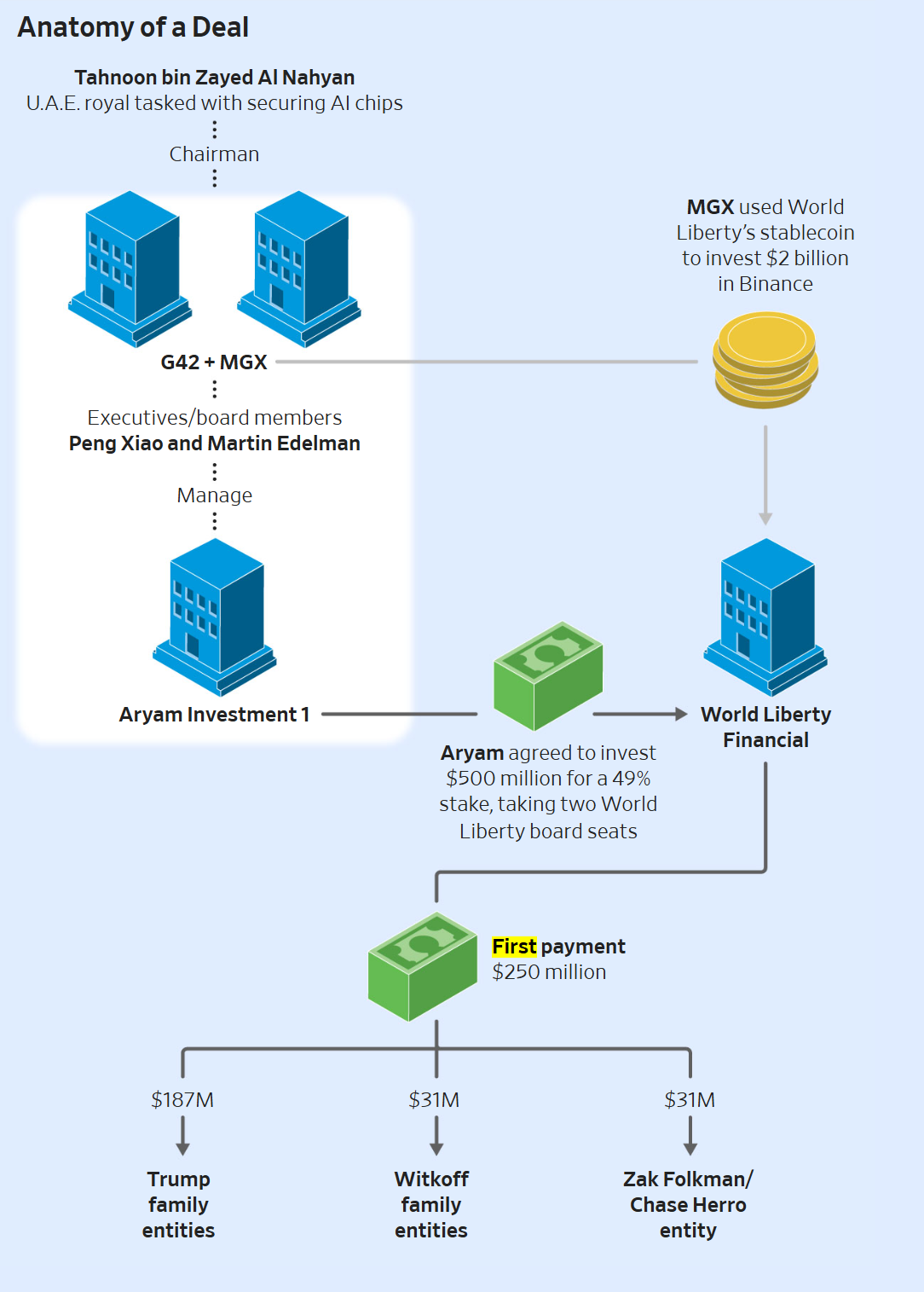

Concurrently, the tech industry will continue to leverage its considerable financial influence in an effort to thwart regulatory measures. Leading the Future, a super PAC backed by prominent figures like OpenAI president Greg Brockman and the venture capital firm Andreessen Horowitz, is actively working to elect candidates who champion unrestricted AI development at both the federal and state levels, an approach that parallels the crypto industry’s successful strategies for influencing policy. In opposition, super PACs funded by Public First, an organization led by Carson and former Republican congressman Chris Stewart, will support candidates advocating for robust AI regulation. The political landscape may even see the emergence of candidates campaigning on explicitly anti-AI populist platforms, reflecting a segment of the electorate deeply concerned about the technology’s societal implications.

The year 2026 promises to be a period of slow, deliberate, and often contentious democratic deliberation as the United States grapples with the profound implications of artificial intelligence. The regulatory frameworks that are ultimately established in state capitals across the nation will play a pivotal role in shaping the trajectory of the most disruptive technology of our generation, with consequences that extend far beyond America’s borders and will resonate for years to come.