For decades, astrophysicists have aimed to build Milky Way simulations detailed enough to follow the trajectory and evolution of every single star. Such models would let researchers test theories of galactic evolution, structure, and star formation directly against observational data. Simulating a galaxy like the Milky Way accurately is a formidable challenge, however. It requires calculating gravitational forces, the fluid dynamics of interstellar gas, the formation of chemical elements, and supernova explosions, all across vast scales of time and space. These combined demands have made star-by-star simulations prohibitively expensive.

Until now, scientists have been unable to model a galaxy as large as the Milky Way while keeping the fine detail needed to resolve individual stars. Current state-of-the-art simulations can represent systems with a total mass of about one billion suns, far short of the more than 100 billion stars in the Milky Way. As a result, the smallest unit, or "particle," in these models usually represents a group of roughly 100 stars. This averaging hides the behavior of individual stars and limits how faithfully small-scale processes can be modeled. A primary cause of this limitation is the interval between computational time steps: to capture rapid events, such as the evolution of a supernova explosion, a simulation must advance in extremely small increments of time.

Shrinking the time step sharply increases the computational cost. Even with the best physics-based models available today, simulating the Milky Way star by star would take approximately 315 hours of computation for every 1 million years of simulated galactic evolution. At that rate, 1 billion years of galactic activity would take roughly 36 years of real-world time (1,000 × 315 hours = 315,000 hours). Simply adding more supercomputer cores is not a practical or scalable fix: as the core count grows, energy consumption climbs and computational efficiency falls.

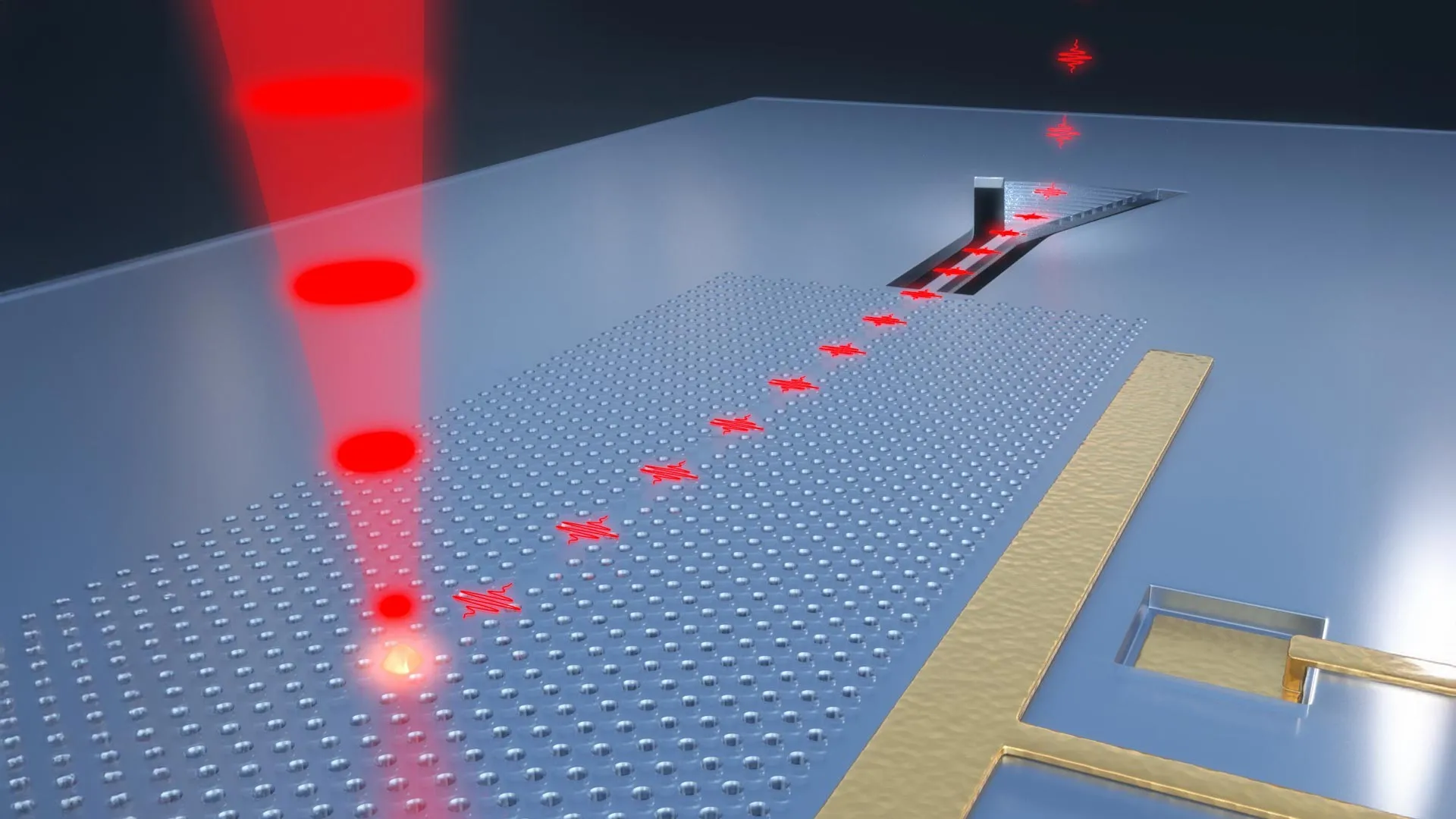

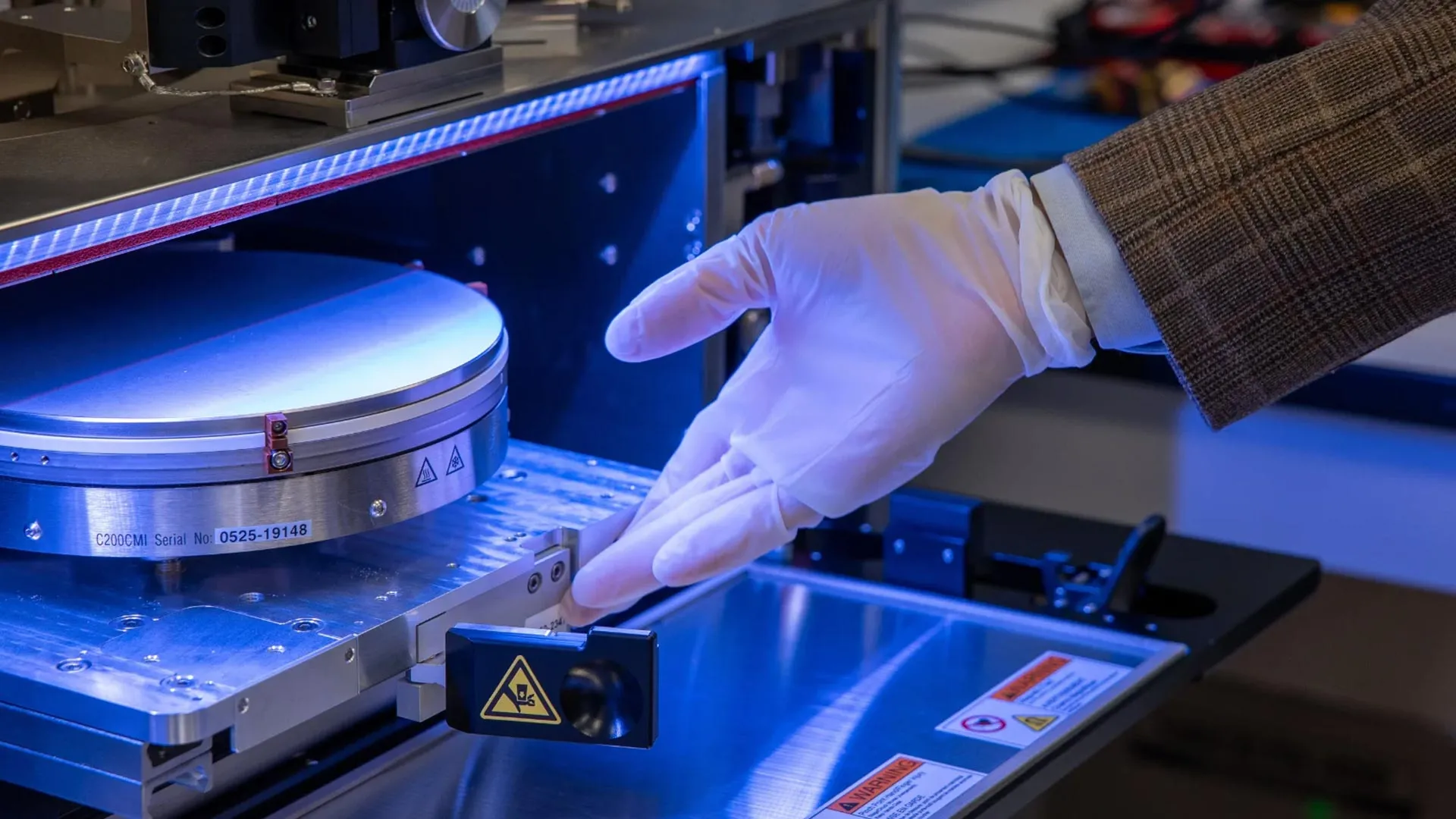

To overcome these barriers, Hirashima and his team devised a strategy that combines a deep learning surrogate model with conventional physical simulation. The surrogate was trained on high-resolution supernova simulations and learned to predict how gas behaves in the 100,000 years following a supernova, without imposing any additional computational load on the main simulation. This AI component let the researchers capture the overall behavior of the galaxy while still modeling small-scale events, including the detailed physics of individual supernovae. The team validated the approach by comparing its outputs against large-scale simulations run on RIKEN’s Fugaku supercomputer and The University of Tokyo’s Miyabi Supercomputer System.
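The hand-off between a main simulation and a surrogate can be illustrated with a deliberately simplified Python sketch. This is not the team's code: the `Region` class, the `toy_surrogate` function, the 2,000-year global step, and the cooling factor are all hypothetical stand-ins chosen for illustration. Only the 100,000-year prediction window comes from the description above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

SURROGATE_HORIZON_YR = 100_000  # prediction window described in the article
GLOBAL_STEP_YR = 2_000          # hypothetical fixed global time step


@dataclass
class Region:
    """Toy gas region; thermal_energy is in arbitrary units."""
    thermal_energy: float
    return_time_yr: Optional[int] = None      # when a surrogate result is due back
    predicted_energy: Optional[float] = None  # surrogate output awaiting hand-back


def toy_surrogate(energy: float) -> float:
    """Placeholder for a trained network (made-up mapping, illustration only)."""
    return 0.5 * energy + 10.0


def run(regions: List[Region],
        supernovae: Dict[int, int],
        end_yr: int,
        surrogate: Callable[[float], float] = toy_surrogate) -> int:
    """Advance all regions with one fixed step and return the number of steps.

    `supernovae` maps a step-aligned time in years to the index of the
    region that explodes at that time.
    """
    t = 0
    steps = 0
    while t < end_yr:
        # A supernova region is handed to the surrogate instead of forcing
        # the whole simulation onto a much smaller time step.
        idx = supernovae.get(t)
        if idx is not None:
            region = regions[idx]
            region.predicted_energy = surrogate(region.thermal_energy)
            region.return_time_yr = t + SURROGATE_HORIZON_YR

        t += GLOBAL_STEP_YR
        steps += 1

        for region in regions:
            if region.return_time_yr is None:
                # Stand-in for the ordinary physics update (slow cooling).
                region.thermal_energy *= 0.999
            elif t >= region.return_time_yr:
                # The surrogate's prediction replaces the region's state.
                region.thermal_energy = region.predicted_energy
                region.return_time_yr = None
                region.predicted_energy = None
    return steps


if __name__ == "__main__":
    demo = [Region(100.0), Region(100.0)]
    n_steps = run(demo, {0: 0}, 200_000)
    print(n_steps, [round(r.thermal_energy, 3) for r in demo])
```

The point of the sketch is the control flow: the global step never shrinks when a supernova occurs, because the affected region's state is supplied by a prediction rather than integrated directly.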

The method provides true individual-star resolution for galaxies containing more than 100 billion stars, and it does so far faster than before. Simulating 1 million years of galactic evolution now takes 2.78 hours, so a full 1 billion years can be simulated in approximately 115 days rather than the previously estimated 36 years.
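The wall-clock figures quoted in this article can be checked with a few lines of Python. The sketch below uses only the two per-million-year costs given in the text (315 hours and 2.78 hours); the function name and the 365.25-day year are choices made here for illustration.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365.25  # assumption: average calendar year


def wall_clock_hours(hours_per_myr: float, simulated_myr: float) -> float:
    """Total compute time in hours for a given span of simulated time."""
    return hours_per_myr * simulated_myr


SIMULATED_MYR = 1_000  # 1 billion years = 1,000 million years

conventional_hours = wall_clock_hours(315.0, SIMULATED_MYR)
hybrid_hours = wall_clock_hours(2.78, SIMULATED_MYR)

conventional_years = conventional_hours / HOURS_PER_DAY / DAYS_PER_YEAR
hybrid_days = hybrid_hours / HOURS_PER_DAY
speedup = conventional_hours / hybrid_hours

print(f"conventional: {conventional_years:.1f} years")
print(f"hybrid:       {hybrid_days:.1f} days")
print(f"speedup:      {speedup:.0f}x")
```

Under these assumptions the conventional estimate comes to just under 36 years, the hybrid run to a little under 116 days, and the ratio of the two to a speedup of roughly 113 times.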

The implications of this hybrid AI approach extend beyond galactic simulations. It could reshape fields of computational science that must link small-scale physics with large-scale system behavior. Meteorology, oceanography, and climate modeling face similar multi-scale problems and stand to benefit from tools that accelerate their simulations.

"I firmly believe that the integration of artificial intelligence with high-performance computing represents a fundamental paradigm shift in how we approach and solve multi-scale, multi-physics problems across the entire spectrum of computational sciences," states Hirashima. "This achievement not only demonstrates the power of AI-accelerated simulations but also signifies their evolution beyond mere pattern recognition. They are now becoming genuine instruments for scientific discovery, empowering us to unravel complex processes, such as tracing the very origins of the elements essential for life itself within our own galaxy." The successful application of this AI-driven simulation technique to model the Milky Way opens exciting avenues for understanding galactic evolution and also offers a powerful new toolkit for tackling some of the most pressing scientific challenges facing humanity, including predicting and mitigating climate change.