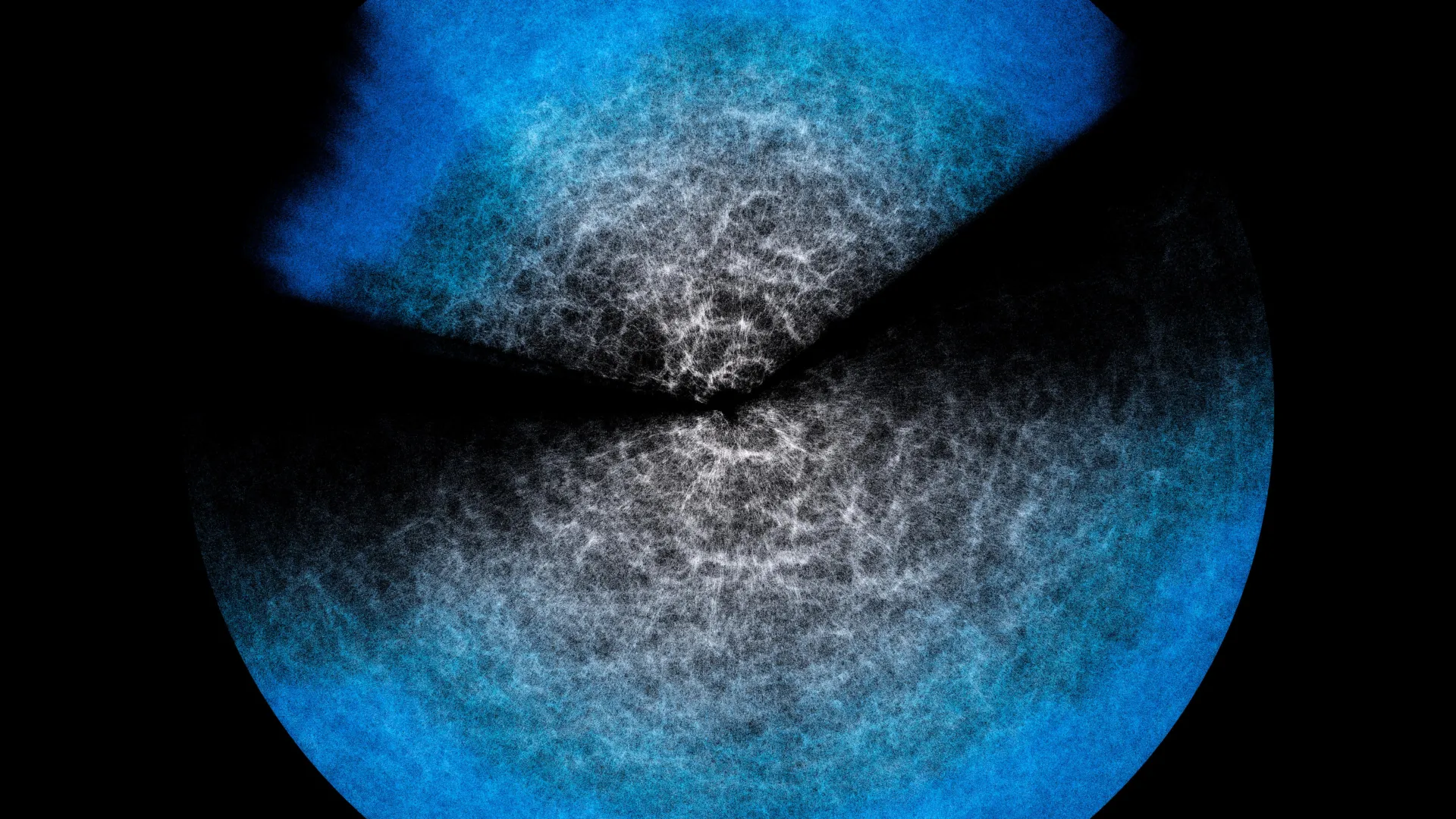

Building a Milky Way simulation detailed enough to follow each individual star has long been a major goal for astrophysicists. Such a model would let researchers test theories of galactic evolution, structure, and star formation directly against observational data. Simulating a galaxy the size of the Milky Way accurately, however, is computationally formidable: it requires calculating gravity, fluid dynamics, the formation of chemical elements, and supernova explosions across enormous ranges of time and space.

Historically, no simulation has been able to model a galaxy this large while also resolving individual stars. State-of-the-art simulations typically represent systems with a total mass of about one billion suns, far short of the more than 100 billion stars in our galaxy. As a result, the smallest unit, or "particle," in these models usually stands for a group of roughly 100 stars. This averaging blurs the behavior of individual stars and limits how accurately small-scale processes can be simulated. A core part of the problem is the timestep: to capture fast events such as the evolution of a supernova, a simulation must advance in very small increments of time.
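Why fast events force small timesteps can be illustrated with the Courant-Friedrichs-Lewy (CFL) condition, a standard stability limit for explicit hydrodynamics: the step must satisfy dt <= C * dx / v, where dx is the resolution length and v is the fastest signal speed (roughly sound speed plus flow speed). The article does not say which timestep criterion the team uses, so this is a minimal sketch under that assumption. The 1-parsec cell, the Courant factor of 0.3, and the two signal speeds are hypothetical illustration values, not numbers from the study.

```python
# Illustrative CFL timestep estimate; every numeric input below is an assumption.
PC_IN_KM = 3.0857e13   # one parsec in kilometres
YEAR_IN_S = 3.156e7    # one year in seconds (approximate)


def cfl_timestep_years(cell_size_pc: float, signal_speed_km_s: float,
                       courant: float = 0.3) -> float:
    """Largest stable explicit step from the CFL condition dt <= C * dx / v, in years."""
    dt_seconds = courant * cell_size_pc * PC_IN_KM / signal_speed_km_s
    return dt_seconds / YEAR_IN_S


# Hypothetical signal speeds: calm interstellar gas vs. an early supernova blast wave.
calm_gas_dt = cfl_timestep_years(cell_size_pc=1.0, signal_speed_km_s=10.0)
blast_wave_dt = cfl_timestep_years(cell_size_pc=1.0, signal_speed_km_s=1000.0)

print(f"calm gas:   ~{calm_gas_dt:,.0f} years per step")
print(f"blast wave: ~{blast_wave_dt:,.0f} years per step")
print(f"the blast wave needs {calm_gas_dt / blast_wave_dt:.0f}x more steps for the same span")
```

Because dt scales as 1/v, a region where signals move 100 times faster needs 100 times as many steps, regardless of the cell size chosen.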

Shrinking the timestep sharply increases the processing power required. Even with today's best physics-based models, simulating the Milky Way star by star would take 315 hours of computation for every 1 million years of galactic evolution. At that rate, 1 billion years of evolution would take about 36 years of wall-clock time. Simply adding more supercomputer cores is not a practical fix: energy consumption becomes excessive, and each additional core contributes less to overall efficiency. This bottleneck has so far prevented star-by-star modeling of entire galaxies.
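The 36-year figure follows from linear extrapolation of the quoted cost. The sketch below assumes, as the text implicitly does, that the cost per simulated million years stays constant over the whole run; the function name is hypothetical.

```python
HOURS_PER_MYR_CONVENTIONAL = 315.0  # wall-clock hours per 1 Myr simulated (from the text)
HOURS_PER_YEAR = 24 * 365.25        # hours in an average calendar year


def wall_clock_years(hours_per_myr: float, simulated_myr: float) -> float:
    """Extrapolate wall-clock time linearly, assuming a constant cost per simulated Myr."""
    return hours_per_myr * simulated_myr / HOURS_PER_YEAR


# 1 billion years = 1000 million years.
baseline_years = wall_clock_years(HOURS_PER_MYR_CONVENTIONAL, simulated_myr=1000.0)
print(f"1 Gyr at 315 h/Myr: {baseline_years:.1f} years of wall-clock time")
```

That is 315,000 hours, or just under 36 years of continuous computation.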

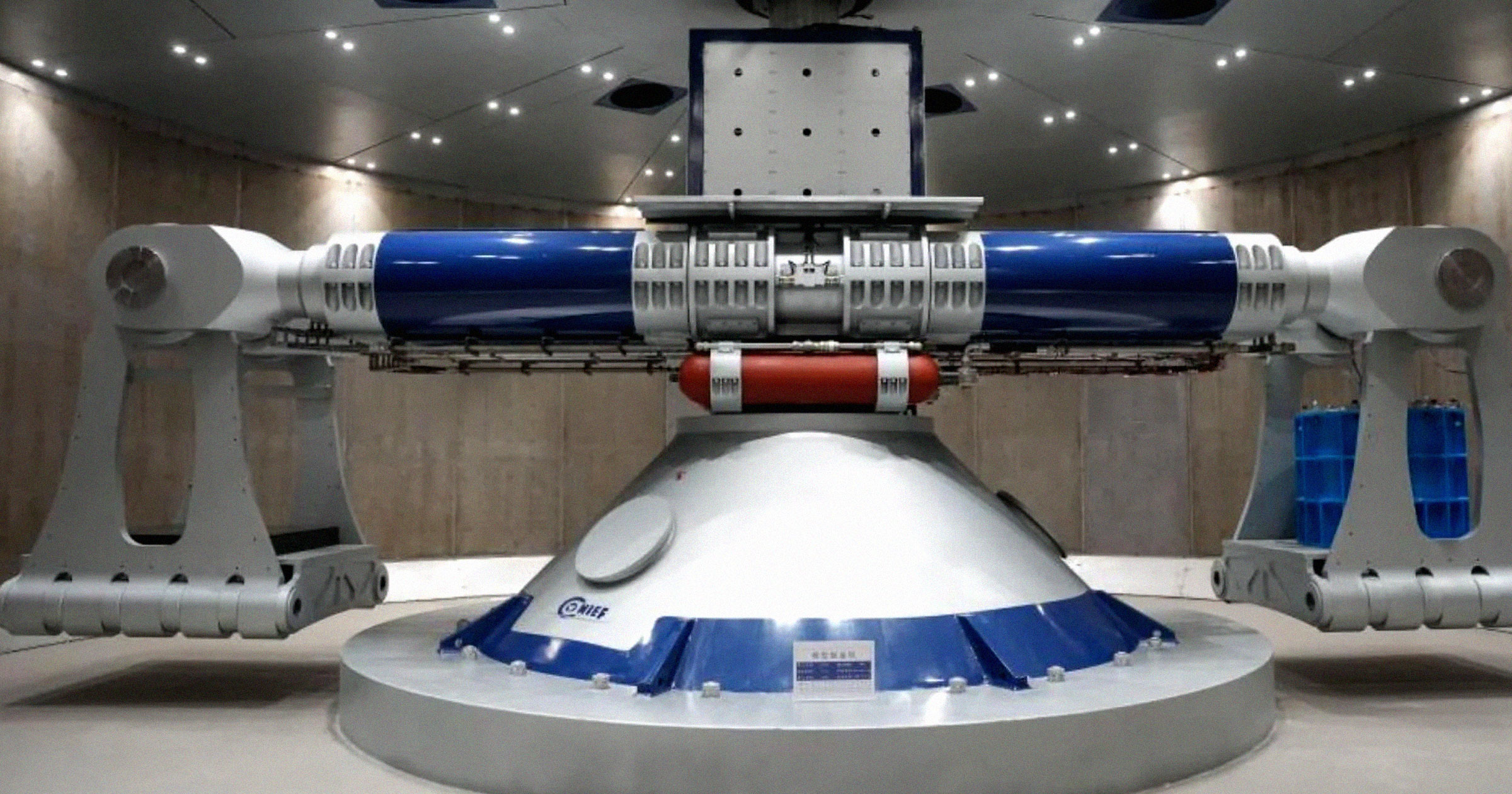

To overcome these barriers, Hirashima and his team developed a method that combines a deep learning surrogate model with conventional physical simulations. The surrogate was trained on high-resolution supernova simulations and learned to predict how gas spreads during the 100,000 years after a supernova explosion, without adding computational load to the main simulation. This AI component lets the researchers capture the galaxy's overall behavior while still modeling small-scale events, including the detailed dynamics of individual supernovae. To validate the approach, the team compared its results against large-scale simulations run on RIKEN's Fugaku supercomputer and The University of Tokyo's Miyabi Supercomputer System, confirming its accuracy and performance.
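The article does not describe how the surrogate is wired into the simulation, so the following is only a hypothetical toy sketch of the general pattern: when a supernova goes off, the gas around it is cut out of the main simulation and handed to a surrogate, which returns the predicted state 100,000 years later. The main loop keeps its large timestep, and the prediction is reinserted once that window has elapsed. Every name here (`GasParticle`, `toy_surrogate`, `run_hybrid`), the 1-D geometry, and the drift-only physics are invented for illustration. `toy_surrogate` is a hand-written rule standing in for a trained network, and the sketch calls it inline without modeling how the team keeps inference from loading the main simulation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

SURROGATE_HORIZON_YR = 100_000.0  # prediction window quoted in the text


@dataclass
class GasParticle:
    x: float       # 1-D position, arbitrary units (hypothetical simplification)
    v: float       # velocity, position units per year
    energy: float  # internal energy, arbitrary units


Surrogate = Callable[[List[GasParticle], float], List[GasParticle]]


def toy_surrogate(region: List[GasParticle], center: float) -> List[GasParticle]:
    """Hypothetical stand-in for a trained network: pushes gas away from the
    explosion center and heats it. A real surrogate would be a learned model."""
    return [GasParticle(x=center + 2.0 * (p.x - center), v=p.v, energy=p.energy * 10.0)
            for p in region]


def run_hybrid(gas: List[GasParticle], steps: int, dt: float,
               explosions: Dict[int, float], radius: float = 1.0,
               surrogate: Surrogate = toy_surrogate) -> Tuple[List[GasParticle], float]:
    """Advance gas with a fixed large step; explosions (step index -> position)
    are delegated to the surrogate instead of shrinking dt."""
    t = 0.0
    pending: List[Tuple[float, List[GasParticle]]] = []  # (release time, predicted gas)
    for step in range(steps):
        if step in explosions:
            center = explosions[step]
            # Remove gas near the explosion and let the surrogate predict its future state.
            region = [p for p in gas if abs(p.x - center) < radius]
            gas = [p for p in gas if abs(p.x - center) >= radius]
            pending.append((t + SURROGATE_HORIZON_YR, surrogate(region, center)))
        # Ordinary large-step update for everything else (pure drift, for brevity).
        for p in gas:
            p.x += p.v * dt
        t += dt
        # Reinsert surrogate predictions whose 100,000-year window has elapsed.
        ready = [batch for release, batch in pending if release <= t]
        pending = [(release, batch) for release, batch in pending if release > t]
        for batch in ready:
            gas.extend(batch)
    return gas, t


# Usage: nine stationary particles, one explosion at x = 0 on the first step.
gas = [GasParticle(x=i * 0.5 - 2.0, v=0.0, energy=1.0) for i in range(9)]
final_gas, t_end = run_hybrid(gas, steps=20, dt=10_000.0, explosions={0: 0.0})
print(len(final_gas), t_end)
```

The design point the sketch tries to show is that the global step `dt` never changes when an explosion happens; the short-timescale physics is replaced by a single prediction over a fixed window.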

The hybrid approach achieves true individual-star resolution for galaxies with more than 100 billion stars, and it does so far faster than before. Simulating 1 million years of galactic evolution, which would take about 315 hours with conventional methods, took just 2.78 hours, roughly 113 times faster. At this rate, 1 billion years of galactic history, which would require about 36 years of computation with conventional methods, can be simulated in approximately 115 days. This reduction opens new avenues for astrophysical research, making longer and more detailed studies of galactic phenomena feasible.
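The speedup and the 115-day projection follow directly from the two quoted per-million-year costs. This sketch again assumes a constant cost per simulated million years.

```python
HOURS_PER_DAY = 24.0
CONVENTIONAL_H_PER_MYR = 315.0  # from the text
HYBRID_H_PER_MYR = 2.78         # from the text

# Ratio of wall-clock cost per simulated million years.
speedup = CONVENTIONAL_H_PER_MYR / HYBRID_H_PER_MYR
# 1 Gyr = 1000 Myr, converted from hours to days.
hybrid_days_per_gyr = HYBRID_H_PER_MYR * 1000.0 / HOURS_PER_DAY

print(f"speedup: {speedup:.0f}x")
print(f"1 Gyr with the hybrid method: {hybrid_days_per_gyr:.1f} days")
```

The result, 2,780 hours or about 115.8 days, matches the roughly 115-day figure, and the ratio of the two costs is about 113.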

The hybrid AI approach could also benefit other fields of computational science that must link small-scale physical processes to large-scale behavior. Meteorology, oceanography, and climate modeling face similar challenges and could gain from tools that accelerate complex, multi-scale simulations. Hirashima summarizes the significance of the work: "I believe that integrating AI with high-performance computing marks a fundamental shift in how we tackle multi-scale, multi-physics problems across the computational sciences. This achievement also shows that AI-accelerated simulations can move beyond pattern recognition to become a genuine tool for scientific discovery — helping us trace how the elements that formed life itself emerged within our galaxy." The research could deepen our understanding of the universe and of the cosmic origins of the elements that underpin life on Earth, and the ability to simulate such complex systems quickly and in detail may accelerate discoveries across many areas of science.