The tech industry is grappling with a growing wave of public skepticism, and in some cases outright backlash, against the integration of artificial intelligence into nearly every part of the computing experience. What many once saw as an exciting frontier of innovation is increasingly perceived as an intrusive, distracting, and often low-quality addition to essential software and services. The disillusionment is palpable across the web, where users vent their frustration over what they call “AI slop”: a deluge of AI-generated content and features that feel unrefined, unnecessary, or even detrimental to their experience. For a significant share of internet users, the promise of AI has soured into a source of anger and mistrust rather than excitement.

The Rising Tide of AI Disillusionment

The backlash is not merely a niche concern for tech enthusiasts; it has become a mainstream source of friction. Recent polls point to growing disdain for AI among Americans, particularly as it shows up in a flood of low-effort AI-generated content clogging news feeds, social media, and even search results. That decline in quality, along with the sense of being buried under automated, often soulless output, has left a large share of internet users disillusioned or even furious.

This sentiment has already turned into tangible resistance. A prominent example is the reaction to Microsoft’s plans for Windows. A large number of Windows users have reportedly refused to upgrade to Windows 11, and the resistance grew after Microsoft announced its intention to evolve the OS into an “agentic operating system.” The term, which implies AI agents that proactively carry out tasks on the user’s behalf, sparked fears of reduced user control, more data collection, and an overly automated computing environment. The backlash against Windows 11’s AI ambitions served as an early warning for the tech industry: users are wary of mandatory AI integration, especially when it reaches into core system functionality and personal autonomy.

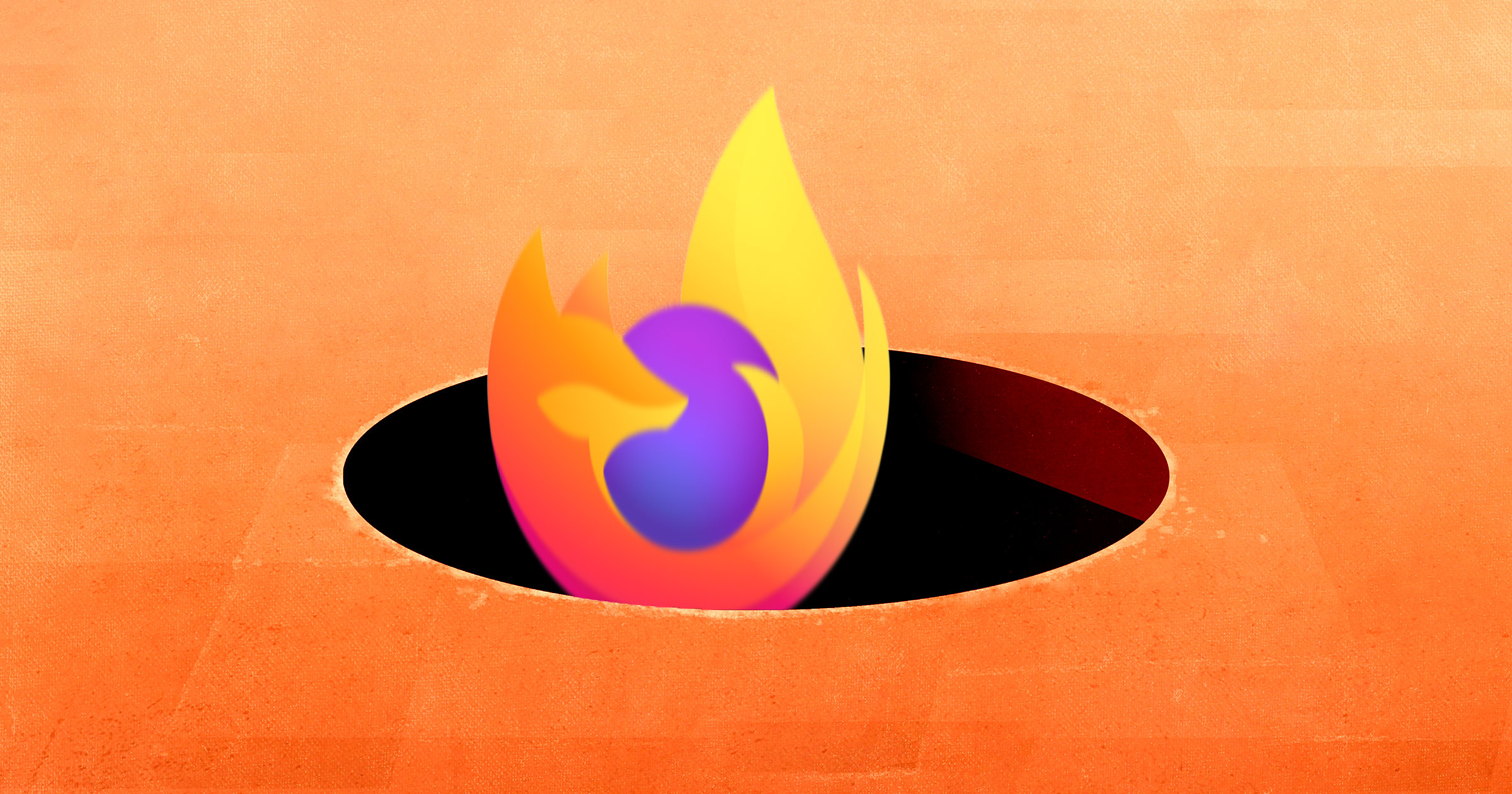

Mozilla’s Controversial Pivot Under New Leadership

Even organizations rooted in user control, privacy, and open-source principles are not immune to the allure, or the pressure, of AI integration. Mozilla, the non-profit-backed organization behind the Firefox browser and long a champion of an open, user-centric web, recently found itself at the center of this anti-AI sentiment. Firefox has historically been praised as a privacy-focused alternative to dominant browsers like Google Chrome and Apple Safari, and it built a loyal user base precisely because it stood apart from the commercial interests and data-hungry practices of its larger competitors.

A significant shift in direction came with the appointment of Anthony Enzor-DeMeo as Mozilla’s new CEO. In a blog post dated December 16, Enzor-DeMeo outlined his vision for Mozilla’s “next chapter,” declaring that Firefox would become a “modern AI browser and support a portfolio of new and trusted software additions.” Many read the statement as an aggressive “tripling down on AI,” and it immediately sent shockwaves through the Firefox community. For users who had chosen Firefox precisely because it resisted Silicon Valley trends and offered an independent browsing experience free of AI, the declaration felt like a profound betrayal of the browser’s core identity and values.

The Immediate and Fierce User Backlash

The reaction from Firefox’s dedicated user base was swift, vocal, and overwhelmingly negative. On X (formerly Twitter) and Reddit in particular, users voiced their dismay, anger, and sense of betrayal. The community, which often sees itself as a custodian of the open web and digital privacy, felt that Mozilla was abandoning its principles to chase a trend many consider antithetical to user empowerment.

One disillusioned user tweeted, “I’ve never seen a company so astoundingly out of touch with the people who want to use its software.” The remark captured the feeling that Mozilla was ignoring the very reasons its users chose Firefox. Another user lamented, “I switched back to Firefox late last year BECAUSE it was the last AI-free browser. I shoulda known.” That comment highlighted the specific expectation many users held for Firefox: a refuge from the AI inundation seen elsewhere. The pleas continued, with users like Michael Swengel begging, “Please don’t turn Firefox into an AI browser. That’s a great way to push us to alternatives.” The message was clear: embracing AI without clear user controls or a nuanced understanding of user preferences directly threatened Firefox’s user retention and its unique market position.

Beyond social media, the community also organized more formal expressions of discontent. An open letter posted to the Firefox subreddit articulated a broader critique of Mozilla’s leadership and its perceived disconnect from its user base. The letter pointed out the irony that “in a post announcing this new direction and highlighting ‘agency and choice,’ there was little mention of user input or feedback.” It further criticized Mozilla’s historical “pattern of struggling to implement and support basic features” while often failing “to even acknowledge serious user feedback.” The core of the argument was that Firefox didn’t need to mimic tech giants to succeed: “Firefox doesn’t need to become Google or Microsoft to succeed by both business and user standards. It’s beloved precisely because it’s not. I hope that distinction isn’t lost as Mozilla enters its ‘next chapter’ as part of a ‘broader ecosystem of trusted software.’” This letter powerfully summarized the community’s desire for Firefox to remain true to its identity as an independent, user-focused browser, rather than chasing fleeting industry trends.

Mozilla’s Damage Control: The “AI Kill Switch” Emerges

The outcry was intense enough to push Mozilla into immediate damage control. Recognizing the blow to user trust, the company moved quickly to clarify its new CEO’s comments and reassure its agitated community. The first response came in a post on Mastodon, a platform popular with privacy-conscious users.

In this post, Mozilla tried to ease fears by stating, “Something that hasn’t been made clear: Firefox will have an option to completely disable all AI features.” To emphasize the seriousness of the commitment, the company added, “We’ve been calling it the AI kill switch internally. I’m sure it’ll ship with a less murderous name, but that’s how seriously and absolutely we’re taking this.” The promised “AI kill switch” was a clear attempt to address the core concern of user control and choice, acknowledging that a significant portion of Firefox’s user base simply did not want AI in their browsing experience. The move aimed to position future AI features as optional rather than mandatory, giving users a way to keep their preferred AI-free environment within Firefox.

Further Clarification Fails to Quell the Storm

Despite the initial damage control efforts, the controversy was far from over. In an apparent attempt to further reassure the company’s most diehard fans, CEO Anthony Enzor-DeMeo directly engaged with the community, taking to the comments section of the aforementioned open letter on Reddit. There, he reiterated Mozilla’s commitment to user control, stating, “Rest assured, Firefox will always remain a browser built around user control. That includes AI. You will have a clear way to turn AI features off. A real kill switch is coming in Q1 of 2026.”

However, rather than calming the situation, Enzor-DeMeo’s comment fanned the flames further. Promising a kill switch for “Q1 of 2026” made clear that it had not yet shipped, which implied that users would have to live with unwanted AI features in the meantime. More critically, the wording sparked a debate about the definition of “opt-in” versus “opt-out.” One user responded pointedly, “If a ‘kill switch’ is the official control for this, then the entire organization needs to stop referring to your ‘AI’ features as ‘opt-in.’ This is clearly opt-out.” The distinction matters to privacy-conscious users: “opt-in” means features are off by default and must be explicitly enabled, while “opt-out” means features are on by default and must be explicitly disabled. The perception that Mozilla was labeling an opt-out mechanism as opt-in further eroded trust. As the user added, “If Mozilla can’t agree to that basic definition, I don’t see how users are supposed to trust it’ll actually work.” The semantic disagreement exposed a deeper fracture in communication and trust between Mozilla’s leadership and its community.

A Contrasting Vision: Vivaldi’s Anti-AI Stance

In stark contrast to Mozilla’s struggles with AI integration, another browser company, Vivaldi, has taken a markedly different and openly skeptical stance, particularly toward generative AI features it considers intrusive. Vivaldi, known for its highly customizable browser built on Google’s open-source Chromium project, has positioned itself as a champion of user autonomy and an alternative to the increasingly automated web experience offered by tech giants.

In an August blog post, Vivaldi CEO Jon von Tetzchner directly criticized the trend of AI integration, accusing companies like Google and Microsoft of “reshaping the address bar into an assistant prompt, turning the joy of exploring into inactive spectatorship.” Von Tetzchner articulated a philosophy rooted in empowering users to actively engage with the internet, rather than being passively served by algorithms. He affirmed Vivaldi’s commitment to “continue building a browser for curious minds, power users, researchers, and anyone who values autonomy.”

Crucially, von Tetzchner outlined clear ethical boundaries for AI use within Vivaldi: “If AI contributes to that goal without stealing intellectual property, compromising privacy or the open web, we will use it. If it turns people into passive consumers, we will not.” This statement provides a clear framework, reassuring Vivaldi users that AI will only be considered if it genuinely enhances the user’s active browsing experience, respects intellectual property, and upholds privacy principles. This approach directly contrasts with the perception of AI as a default, often intrusive, component in other browsers, and positions Vivaldi as a haven for users seeking a more traditional, control-oriented web experience.

The Broader Implications for Open-Source and User Trust

The Firefox AI controversy highlights a critical dilemma facing open-source projects and companies that have historically championed user freedom and privacy. In an era dominated by large tech corporations with vast resources, the pressure to adopt emerging technologies like AI to stay competitive is immense. But for organizations like Mozilla, whose brand identity is deeply intertwined with user trust, open standards, and an alternative vision for the internet, embracing new trends can be a double-edged sword. Users who gravitate toward Firefox often do so precisely to escape the perceived excesses and data-harvesting practices of the mainstream. Introducing AI, especially with what is perceived as an opt-out rather than opt-in approach and a kill switch that has yet to ship, risks alienating the very community that forms the backbone of the browser’s support.

This incident also underscores the broader challenge of defining “innovation” in the current tech landscape. Is innovation always about adding the latest features, or is it sometimes about steadfastly upholding core values and providing a distinct, un-bloated experience? The open letter from the Firefox subreddit eloquently argued that Firefox’s success stemmed from its difference, not its similarity, to Google or Microsoft. For many, the “trusted software additions” envisioned by Mozilla’s new CEO could easily become “untrusted” if they infringe upon the principles that made Firefox beloved in the first place.

Conclusion: A Crossroads for Firefox

Mozilla and Firefox find themselves at a significant crossroads. The intense backlash against the proposed AI pivot is a potent reminder of the power of community sentiment and the importance of transparent, user-centric decision-making, especially for an open-source project. While the promised “AI kill switch” addresses immediate concerns about user control, the controversy surrounding it, particularly the opt-out versus opt-in debate and the wait until Q1 2026, shows that trust, once fractured, is difficult to rebuild quickly.

The path forward for Firefox will require a delicate balancing act: innovating to remain relevant in a rapidly evolving tech landscape while simultaneously staying true to the core values of user privacy, control, and an open web that have defined its identity for decades. The contrasting approach of Vivaldi offers a clear alternative vision, suggesting that there is still a significant market for browsers that prioritize user autonomy above all else. How Mozilla navigates this challenge will not only determine the future of Firefox but also send a powerful message about the viability of user-centric principles in an increasingly AI-driven world.
