Tensor operations, the multidimensional generalization of matrix arithmetic, underpin much of modern technology, particularly artificial intelligence. They go well beyond the basic arithmetic most people are familiar with. To picture them, imagine manipulating a Rubik’s cube in several dimensions at once, rotating, slicing, and rearranging it in the same motion. Humans and conventional digital computers have to break such tasks into sequential steps, whereas light can carry out many of these operations at the same time.

Today, tensor operations are central to AI systems used for image processing, language understanding, and many other tasks. As data volumes keep growing, conventional digital hardware such as graphics processing units (GPUs) is under increasing strain in speed, energy efficiency, and scalability. The computational demands of modern AI are pushing the limits of what current silicon-based architectures can deliver.

To address these challenges, an international team of researchers led by Dr. Yufeng Zhang of the Photonics Group at Aalto University’s Department of Electronics and Nanoengineering has developed a new approach to computation. Their method completes complex tensor calculations in a single pass of light through a purpose-designed optical system. The process, described as single-shot tensor computing, runs at the speed of light.

"Our method performs the same kinds of operations that today’s GPUs handle, like convolutions and attention layers, but does them all at the speed of light," explained Dr. Zhang in a statement. "Instead of relying on electronic circuits, which are inherently sequential and energy-intensive, we leverage the inherent physical properties of light to perform many computations simultaneously. This is a paradigm shift in how we approach AI computation." The approach exploits physical properties of light, such as its wave nature and the fact that it propagates without electrical resistance, to reach a degree of parallelism that is hard to achieve with electronic hardware.

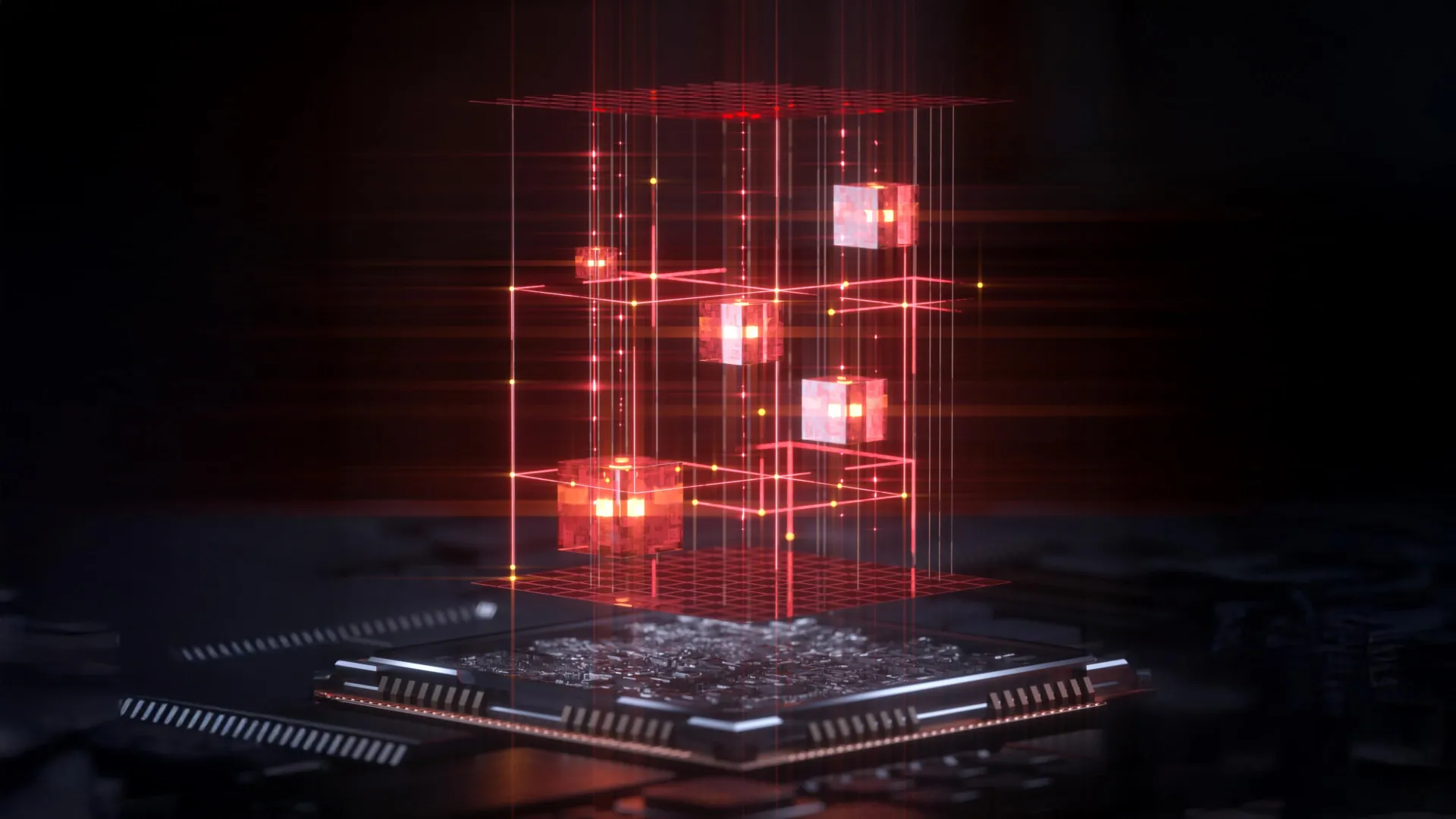

At the core of the method is the encoding of digital information directly into the amplitude and phase of light waves, which turns numerical data into physical variations of the optical field. As these waves propagate and interact within the optical system, they carry out the mathematical procedures that underpin deep learning, such as matrix and tensor multiplication. The computations arise as a natural consequence of how the light fields interact, rather than from programmed electronic gates. By working with multiple wavelengths of light at once, the researchers extended the technique to higher-order tensor operations, which opens the way to processing more complex AI models.
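As a rough numerical illustration of this idea (not the team's actual optical design), the sketch below assumes that each number is carried by one complex field value, with its magnitude as the amplitude and its sign as a phase of 0 or π, and that a passive linear optical system can be modelled as a transfer matrix. The function names `encode`, `propagate`, and `propagate_wavelengths`, as well as the array sizes, are hypothetical and chosen only for this example.

```python
import numpy as np

rng = np.random.default_rng(0)


def encode(values):
    """Encode real numbers as a complex field: magnitude -> amplitude, sign -> phase (0 or pi)."""
    amplitude = np.abs(values)
    phase = np.where(values < 0, np.pi, 0.0)
    return amplitude * np.exp(1j * phase)


def propagate(transfer, field):
    """Model one pass through a linear optical system as a matrix-vector product (an assumption)."""
    return transfer @ field


def propagate_wavelengths(transfers, fields):
    """One transfer matrix and one input field per wavelength channel k, all evaluated together."""
    return np.einsum("kij,kj->ki", transfers, fields)


# Single wavelength: the output field reproduces an ordinary matrix-vector product.
x = np.array([0.5, -1.0, 2.0, 0.25])
W = rng.normal(size=(3, 4))   # hypothetical transfer matrix of the optical system
y = propagate(W, encode(x))

# Two wavelengths: the extra axis turns the matrix product into a higher-order tensor operation.
X = rng.normal(size=(2, 4))   # one input vector per wavelength
T = rng.normal(size=(2, 3, 4))  # one transfer matrix per wavelength
Y = propagate_wavelengths(T, X)
```

In this toy model the real part of `y` equals `W @ x`, and each row of `Y` is the product for one wavelength channel. A real optical system would also involve detection, noise, and calibration, none of which are modelled here.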

Dr. Zhang offered an analogy to illustrate how the optical computing method works: "Imagine you’re a customs officer who must inspect every parcel through multiple machines with different functions and then sort them into the right bins. Normally, you’d process each parcel one by one, a time-consuming and sequential process. Our optical computing method merges all parcels and all machines together – we create multiple ‘optical hooks’ that connect each input to its correct output. With just one operation, one pass of light, all inspections and sorting happen instantly and in parallel." The analogy captures how the light-based system removes sequential bottlenecks and handles every input in parallel.
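The customs analogy can be mimicked numerically under a simplifying assumption: if every "machine" and the final sorting step are linear maps, they can be merged into one combined matrix that links each input directly to its output. The sketch below compares a parcel-by-parcel loop with a single merged operation. All names (`parcels`, `machines`, `sorter`, `hooks`) and sizes are hypothetical, and the code illustrates the analogy only, not the published optical method.

```python
import numpy as np

rng = np.random.default_rng(1)

parcels = rng.normal(size=(5, 4))                        # 5 parcels, 4 features each
machines = [rng.normal(size=(4, 4)) for _ in range(3)]   # three linear inspection "machines"
sorter = rng.normal(size=(2, 4))                         # maps inspected features to 2 bins


def sequential(parcels):
    """Process parcels one by one, passing each through every machine in turn."""
    results = []
    for parcel in parcels:
        value = parcel
        for machine in machines:
            value = machine @ value
        results.append(sorter @ value)
    return np.array(results)


def single_pass(parcels):
    """Merge all machines and the sorter into one matrix, then handle every parcel at once."""
    hooks = sorter
    for machine in reversed(machines):
        hooks = hooks @ machine   # builds sorter @ m3 @ m2 @ m1
    return parcels @ hooks.T


out_sequential = sequential(parcels)
out_single = single_pass(parcels)
```

Both functions return the same bin scores up to floating-point rounding. The merged version replaces the nested loops with one matrix product, which is the kind of operation the optical system is described as performing in a single pass of light.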

A key advantage of the method is its simplicity. The mathematical operations happen passively as light travels along the optical path, so the system needs no active electronic control or switching during computation. This reduces complexity and potential points of failure, and it is central to the method’s potential for high efficiency and scalability.

Professor Zhipei Sun, who leads Aalto University’s Photonics Group, described how broadly the approach can be applied: "This approach can be implemented on almost any optical platform. The fundamental principles are versatile and not tied to a specific, proprietary technology. In the future, we plan to integrate this computational framework directly onto photonic chips, enabling light-based processors to perform complex AI tasks with extremely low power consumption. This integration onto chips will be crucial for practical deployment." Because the technique is not tied to one optical platform, it could be adapted to a range of hardware.

The long-term goal for Dr. Zhang and his team is to adapt the technique to the hardware and platforms already used by major technology companies. He estimates that, with focused development and collaboration, the method could be incorporated into such large-scale systems within 3 to 5 years. The projection suggests the team considers the core concept close to practical use.

"This will create a new generation of optical computing systems, significantly accelerating complex AI tasks across a myriad of fields," Dr. Zhang concluded. Fields that rely heavily on AI computation, such as autonomous driving, drug discovery, climate modeling, advanced robotics, and natural language processing, could benefit from the advance. The research was published in the journal Nature Photonics on November 14th, 2025.