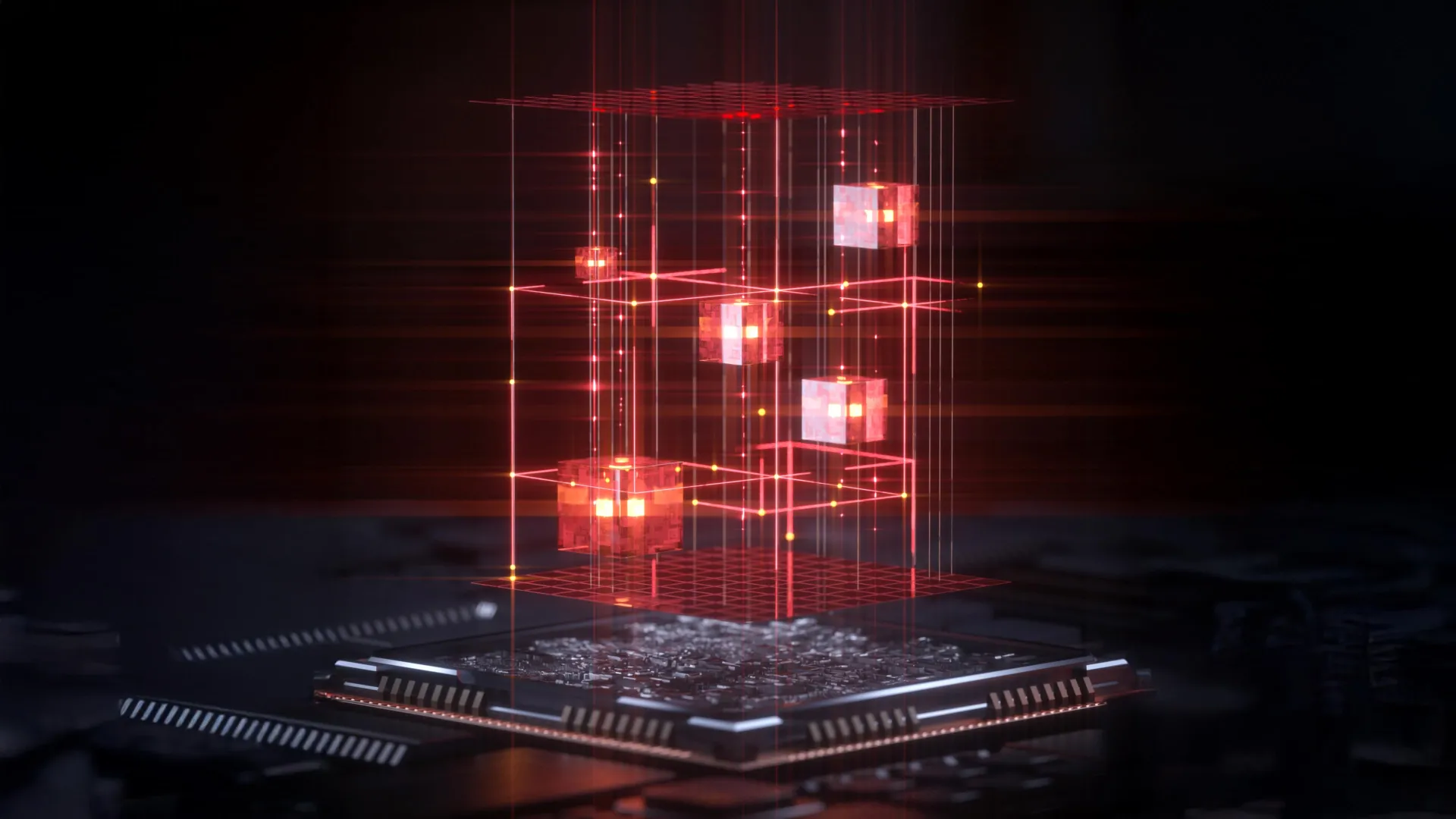

Tensor operations can be pictured as manipulating a multi-dimensional Rubik’s cube: rotating, slicing, and rearranging its layers. Humans and conventional silicon-based processors have to plan and carry out these manipulations step by step, as a long series of separate operations. That approach works for smaller problems but becomes a serious bottleneck for the massive datasets and complex models behind cutting-edge AI. The sheer volume of data generated today, from social media feeds to scientific experiments, puts heavy pressure on existing hardware such as graphics processing units (GPUs). Although GPUs are powerful, they still face limits in speed, energy consumption, and how efficiently they can scale to meet AI’s growing demands.

The challenge of handling tensor operations efficiently has prompted an international research effort led by Dr. Yufeng Zhang and his team at Aalto University’s Photonics Group in the Department of Electronics and Nanoengineering. Their approach, called "single-shot tensor computing," completes complex tensor calculations in a single pass of light through an optical system. Because the computation happens as the light propagates, it runs at the ultimate physical speed limit: the speed of light itself.

"Our method performs the same kinds of operations that today’s GPUs handle, like convolutions and attention layers, but does them all at the speed of light," Dr. Zhang explained, highlighting the direct relevance of their work to current AI hardware. "Instead of relying on electronic circuits, we use the physical properties of light to perform many computations simultaneously." This shift from electronic to optical processing is key to overcoming the inherent speed limitations of traditional computing.
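Part of why a single optical operation can cover layers such as convolutions is that a convolution is itself a linear map, so it can be written as one matrix-vector product. The Python sketch below is a generic textbook illustration, not code from the Aalto study, and its function names are hypothetical. It computes a 1-D convolution (in the cross-correlation form used by deep-learning layers) once with a sliding-window loop and once as a single product with a banded matrix.

```python
# A 1-D "valid" convolution (cross-correlation, as in deep-learning layers)
# computed two ways: a sliding-window loop and one matrix-vector product.

def conv1d_loop(x, k):
    """Slide kernel k across x, producing one output element per step."""
    n_out = len(x) - len(k) + 1
    return [sum(k[j] * x[i + j] for j in range(len(k))) for i in range(n_out)]

def conv_matrix(k, n):
    """Build the (n - len(k) + 1) x n banded (Toeplitz) matrix
    that performs the same convolution on a length-n input."""
    n_out = n - len(k) + 1
    rows = []
    for i in range(n_out):
        row = [0.0] * n
        for j, kj in enumerate(k):
            row[i + j] = kj  # kernel shifted one position per output row
        rows.append(row)
    return rows

def matvec(m, v):
    """Plain matrix-vector product: one linear operation over all outputs."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

x = [1.0, 2.0, 3.0, 4.0, 5.0]
k = [1.0, 0.0, -1.0]
print(conv1d_loop(x, k))                   # [-2.0, -2.0, -2.0]
print(matvec(conv_matrix(k, len(x)), x))   # [-2.0, -2.0, -2.0]
```

The loop mirrors step-by-step processing, while the matrix form is the kind of single linear operation a passive optical system could, in principle, apply in one pass.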

The key to this advance lies in how the researchers encode digital information into light. They set the amplitude and phase of light waves, turning numerical data into physical variations in the optical field. As these modulated waves propagate and interact, they carry out the required mathematical operations automatically, including matrix and tensor multiplication, the core of deep learning algorithms. To extend the method further, the team also used multiple wavelengths of light, which lets the technique handle more complex, higher-order tensor operations and broadens the range of problems it can address.
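The idea that a linear optical system multiplies encoded data by a matrix can be sketched numerically. The Python below is a simplified model under stated assumptions, not the team's actual setup: numbers are encoded as complex field amplitudes (magnitude and phase), a passive optical element is represented by an assumed complex transfer matrix, and each wavelength is treated as an independent channel with its own matrix. All function names and matrices are hypothetical, and the sketch reads the output field directly, ignoring how it would be detected in practice.

```python
import cmath
import math

def encode(magnitudes, phases):
    """Encode numbers as complex field amplitudes: magnitude * e^(i * phase)."""
    return [m * cmath.exp(1j * p) for m, p in zip(magnitudes, phases)]

def propagate(transfer, field):
    """Linear optics model: each output is a weighted superposition of all
    inputs, i.e. a complex matrix-vector product with the transfer matrix."""
    return [sum(t * e for t, e in zip(row, field)) for row in transfer]

def propagate_channels(transfers, fields):
    """Hypothetical wavelength multiplexing: channel w has its own transfer
    matrix and input field. Looped here only for simulation; physically the
    channels would share one pass of light, adding an extra tensor index."""
    return [propagate(t, f) for t, f in zip(transfers, fields)]

# Signed data: phase 0 encodes "+", phase pi encodes "-", so x is about [1, -2].
x = encode([1.0, 2.0], [0.0, math.pi])

# Assumed lossless 2x2 transfer matrix (an idealized 50:50 splitter in one convention).
s = 1 / math.sqrt(2)
H = [[s, s], [s, -s]]

y = propagate(H, x)
print([round(v.real, 4) for v in y])  # [-0.7071, 2.1213]

# Two wavelength channels, each with its own matrix and input.
I2 = [[1, 0], [0, 1]]
ys = propagate_channels([H, I2], [x, encode([3.0, 4.0], [0.0, 0.0])])
print([[round(v.real, 4) for v in ch] for ch in ys])  # [[-0.7071, 2.1213], [3.0, 4.0]]
```

Using a phase of π to represent a minus sign shows why phase, and not only amplitude, matters: it lets the light field carry signed values.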

Dr. Zhang offered a vivid analogy to illustrate the approach: "Imagine you’re a customs officer who must inspect every parcel through multiple machines with different functions and then sort them into the right bins. Normally, you’d process each parcel one by one. Our optical computing method merges all parcels and all machines together — we create multiple ‘optical hooks’ that connect each input to its correct output. With just one operation, one pass of light, all inspections and sorting happen instantly and in parallel." The analogy captures the two features that define the method: massive parallelism and the removal of sequential bottlenecks.

A particularly compelling advantage of this method is its simplicity and minimal need for external control. The mathematical operations take place naturally as light travels through the optical system, an approach known as passive optical processing. Because the system needs no active electronic switching or real-time control during the computation itself, it is more efficient and consumes less energy.

Professor Zhipei Sun, leader of Aalto University’s Photonics Group, emphasized the broad applicability of the discovery. "This approach can be implemented on almost any optical platform," he said. The team’s plans are ambitious: "In the future, we plan to integrate this computational framework directly onto photonic chips, enabling light-based processors to perform complex AI tasks with extremely low power consumption." Integrating the method onto photonic chips could lead to compact, highly efficient AI accelerators.

Dr. Zhang’s ultimate goal is to adapt the technique to the hardware and platforms already used by major technology companies. He estimates that the method could be integrated into such systems within three to five years, which would mark a relatively fast transition from laboratory research to practical application.

"This will create a new generation of optical computing systems, significantly accelerating complex AI tasks across a myriad of fields," Dr. Zhang concluded, envisioning AI that is more powerful, more accessible, and more energy-efficient. The study describing the work was published in Nature Photonics on November 14, 2025.

If the approach scales as hoped, it could open new possibilities in AI research and in applications that depend on fast, efficient processing of complex data, such as scientific discovery, personalized medicine, and autonomous systems. Harnessing this kind of computing power with a single beam of light would represent a major shift in how computation for AI is approached. Moving tensor operations from electronics to light promises not only higher speed but also much lower energy consumption, addressing one of the most pressing sustainability challenges in AI today. Faster tensor computation could allow models that are currently limited by computational bottlenecks to be trained faster, grow more complex, and be deployed more widely, broadening access to advanced AI capabilities. The passive nature of the optical processing also simplifies hardware design and could lower operating costs, making the technology a strong candidate for wide adoption. As the research moves toward integration on photonic chips, AI running on light-based processors comes closer to reality.