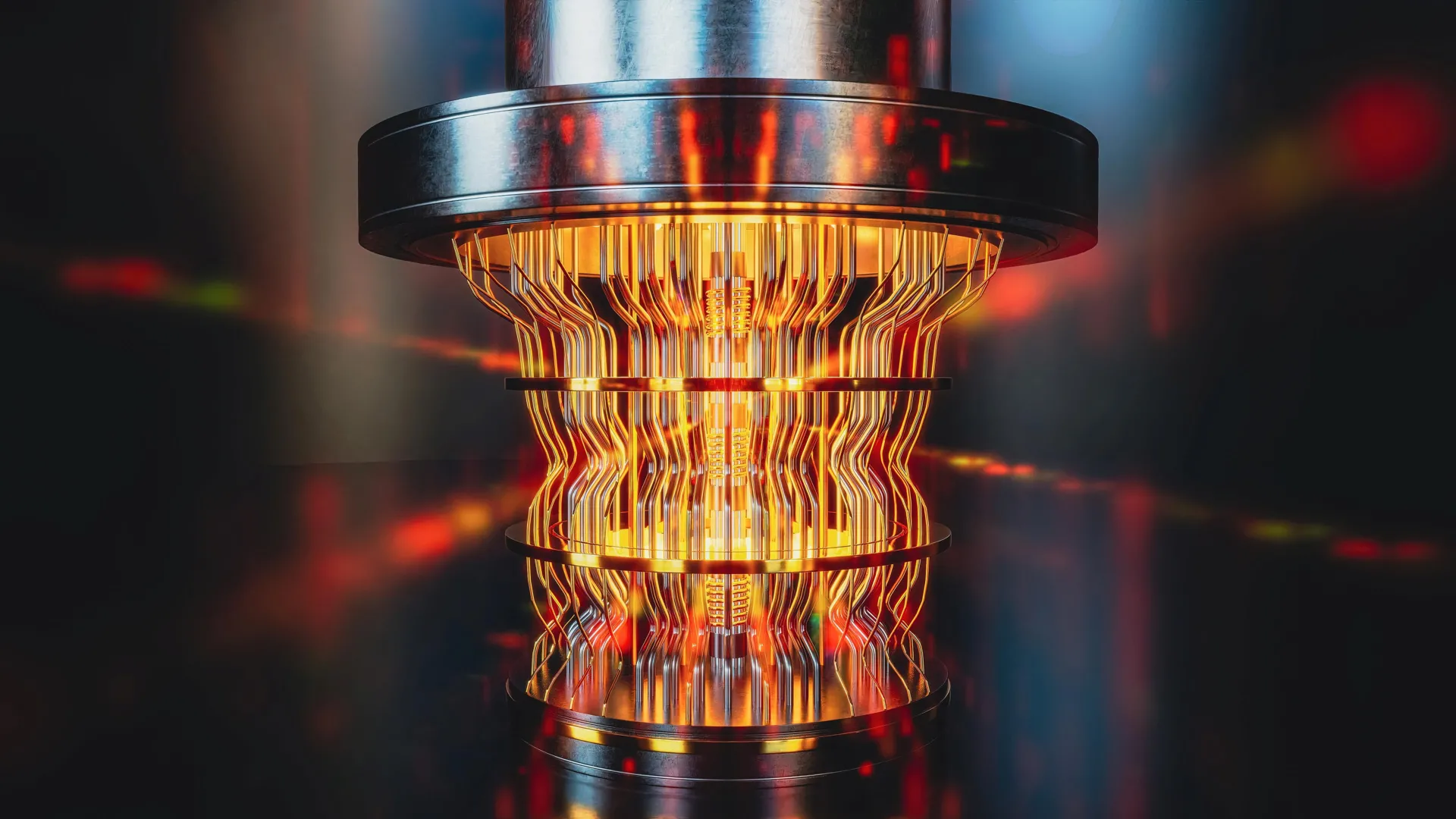

Quantum computing, a paradigm that promises to tackle problems far beyond the reach of even the most powerful classical supercomputers, is no longer a distant dream but a rapidly advancing reality. Its potential to unlock breakthroughs in fields as diverse as fundamental physics, personalized medicine, cryptography, and artificial intelligence is fueling a global race to build the first reliable, large-scale commercial quantum computers. As these machines move closer to practical use, however, a critical hurdle has emerged: how can we confirm that a quantum computer's answer is correct when the problem is so complex that checking it with classical methods would take an impractically long time? A study from Swinburne University addresses this question with new techniques that promise an efficient way to validate one class of quantum devices.

The difficulty of verifying quantum computations stems from the nature of the problems they are designed to solve. As Dr. Alexander Dellios, the lead author of the study and a Postdoctoral Research Fellow at Swinburne’s Centre for Quantum Science and Technology Theory, explains, "There exists a range of problems that even the world’s fastest supercomputer cannot solve, unless one is willing to wait millions, or even billions, of years for an answer." For exactly the problems where quantum computers offer a significant speedup, then, classical computers are not a viable tool for checking the result. To build trust in quantum technology and enable its practical deployment, he adds, "methods are needed to compare theory and result without waiting years for a supercomputer to perform the same task."

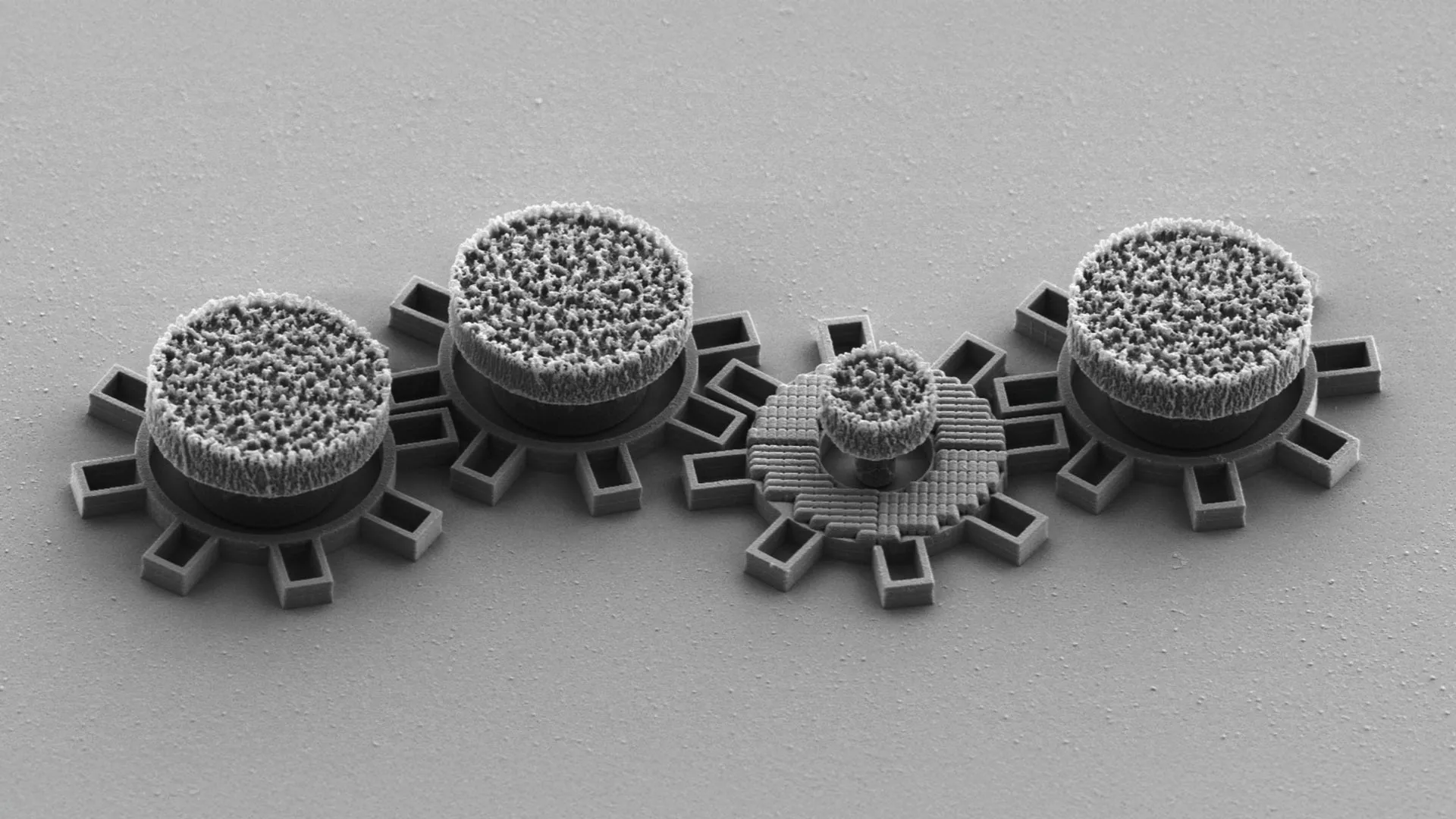

The Swinburne University research team has confronted this challenge by developing techniques tailored to a particular class of quantum devices known as Gaussian Boson Samplers (GBS). GBS machines manipulate photons, the fundamental particles of light, to generate complex probability distributions. These distributions represent solutions to specific computational problems that would take the most powerful conventional supercomputers thousands of years to simulate. For a GBS experiment to count as a reliable quantum computation, the distribution it produces must match the one that theory predicts.

The techniques developed by Dellios and his colleagues offer a solution. "In just a few minutes on a laptop," Dr. Dellios says, "the methods developed allow us to determine whether a GBS experiment is outputting the correct answer and what errors, if any, are present." Cutting verification time from millennia to minutes is a major step toward making quantum computing trustworthy.

To test their approach, the researchers applied it to a recently published GBS experiment, one whose output would take an estimated 9,000 years to reproduce using the most advanced supercomputers available today. The analysis showed that the probability distribution generated by the experiment did not match the intended theoretical target. It also uncovered a previously unacknowledged source of "extra noise" within the experiment. Noise, in the context of quantum computing, refers to unwanted disturbances that cause errors and compromise the integrity of a computation. Identifying and quantifying this noise is crucial for understanding and mitigating its effects.
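
As a rough illustration of what "comparing an output distribution against a theoretical target" can mean in practice, the sketch below applies a generic Pearson chi-square comparison to binned counts. It is not the Swinburne team's method, which the article does not describe in detail; the four-bin `target` and `noisy` distributions, the sample size, and the function names are all hypothetical values chosen only for demonstration.

```python
import random


def chi_square_statistic(observed_counts, target_probs):
    """Pearson chi-square statistic: sum of (observed - expected)^2 / expected."""
    total = sum(observed_counts)
    stat = 0.0
    for observed, p in zip(observed_counts, target_probs):
        expected = total * p
        stat += (observed - expected) ** 2 / expected
    return stat


def draw_counts(probs, n_samples, rng):
    """Draw n_samples outcomes from probs and tally how many land in each bin."""
    outcomes = rng.choices(range(len(probs)), weights=probs, k=n_samples)
    counts = [0] * len(probs)
    for outcome in outcomes:
        counts[outcome] += 1
    return counts


# Hypothetical target distribution over four coarse-grained outcome bins.
target = [0.4, 0.3, 0.2, 0.1]
# Hypothetical device whose output has drifted away from the target.
noisy = [0.34, 0.30, 0.22, 0.14]

rng = random.Random(0)  # fixed seed so the run is repeatable
n_samples = 20000

# A device that samples the target faithfully gives a small statistic;
# a device that samples the drifted distribution gives a large one.
faithful_stat = chi_square_statistic(draw_counts(target, n_samples, rng), target)
noisy_stat = chi_square_statistic(draw_counts(noisy, n_samples, rng), target)

print(f"faithful device: chi-square = {faithful_stat:.1f}")
print(f"noisy device:    chi-square = {noisy_stat:.1f}")
```

With four bins there are three degrees of freedom, so a statistic well above the standard 5% critical value of about 7.8 signals a mismatch with the target. The hard part for a real GBS experiment, and the part this toy example leaves out entirely, is obtaining the target probabilities in the first place without a computation that takes thousands of years.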

The discrepancy and the unexpected noise raise further questions. The next step for the researchers is to determine whether reproducing this unexpected probability distribution is itself a computationally difficult task. If it is, the device may still be performing a genuinely hard quantum computation, just not the intended one. Alternatively, the errors stemming from the identified noise may have caused the device to lose its quantum properties, often referred to as its "quantumness." Telling these cases apart is vital for distinguishing genuine quantum computational power from the artifacts of faulty hardware or experimental conditions.

The implications of this research extend beyond the specific GBS platform. The outcome of the investigation could shape the development of large-scale, error-free quantum computers robust enough for commercial deployment. Dr. Dellios is clear about what is at stake: "Developing large-scale, error-free quantum computers is a herculean task that, if achieved, will revolutionize fields such as drug development, AI, cyber security, and allow us to deepen our understanding of the physical universe."

He further emphasizes the indispensable role of scalable validation methods in this ambitious pursuit. "A vital component of this task is scalable methods of validating quantum computers," he asserts, "which increase our understanding of what errors are affecting these systems and how to correct for them, ensuring they retain their ‘quantumness’." This focus on understanding and correcting errors is at the heart of building reliable quantum machines. By providing a means to swiftly and accurately assess the output of quantum experiments, the Swinburne team’s work equips researchers and developers with a powerful tool to diagnose problems, refine algorithms, and ultimately engineer quantum computers that are not only powerful but also dependable.

GBS machines also matter to the broader field of quantum computing. They serve as a testbed for exploring fundamental quantum phenomena and for developing error correction techniques. Being able to verify GBS outputs reliably is a significant step toward demonstrating the practical utility of these devices for specific computational tasks, such as simulating complex molecular interactions for drug discovery or optimizing logistical networks.

The validation techniques developed by Dellios and his team offer two main benefits. First, they provide a fast check of computational integrity, showing researchers whether the results of a quantum experiment can be trusted. This speeds up scientific discovery by reducing the time spent debugging and re-running experiments whose outputs could not be verified. Second, by pinpointing the presence and nature of errors, the methods enable targeted improvements in quantum hardware design and fabrication. Understanding how noise affects quantum computations is key to developing more robust qubits and more resilient quantum circuits.

The journey towards fault-tolerant quantum computing, where errors can be effectively managed and corrected, is a long and arduous one. The work at Swinburne University nonetheless represents an important stride in this direction. By making verification fast and practical, the researchers are building confidence in current quantum technologies and laying the groundwork for future advances. The ability to check quantum computations quickly and reliably means that developers can iterate on their designs more effectively, experiment with new quantum algorithms, and accelerate the timeline for realizing the potential of quantum computing across scientific and industrial sectors. The result moves the field closer to a future where the answers from quantum computers can be trusted. If methods like these can be extended to other quantum platforms, the days of questioning the validity of quantum computations may be numbered.