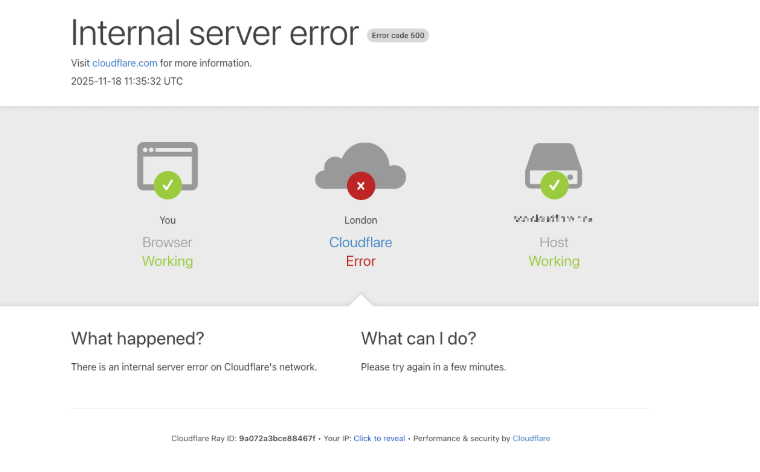

The incident began around 6:30 AM EST (11:30 AM UTC) on November 18th, when Cloudflare’s status page acknowledged an "internal service degradation." Over the following hours, services repeatedly came back and then failed again. Many websites that relied on Cloudflare for their web application firewall (WAF), bot management, and Domain Name System (DNS) services were left stranded: with the Cloudflare portal unreachable and their DNS hosted on the same platform, they had little or no way to migrate away.

However, some Cloudflare customers did manage to reroute their domains away from the platform during the outage. According to Aaron Turner, a faculty member at IANS Research, those organizations should now take a close look at their WAF logs from that period. Turner said Cloudflare’s WAF does a good job of filtering out malicious traffic that matches any of the top ten types of application-layer attacks defined by OWASP, including credential stuffing, cross-site scripting (XSS), SQL injection, bot attacks, and API abuse. In his view, the outage is a reminder for Cloudflare customers to reassess how well their own application and website defenses hold up without Cloudflare in front of them.

Turner elaborated on this point, suggesting that developers may have inadvertently become complacent regarding certain security vulnerabilities, such as SQL injection, precisely because Cloudflare was effectively blocking these threats at the network’s edge. "Your developers could have been lazy in the past for SQL injection because Cloudflare stopped that stuff at the edge," Turner stated. "Maybe you didn’t have the best security QA [quality assurance] for certain things because Cloudflare was the control layer to compensate for that."

He shared an anecdote about a company he was assisting, which experienced a dramatic surge in log volume during the outage. This organization was still in the process of sifting through the data to differentiate between genuinely malicious activity and mere background noise. "It looks like there was about an eight-hour window when several high-profile sites decided to bypass Cloudflare for the sake of availability," Turner remarked. "Many companies have essentially relied on Cloudflare for the OWASP Top Ten [web application vulnerabilities] and a whole range of bot blocking. How much badness could have happened in that window? Any organization that made that decision needs to look closely at any exposed infrastructure to see if they have someone persisting after they’ve switched back to Cloudflare protections."
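As a rough illustration of the log triage Turner describes, the sketch below keeps only the requests that arrived during a bypass window and labels those matching a few crude attack signatures. The record shape (`(timestamp, request_path)` tuples), the `flag_requests` helper, and the three regular expressions are assumptions made for this example; they are nowhere near a substitute for WAF rules, and a real review would work from the origin server's own log format.

```python
import re
from datetime import datetime, timezone
from urllib.parse import unquote_plus

# Deliberately crude, illustrative signatures (assumptions, not real WAF rules).
SIGNATURES = {
    "sql_injection": re.compile(r"\bunion\b.+\bselect\b|\bor\b\s+1\s*=\s*1", re.I),
    "xss": re.compile(r"<\s*script|javascript:", re.I),
    "path_traversal": re.compile(r"\.\./"),
}


def flag_requests(records, start, end):
    """Return (timestamp, path, labels) for in-window requests matching a signature.

    `records` is an iterable of (timezone-aware datetime, raw request path) pairs.
    """
    flagged = []
    for ts, path in records:
        if not (start <= ts <= end):
            continue  # outside the period when protections were bypassed
        decoded = unquote_plus(path)  # undo URL encoding before matching
        labels = sorted(name for name, rx in SIGNATURES.items() if rx.search(decoded))
        if labels:
            flagged.append((ts, path, labels))
    return flagged


if __name__ == "__main__":
    # Hypothetical window: substitute the times your own DNS changes took effect.
    start = datetime(2025, 11, 18, 11, 30, tzinfo=timezone.utc)
    end = datetime(2025, 11, 18, 19, 30, tzinfo=timezone.utc)
    sample = [
        (datetime(2025, 11, 18, 12, 0, tzinfo=timezone.utc), "/search?q=1%27+OR+1%3D1"),
        (datetime(2025, 11, 18, 12, 5, tzinfo=timezone.utc), "/index.html"),
    ]
    for ts, path, labels in flag_requests(sample, start, end):
        print(ts.isoformat(), path, labels)
```

Anything this flags is only a starting point for a human to separate real attacks from background noise, which is the work Turner says his client was still doing.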

The implications for cybercriminals were not lost on Turner. He posited that threat actors likely noticed when specific online merchants, whom they habitually targeted, temporarily disconnected from Cloudflare’s services. "Let’s say you were an attacker, trying to grind your way into a target, but you felt that Cloudflare was in the way in the past," he explained. "Then you see through DNS changes that the target has eliminated Cloudflare from their web stack due to the outage. You’re now going to launch a whole bunch of new attacks because the protective layer is no longer in place."

Nicole Scott, Senior Product Marketing Manager at Replica Cyber, echoed this sentiment, characterizing the Cloudflare outage as an "unplanned, but highly valuable, live security exercise." She elaborated in a LinkedIn post: "That few-hour window was a live stress test of how your organization routes around its own control plane and shadow IT blossoms under the sunlamp of time pressure. Yes, look at the traffic that hit you while protections were weakened. But also look hard at the behavior inside your org."

Scott outlined a series of incisive questions that organizations should be asking themselves to glean security insights from the Cloudflare disruption:

- What protections were disabled or bypassed, and for how long? This includes WAF rules, bot mitigation, and geo-blocking capabilities.

- What emergency DNS or routing changes were implemented, and who authorized them? Understanding the decision-making process and approval workflows is crucial.

- Were there instances of employees resorting to personal devices, unsecured home Wi-Fi, or unsanctioned Software-as-a-Service (SaaS) providers to circumvent the outage? This reveals potential shadow IT and data exfiltration risks.

- Were any new services, secure tunnels, or vendor accounts hastily established "just for now" during the crisis? Identifying temporary solutions that may have become permanent is vital.

- Is there a clear plan to dismantle these emergency workarounds, or are they likely to remain in place indefinitely? This addresses the risk of long-term security drift.

- For future incidents, what is the deliberate fallback plan, as opposed to decentralized, ad-hoc improvisation? Establishing a pre-defined incident response strategy is paramount.
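One way to keep Scott's questions answerable after the fact is to record every emergency workaround with an owner, an approver, and a removal deadline. The sketch below is a minimal, hypothetical register: the `EmergencyChange` fields and the `needs_attention` helper are invented for illustration and are not taken from any particular incident-management tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class EmergencyChange:
    description: str            # e.g. "DNS pointed directly at origin"
    owner: str                  # who made the change
    approved_by: Optional[str]  # None means nobody signed off
    created: datetime
    remove_by: datetime         # deadline for rolling the workaround back
    removed: bool = False


def needs_attention(changes: List[EmergencyChange], now: datetime) -> List[EmergencyChange]:
    """Workarounds still in place that lack an approver or are past their deadline."""
    return [
        c for c in changes
        if not c.removed and (c.approved_by is None or now > c.remove_by)
    ]


if __name__ == "__main__":
    t0 = datetime(2025, 11, 18, 12, 0, tzinfo=timezone.utc)  # illustrative timestamp
    register = [
        EmergencyChange("WAF bypass via direct-to-origin DNS", "alice", "bob",
                        t0, t0 + timedelta(hours=8)),
        EmergencyChange("Temporary tunnel for support staff", "carol", None,
                        t0, t0 + timedelta(days=7)),
    ]
    for change in needs_attention(register, t0 + timedelta(days=1)):
        print(change.description)
```

Reviewing such a register a day or a week after the incident surfaces the "just for now" changes that were never approved or never rolled back.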

In a postmortem analysis released Tuesday evening, Cloudflare said the disruption was not caused, directly or indirectly, by a cyberattack or malicious activity. Cloudflare CEO Matthew Prince explained that the issue was triggered by a change in permissions within one of the company’s database systems. That change caused the database to output numerous duplicate entries into a "feature file" used by Cloudflare’s Bot Management system. As a result, the feature file doubled in size, and the oversized file was then propagated to all the machines that make up Cloudflare’s network, leading to the widespread degradation of services.
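The failure mode described here, a generated file that picks up duplicate entries and is then pushed everywhere, is the kind of thing a pre-distribution sanity check can catch. The sketch below is a generic illustration of that idea and not a description of Cloudflare's systems: the `check_feature_rows` helper, the `FeatureFileError` exception, and the `max_rows` limit are all assumptions made for the example.

```python
from collections import Counter


class FeatureFileError(ValueError):
    """Raised when a generated feature file fails pre-distribution checks."""


def check_feature_rows(rows, max_rows):
    """Validate a list of feature names before the file is distributed.

    Rejects the file if any entry appears more than once or if the total
    number of entries exceeds `max_rows` (a hypothetical, caller-chosen limit).
    """
    counts = Counter(rows)
    duplicates = sorted(name for name, n in counts.items() if n > 1)
    if duplicates:
        raise FeatureFileError(f"duplicate entries, e.g. {duplicates[:5]}")
    if len(rows) > max_rows:
        raise FeatureFileError(f"{len(rows)} rows exceeds limit of {max_rows}")
    return list(rows)


if __name__ == "__main__":
    good = ["feature_a", "feature_b", "feature_c"]
    bad = good + good  # every entry duplicated, so the file doubles in size
    print(check_feature_rows(good, max_rows=5))
    try:
        check_feature_rows(bad, max_rows=5)
    except FeatureFileError as exc:
        print("rejected:", exc)  # the caller would keep serving the last good file
```

The design choice worth noting is that a rejected file results in a raised error at generation time, so the previous known-good file stays in service instead of a bad one reaching every machine.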

Cloudflare estimates that approximately 20% of websites use its services. With much of the rest of the web concentrated on a handful of other major cloud providers such as AWS and Azure, even a brief outage at one of these platforms can expose a single point of failure for a vast number of organizations.

Martin Greenfield, CEO of IT consultancy Quod Orbis, views Tuesday’s outage as a stark reminder for many organizations that they may be overly concentrated in a single vendor’s ecosystem. He offered several practical and long-overdue recommendations: "Split your estate. Spread WAF and DDoS protection across multiple zones. Use multi-vendor DNS. Segment applications so a single provider outage doesn’t cascade. And continuously monitor controls to detect single-vendor dependency."
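Greenfield's advice to use multi-vendor DNS and to monitor for single-vendor dependency can be checked mechanically once a domain's nameserver (NS) hostnames are known. The sketch below classifies NS hostnames by suffix and reports whether they all belong to one provider. The helper names and the second provider's suffix (`.dns.example.net`) are hypothetical; the `.ns.cloudflare.com` suffix reflects how Cloudflare-assigned nameservers are named. Fetching the NS records is left out so the example stays self-contained.

```python
def providers_for(ns_hosts, suffix_map):
    """Map each nameserver hostname to a provider label using suffix matching.

    Hostnames that match no known suffix are reported as 'unknown:<host>'
    so that they are never silently counted as a known vendor.
    """
    providers = set()
    for host in ns_hosts:
        name = host.rstrip(".").lower()  # normalise trailing dot and case
        for suffix, provider in suffix_map.items():
            if name.endswith(suffix):
                providers.add(provider)
                break
        else:
            providers.add(f"unknown:{name}")
    return providers


def is_single_vendor(ns_hosts, suffix_map):
    """True when every nameserver belongs to the same provider."""
    return len(providers_for(ns_hosts, suffix_map)) <= 1


if __name__ == "__main__":
    suffix_map = {
        ".ns.cloudflare.com": "cloudflare",
        ".dns.example.net": "second-provider",  # hypothetical second vendor
    }
    only_cf = ["ada.ns.cloudflare.com.", "bob.ns.cloudflare.com."]
    mixed = ["ada.ns.cloudflare.com.", "ns1.dns.example.net."]
    print(is_single_vendor(only_cf, suffix_map))
    print(is_single_vendor(mixed, suffix_map))
```

Run periodically against an inventory of domains, a check like this turns "continuously monitor controls" into a concrete alert when a zone drifts back to a single DNS provider.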

The Cloudflare outage, while disruptive, has prompted an overdue re-evaluation of cybersecurity strategies. It underscored that robust external defenses are not enough on their own: internal preparedness, resilience, and diversification of critical infrastructure matter just as much when a provider fails unexpectedly. Organizations that analyze the fallout and apply the lessons learned stand to come away with a more robust and adaptable security posture.