Navigating this bewildering AI ecosystem is made harder by the internal schisms and public feuds erupting within the very companies leading its development. Instead of offering a unified front or clear guidance, the AI industry appears to be devolving into a chaotic free-for-all, reminiscent of the frantic final act of a zombie movie, where alliances are fragile and betrayal is rampant. This conflict is not confined to hushed whispers; it has spilled into the public arena, with prominent figures like Yann LeCun, Meta’s former chief AI scientist, openly sharing contentious opinions and internal insights, effectively "spilling the tea" on the industry’s inner workings. The most dramatic manifestation of this corporate warfare, however, is the impending legal showdown between Elon Musk and OpenAI. This high-profile lawsuit, born from a bitter fallout between former collaborators, promises a very public airing of grievances and technological disputes, and ample "popcorn" for observers.

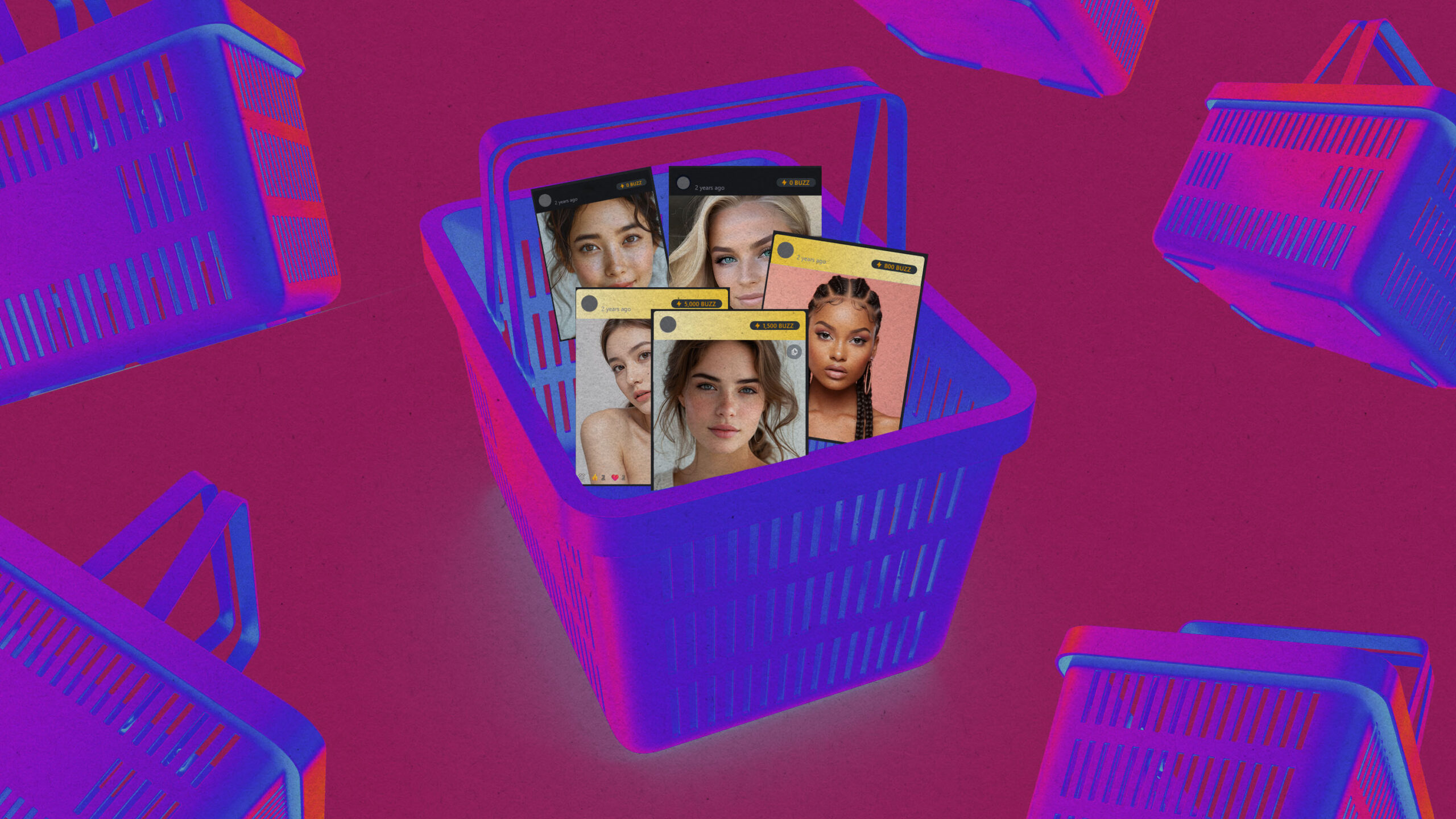

The divergence in AI capabilities, from the creation of adult content to the execution of highly specialized professional tasks, underscores the profound ethical and societal challenges we face. Grok’s ability to generate pornography, while alarming to many, shows how AI that operates without oversight can push the boundaries of societal norms, and it raises serious questions about content moderation, exploitation, and the very definition of creative expression. Left unchecked, this capability could lead to the proliferation of harmful and inappropriate material, which is why robust regulatory frameworks and ethical guidelines are needed. On the other hand, Claude Code’s proficiency in tasks ranging from building complex websites to analyzing medical scans like MRIs showcases AI’s immense potential to augment human capabilities, drive innovation, and solve some of humanity’s most pressing problems. This dual nature, the capacity for both the trivial and the transformative, is at the heart of the current AI panic.

The concern among Gen Z is particularly acute because this demographic is entering the workforce just as AI is poised to automate a significant portion of existing jobs. Skills and training once considered essential may rapidly become obsolete, necessitating a fundamental rethinking of education and career development. The research pointing to a "seismic impact" on the labor market this year is not an exaggeration; it is a stark warning. This impact could manifest in various ways: increased unemployment in sectors highly susceptible to automation, a widening skills gap between those who can leverage AI and those who cannot, and a potential restructuring of the employer-employee relationship. The question is not whether AI will change the job market, but how profoundly and how quickly.

The internal conflicts within the AI industry only serve to exacerbate public confusion and mistrust. When the pioneers and leaders of AI are engaged in public spats, it creates an environment of uncertainty and makes it difficult for the public to gain a clear understanding of the technology’s risks and benefits. Yann LeCun’s commentary, for instance, can be interpreted as a critique of the current trajectory of AI development, perhaps hinting at a focus on flashy, attention-grabbing capabilities over responsible and ethical advancement. The Musk vs. OpenAI lawsuit, on the other hand, speaks to the intense competition and potential for intellectual property disputes that characterize the fast-paced AI race. This is not merely a matter of corporate rivalry; it reflects fundamental disagreements about the future of AI, its commercialization, and its ultimate purpose.

The "zombie movie" analogy is apt because the AI industry, in its current state, seems to be driven by a relentless pursuit of progress, sometimes at the expense of careful consideration of the consequences. The rapid release of new models and features, often with limited public understanding of their underlying mechanisms or potential societal impacts, creates a sense of unease. The "messy exes" description of Musk and OpenAI highlights the deeply personal and often acrimonious nature of these disputes, suggesting that they are not just about business but also about ego, vision, and perhaps even a sense of betrayal. The impending trial will likely involve revelations about the early days of OpenAI, its mission, and the intentions of its key figures, potentially shedding light on the very foundations of modern AI development.

The current situation demands a more nuanced and informed public discourse. Instead of succumbing to either unbridled optimism or paralyzing fear, we need to engage in a critical examination of AI’s capabilities and its potential consequences. This requires a concerted effort from researchers, policymakers, industry leaders, and the public to foster transparency, establish ethical guidelines, and invest in education and retraining programs. The very fact that AI can simultaneously be a tool for generating explicit content and a sophisticated medical diagnostic aid underscores the urgent need for a balanced approach. We must harness AI’s transformative power for good while diligently mitigating its potential harms.

The AI Hype Index, therefore, is not a static measure but a dynamic reflection of our collective anxieties and aspirations surrounding this technology. It shows that AI is no longer a futuristic concept but a present reality, shaping our lives in profound and often unexpected ways. The stories of Grok’s controversial outputs and Claude Code’s impressive capabilities, juxtaposed with the internal turmoil of the AI industry, paint a vivid picture of a field at a critical juncture. As we move forward, we need to approach AI with informed curiosity, critical skepticism, and a steadfast commitment to ensuring that its development and deployment serve the best interests of humanity. The "popcorn" may be plentiful, but the stakes are far too high for us to remain passive observers. We must actively participate in shaping the future of AI so that its power is wielded responsibly and ethically. The current state of affairs, with its contradictory narratives and public feuds, is a clear signal that the time for thoughtful deliberation and decisive action is now.