OpenAI Embarks on Ambitious Scientific Endeavor, Chatbots Grapple with Age Verification

In a significant strategic pivot, OpenAI, the artificial intelligence company best known for ChatGPT and its transformative impact since the chatbot’s 2022 debut, is setting its sights on a new frontier: scientific advancement. The company has formally announced "OpenAI for Science," a dedicated team that will explore and enhance the ability of its large language models (LLMs) to support and accelerate scientific research. Kevin Weil, the OpenAI vice president leading the effort, explained in an exclusive interview why the company is making this push, how it fits OpenAI’s overarching mission, and what the team hopes to achieve. The stakes are high. If LLMs can be tailored to the demands of scientific inquiry, they could help researchers generate hypotheses faster, streamline complex data analysis, and open new lines of research in fields ranging from drug discovery and materials science to climate modeling and fundamental physics, while potentially broadening access to cutting-edge research tools. The move also reflects a growing recognition across the AI industry of the societal impact these technologies could have when applied to humanity’s most pressing challenges.

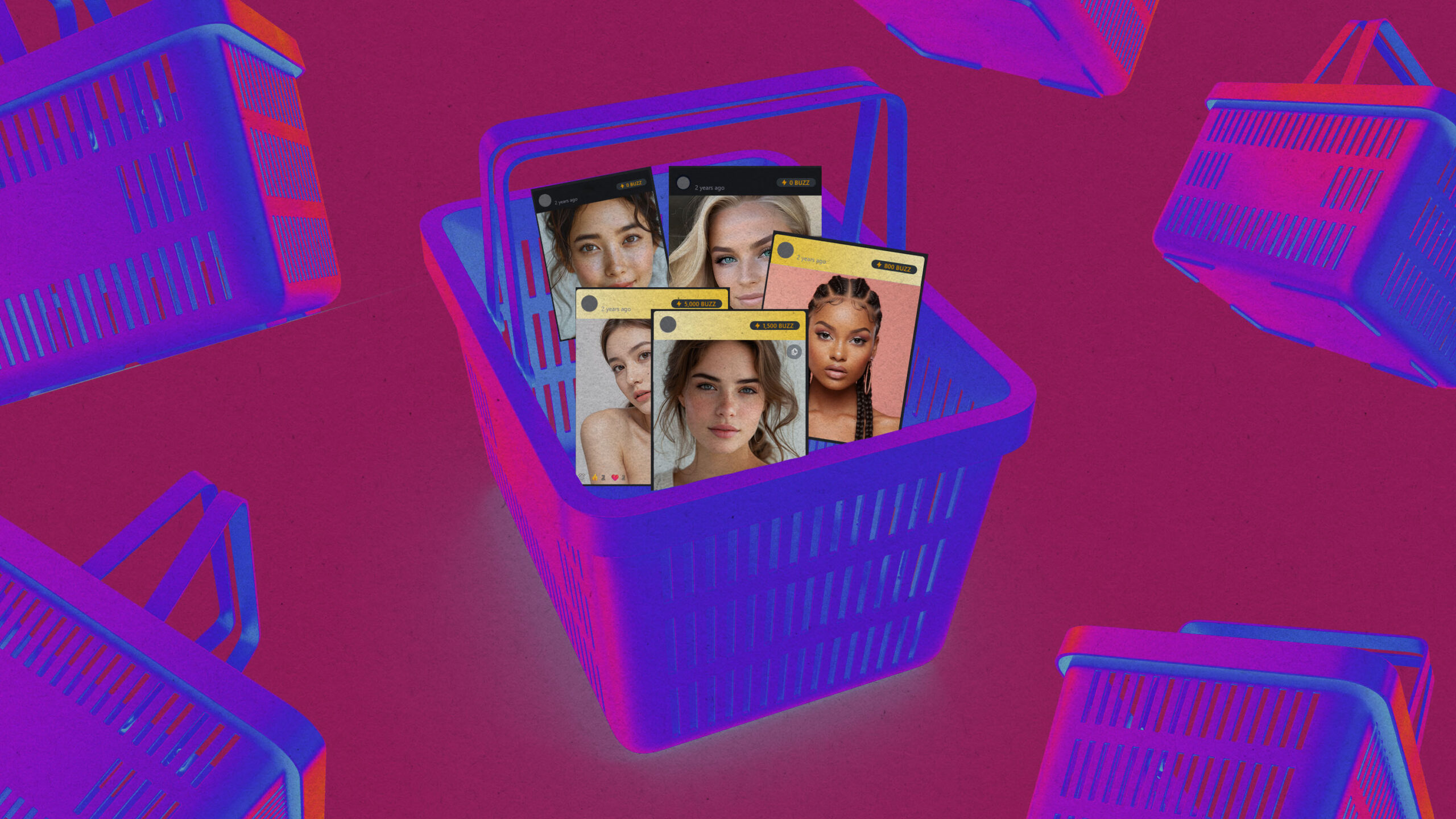

Meanwhile, AI chatbots are facing increased scrutiny over child safety, prompting a rapid evolution in how companies verify users’ ages. Growing concern about the dangers children may encounter when interacting with chatbots has pushed technology companies to re-evaluate their safeguards. Until recently, companies typically relied on self-reported birthdays, which are easy to falsify, to comply with child privacy laws, and they were not required to moderate content based on a user’s age. That approach is quickly proving insufficient, and recent developments in the United States show how fast regulatory expectations and industry practices are shifting. Age verification has become a new battleground for child safety advocates and parents as the ethics of AI interactions with minors move to the forefront. The worry is that chatbots without proper safeguards could expose children to inappropriate content, predatory behavior, or harmful misinformation. The technical challenge is to build age verification systems that are robust yet user-friendly, reliably distinguishing adults from minors so that platforms can apply age-appropriate moderation. Regulatory pressure and public concern are likely to drive significant innovation both in AI safety features and in how these technologies are responsibly deployed.

The Dawn of Commercial Space Stations and the Shifting Landscape of Tech and Policy

A new era in space exploration is beginning as government-led orbital outposts give way to privately operated commercial space stations. For more than two decades, the International Space Station (ISS) has been humanity’s primary foothold in orbit, hosting astronauts and enabling invaluable scientific research. With the ISS scheduled to be decommissioned in 2031, NASA is fostering the development of private successors and has already allocated substantial funding to several companies. These new platforms are expected not only to continue scientific research but also to serve as hubs for in-space manufacturing, tourism, and possibly resource utilization. The shift toward commercialization is driven in part by the escalating costs and slow innovation cycles of government-funded programs; by turning to private operators, the aim is to foster competition, drive down the cost of reaching and operating in orbit, and build a more dynamic and accessible space economy. Commercial space stations are considered a breakthrough technology, one that could redefine humanity’s relationship with space and expand the scope of human activity beyond Earth.

In a parallel development, tech workers are intensifying calls for their companies’ leaders to publicly condemn the actions of U.S. Immigration and Customs Enforcement (ICE). The campaign reflects a growing expectation among employees that their companies take active stances on social and political issues, particularly those involving human rights and civil liberties, and it feeds a broader debate about corporate responsibility and the role of large technology firms in public discourse. Many employees argue that their employers’ silence on ICE’s enforcement practices amounts to tacit endorsement of policies they find ethically objectionable, and that corporate leaders are failing to use a significant platform and influence. Hundreds of employees have signed open letters and pushed in internal discussions for their CEOs to speak out. This growing willingness to voice dissent, and the desire for companies to align with workers’ personal values, suggests that employee activism is becoming a force that can shape corporate decision-making and companies’ public positions on contentious issues.

Separately, the U.S. Department of Transportation’s plan to use artificial intelligence (AI) to draft new safety regulations has drawn significant criticism. Critics argue that delegating such a critical task to AI, in an area with direct implications for public safety, is inherently risky. Their core concern is that an AI system could miss errors or nuances that a human regulator would catch, and that such mistakes in transportation rules could have fatal consequences for the public. Current AI systems can reproduce biases present in their training data and lack true contextual understanding, which makes their use in drafting safety rules a subject of intense debate. Proponents point to gains in efficiency and speed, but the stakes of transportation safety demand a level of human oversight and judgment that AI may not yet be able to replicate. The debate underscores the need for robust safeguards and a cautious, human-centered approach whenever AI is deployed in high-stakes decisions that directly affect human lives.

Tensions at the intersection of immigration policy and technology are also evident in the FBI’s investigation into Minnesota Signal chats that allegedly tracked federal agents. The case highlights the complex interplay between law enforcement, privacy, and digital communication tools. While the FBI’s investigation aims to address potential threats to federal agents, civil liberties advocates contend that the information being shared was legally obtained and is protected as free speech. The case is part of an ongoing debate over how to balance national security and individual freedoms in digital communication. Activist groups’ use of encrypted messaging platforms such as Signal to organize and share information poses a challenge for law enforcement agencies seeking to monitor activities they consider potentially harmful. The legal questions raised by such investigations, including how free speech applies online, are likely to remain contentious and could set precedents for future policy on online communication and activism.

In a peculiar turn of events, TikTok users have reported that they cannot send the word "Epstein" in direct messages, and the company says it does not know the cause. Together with reports that users have had difficulty uploading anti-ICE videos, the issue has raised questions about TikTok’s content moderation practices, especially because the problems surfaced during the platform’s first weekend under new ownership. The timing has fueled speculation about whether the problems stem from technical glitches or deliberate content filtering. The situation has also drawn official attention: California Governor Gavin Newsom has said he intends to investigate whether TikTok is censoring content critical of President Trump. These developments point to heightened scrutiny of platform moderation, political content, and social media companies’ influence on public discourse, particularly in politically charged environments, and they highlight the ongoing challenge of ensuring transparency and fairness in how online content is governed.

The safety of AI-generated content for minors is a growing concern, and a recent report has labeled xAI’s Grok chatbot "not safe for children or teens." The report’s findings suggest that Grok may expose users to inappropriate or harmful content, raising questions about its suitability for younger users. Regulatory concern extends across jurisdictions: the European Union is investigating whether Grok disseminates illegal content. The potential for chatbots to generate or spread harmful or illegal material calls for stringent oversight and for AI systems that prioritize user safety and adhere to legal and ethical standards. Together, the report and the EU investigation highlight the need for greater transparency and accountability from AI developers, especially when their products interact with vulnerable populations.

Measles is resurging in the United States, which is now on the verge of losing its measles-free status after a year of extensive outbreaks. The resurgence underscores the fragility of herd immunity and the consequences of declining vaccination rates, and it is a stark reminder of the importance of widespread vaccination campaigns and strong public health infrastructure. Wastewater tracking is also being explored as a way to monitor the spread of infectious diseases like measles, which could enable earlier detection and intervention. Sustained vigilance is essential at a time when misinformation and vaccine hesitancy can undermine public health efforts, and the ability to track and anticipate outbreaks through methods such as wastewater analysis could prove invaluable in future public health crises.

Georgia has become the latest U.S. state to consider banning data centers, joining Maryland and Oklahoma. The trend reflects growing unease in some communities about the environmental impact and resource demands of data centers, particularly as AI and other digital technologies drive up energy consumption. Although data centers are essential to the digital economy, their substantial energy and water requirements and their land use are prompting a re-evaluation of where and how they expand. The tension between the industry’s perceived necessity and growing public opposition calls for more sustainable development practices, efficient resource management, and careful attention to environmental footprint. It also raises questions about the future of digital infrastructure and whether localized opposition could limit the growth of technologies that depend on large-scale data processing.

Saudi Arabia’s ambitious futuristic city project, "The Line," faces significant uncertainty, with projections suggesting it might be repurposed as a data center hub instead of housing the nine million residents originally envisioned. The shift underscores the immense challenges and evolving priorities of large-scale, visionary urban development. The Line was conceived as a groundbreaking model for future living, and its altered trajectory suggests either a reassessment of its feasibility or a pivot toward more immediate, technology-driven uses. A data center hub would be less aspirational than a megacity, but it would reflect the escalating demand for computing infrastructure driven by AI and other digital advances. The change also raises questions about the long-term viability of megaprojects and how their backers adapt when initial visions run into unforeseen obstacles or shifting economic conditions. MIT Technology Review exclusively covered The Line’s original vision, with its radical design and ambitious goals, in 2022, which makes its potential transformation a telling indicator of the pragmatic realities that can reshape even the most forward-thinking urban planning.

Recent research suggests that some of Earth’s lighter elements may be hidden deep within its core, offering a new perspective on the planet’s internal composition and challenging previous understanding of planetary formation and of how elements are distributed within Earth’s structure. The idea that lighter elements could be sequestered in the planet’s deepest layers opens new avenues of inquiry for geophysicists and chemists. Knowing the precise composition of Earth’s core is crucial for understanding the planet’s magnetic field, seismic activity, and geological evolution, so the finding could reshape models of planetary interiors and of the processes that govern Earth’s dynamic systems. The core remains a frontier of scientific exploration, and each new discovery has the potential to change fundamental knowledge of our own planet.

AI-generated influencers are becoming increasingly surreal, with virtual personas featuring unconventional attributes such as conjoined twins and triple-breasted women. The trend shows how rapidly, and at times how unsettlingly, AI can generate hyper-realistic yet fantastical imagery. It raises questions about the boundaries of artificial creativity and about how such virtual personas might influence cultural perceptions and aesthetics. It also intersects with broader concerns about manipulated visual content and the blurring line between reality and artificiality in the digital age. As these figures grow more sophisticated, audiences need to engage critically with digital media and stay aware that some visual narratives are artificial in origin. The capacity of AI-generated content to challenge traditional notions of beauty, identity, and representation is prompting ongoing discussion of its societal impact and ethical implications.

Quote of the Day and a Developer’s Unwavering Vision for Grid Connectivity

“Humanity is about to be handed almost unimaginable power, and it is deeply unclear whether our social, political, and technological systems possess the maturity to wield it.” This stark warning comes from Anthropic CEO Dario Amodei, who, in a recent essay, expresses grave concern about the imminent dangers of AI superintelligence. His statement underscores the profound ethical and societal challenges posed by rapid AI progress: the possibility that AI could surpass human cognitive abilities raises critical questions about control, safety, and responsible stewardship of such transformative power. The essay, reported by Axios, reflects a growing sentiment among AI leaders that careful deliberation and proactive measures are needed for humanity to navigate the era of advanced AI safely. As Amodei suggests, that requires a hard look at our societal structures and our collective capacity to manage technologies that could fundamentally alter the course of human civilization.

In a testament to persistence, energy entrepreneur Michael Skelly is still fighting to connect U.S. power grids despite years of setbacks. For more than fifteen years, Skelly has worked to develop long-haul transmission lines that would carry wind power across vast regions of the United States, driven by his conviction that linking the nation’s grids is necessary to speed the transition from fossil fuels to renewable energy. Despite past business failures and several halted projects, he remains convinced that his vision was simply ahead of its time. That argument is gaining traction as markets and policymakers increasingly recognize that robust, interconnected grids are critical for meeting climate goals and ensuring energy security. Skelly’s story shows the tenacity required to drive large-scale infrastructure change and how long and arduous the path to transformative technological and societal shifts can be, and it suggests that persistence in the face of adversity can ultimately lead to progress.