The question of how to protect children who interact with artificial intelligence has become urgent, forcing Big Tech and policymakers to confront the potential dangers of AI chatbots and the content they might expose young users to. Companies have long asked for users' birthdays to comply with child privacy laws, but they often did not use that information to moderate content, leaving a critical gap in protection. Developments over the past week show how quickly things are shifting in the United States: AI child safety is becoming a political and societal battleground, and it is even sparking disagreement among parents and child safety advocates.

Republicans are at the forefront of this landscape, having championed laws in several states that require websites with adult content to verify users' ages. Critics argue that these measures can serve as a pretext for censoring a much broader range of content deemed "harmful to minors," potentially including vital sex education resources. Other states, such as California, are targeting AI companies more directly, with legislation designed to protect children who talk to chatbots by requiring companies to identify underage users. Meanwhile, President Trump is pushing for a single national approach to AI regulation to prevent a patchwork of state-specific rules. Support for the various federal bills in Congress remains in flux, reflecting how complex and sensitive the issue is.

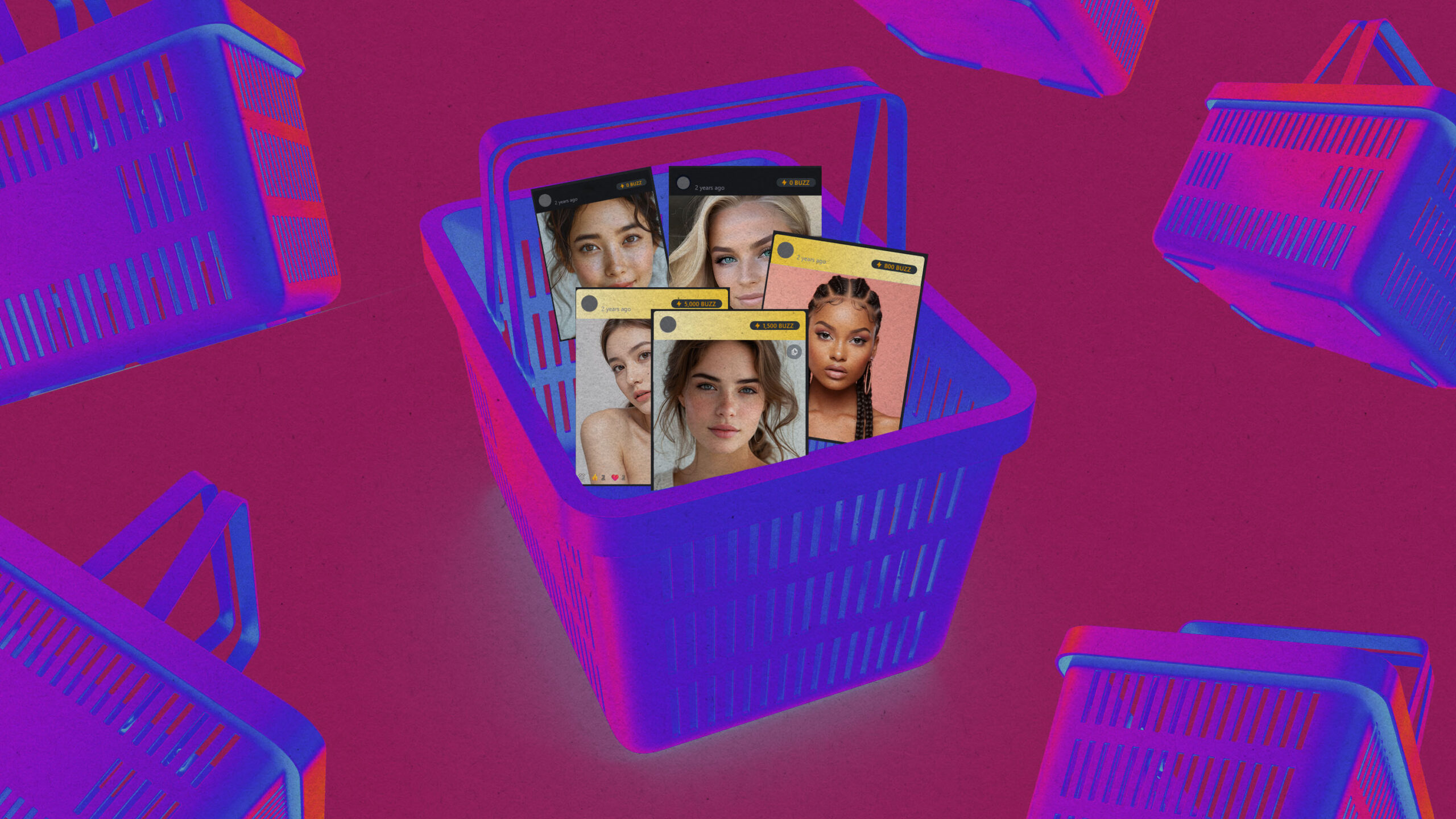

The central debate is quickly moving beyond whether age verification is necessary to a far more contentious question: who will be responsible for carrying it out. That responsibility has become a "hot potato" that no company wants to hold.

OpenAI announced last Tuesday that it plans to implement automatic age prediction. The approach uses a predictive model that weighs various factors, including the time of day, to estimate whether a user is under 18. For users identified as minors, ChatGPT will apply content filters intended to "reduce exposure" to potentially harmful material such as graphic violence or sexual role-play. YouTube took a similar step last year, introducing age estimation technology to identify U.S. teens and apply enhanced protections.

This might look like a win for people who want age verification but also value privacy, but there is a significant catch. Age prediction is inherently imperfect and can misclassify users, labeling a child as an adult or vice versa. Users wrongly identified as under 18 can verify their identity by submitting a selfie or a government ID to a third-party company called Persona.

Selfie verification, however, has problems of its own. Studies have shown that such systems can be less accurate for people with darker skin tones and for people with certain disabilities. Sameer Hinduja, co-director of the Cyberbullying Research Center, also points to a critical vulnerability: Persona must store millions of government IDs and vast amounts of biometric data. "When those get breached, we've exposed massive populations all at once," he warns.

As an alternative, Hinduja favors device-level verification. Under this model, parents would enter a child's age when first setting up the child's phone. The device would store that information securely and share it with apps and websites only when needed, a more privacy-preserving approach.

Apple CEO Tim Cook has recently lobbied for a similar device-centric approach. He has reportedly urged U.S. lawmakers to consider it and pushed back against proposals that would require app stores to verify ages, a responsibility that would expose Apple to significant liability.

More clues about where these regulatory efforts are headed should emerge on Wednesday, when the Federal Trade Commission (FTC), the agency tasked with enforcing these potential new laws, hosts an all-day workshop on age verification. Speakers will include Nick Rossi, Apple's head of government affairs; senior child safety executives from Google and Meta; and representatives of a company that specializes in marketing to children.

The FTC's role in AI regulation has become increasingly politicized under President Trump. The U.S. Supreme Court is currently reviewing a lower-court ruling that struck down the dismissal of the agency's sole Democratic commissioner. In July, there were signs that the agency was softening its stance toward AI companies. In December, the FTC went further, overturning a Biden-era ruling against an AI company that had enabled the spread of fake product reviews on the grounds that the ruling conflicted with President Trump's AI Action Plan.

Wednesday's workshop is expected to highlight the partisan divisions over how the FTC should approach age verification. Republican-led states generally favor laws requiring adult content websites to verify ages, despite critics' concerns that such mandates could be used to restrict a wider range of content. Bethany Soye, a Republican state representative leading an effort to pass such a bill in South Dakota, is scheduled to speak at the workshop. The American Civil Liberties Union (ACLU), by contrast, generally opposes laws requiring identification to access websites and instead advocates expanding existing parental control tools.

As these legislative and regulatory debates unfold, the rapid advancement of AI has already reshaped the child safety landscape. The rise in AI-generated child sexual abuse material, alarming reports of suicide and self-harm following chatbot interactions, and unsettling evidence of children forming deep attachments to AI companions all show how pressing the issue is. Competing interests around privacy, political ideology, freedom of expression, and surveillance will undoubtedly complicate any effort to find effective solutions. Readers are invited to share their perspectives on this critical and rapidly evolving challenge.