However, as the global race to build the first robust, commercially viable large-scale quantum computer intensifies, a fundamental challenge has emerged: if these machines can solve problems that are intractable for classical computers, how can we be certain that their answers are correct? This question, once a theoretical curiosity, is now a critical bottleneck in the advancement of quantum technology. Because quantum computation draws on superposition and entanglement to work with state spaces that grow exponentially with system size, checking its output against a classical simulation becomes prohibitively slow for complex problems, with run times that can stretch into millennia.

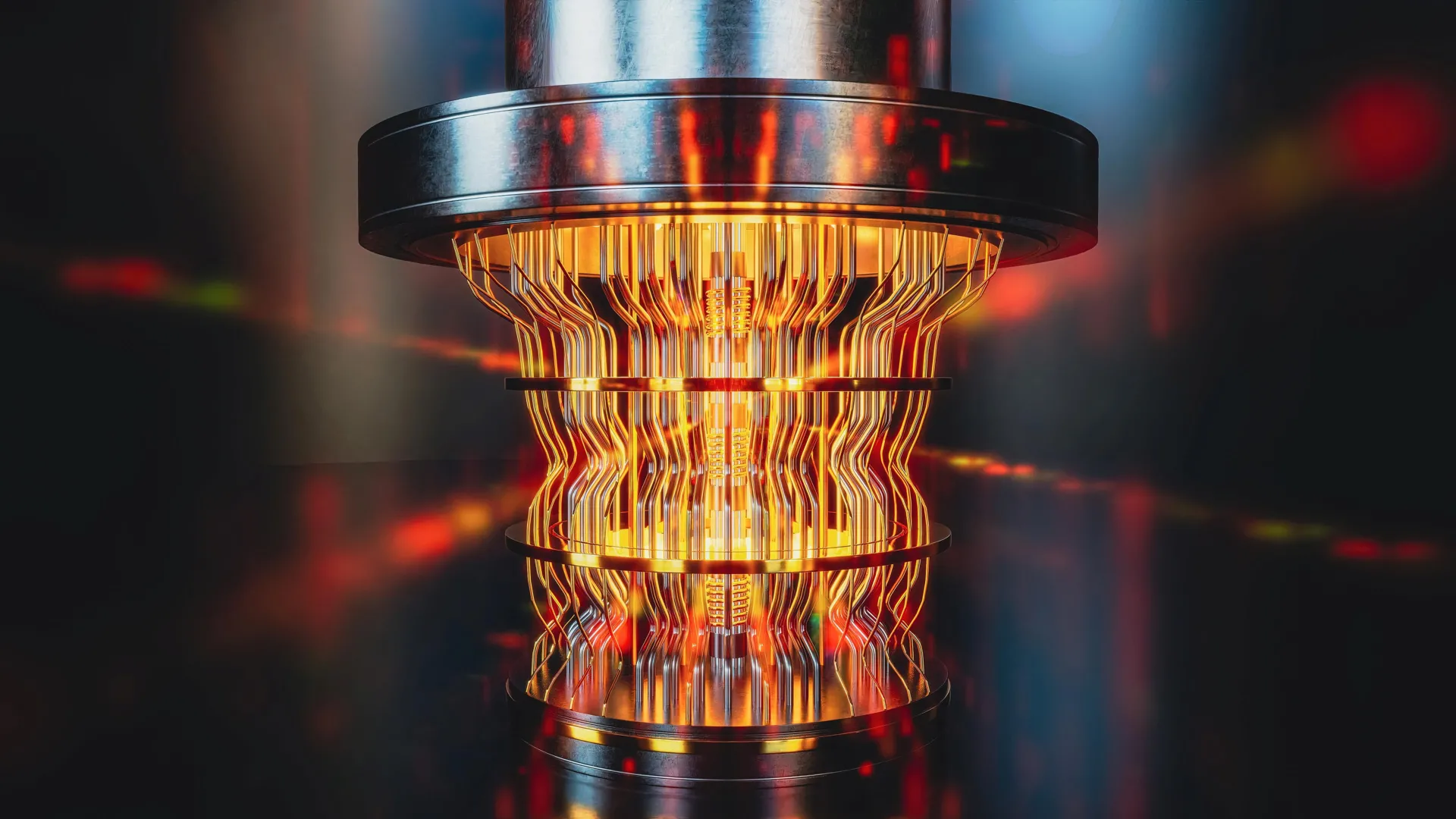

A recent study from Swinburne University of Technology takes a significant step towards resolving this dilemma. The research team, led by Postdoctoral Research Fellow Alexander Dellios from Swinburne’s Centre for Quantum Science and Technology Theory, has developed techniques for checking the correctness of results produced by a specific class of quantum devices known as Gaussian Boson Samplers (GBS). These GBS machines manipulate photons, the fundamental particles of light, to generate intricate probability distributions. The calculations are so complex that even the most advanced classical supercomputers would need thousands of years to replicate them, which makes direct verification impractical.

The core of the problem, as Dellios describes it, is the scale of the gap between quantum and classical run times. "There exists a range of problems that even the world’s fastest supercomputer cannot solve, unless one is willing to wait millions, or even billions, of years for an answer," he explains. That gap creates a paradox for validation: confirming a quantum computer’s answer by recomputing it classically would take just as impossibly long as solving the problem classically in the first place. "Therefore, in order to validate quantum computers, methods are needed to compare theory and result without waiting years for a supercomputer to perform the same task." This need has driven the development of indirect validation strategies.

The Swinburne team’s contribution is a set of new, efficient validation tools that let researchers assess the accuracy of a GBS experiment’s output in a short time, replacing a check that would otherwise carry an insurmountable computational cost. "In just a few minutes on a laptop, the methods developed allow us to determine whether a GBS experiment is outputting the correct answer and what errors, if any, are present," Dellios states. The development is more than an academic exercise; it is a step towards building trust in the results of quantum computers.

To demonstrate their approach, the researchers applied it to a recently published GBS experiment that would take an estimated 9,000 years to replicate on even the most powerful current supercomputers. The results were illuminating and, in some respects, surprising. The team’s validation method revealed that the probability distribution generated by the GBS experiment did not match the intended theoretical target. The analysis also uncovered additional noise in the experimental setup that had previously gone unnoticed and unquantified. This hidden noise could have been influencing the device’s output and introducing inaccuracies without detection.
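The article does not describe the team's technique in detail, so the following is only a generic illustration of what "comparing theory and result" can look like, not the Swinburne method. It is a minimal sketch under loud assumptions: the "device" is a toy model in which each detector clicks independently with a fixed probability (a real GBS output is far more structured), and the comparison is a plain Pearson chi-square test on the distribution of total clicks per shot. All function names and parameter values are hypothetical.

```python
import random
from math import comb


def binomial_pmf(n, p):
    """Theoretical distribution of total clicks for the toy model:
    n detectors, each clicking independently with probability p."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def sample_click_totals(n_detectors, click_prob, shots, rng):
    """Simulate `shots` runs of the toy device and histogram the
    total number of clicks observed in each run."""
    counts = [0] * (n_detectors + 1)
    for _ in range(shots):
        clicks = sum(rng.random() < click_prob for _ in range(n_detectors))
        counts[clicks] += 1
    return counts


def chi_square_statistic(observed_counts, expected_probs, min_expected=5.0):
    """Pearson chi-square statistic between observed counts and a target
    distribution. Bins whose expected count is below `min_expected` are
    skipped, since the chi-square approximation is unreliable there.
    Returns (statistic, number_of_bins_used)."""
    total = sum(observed_counts)
    stat = 0.0
    used_bins = 0
    for obs, p in zip(observed_counts, expected_probs):
        expected = total * p
        if expected < min_expected:
            continue
        stat += (obs - expected) ** 2 / expected
        used_bins += 1
    return stat, used_bins


# Hypothetical numbers chosen only for illustration.
N_DETECTORS = 20
TARGET_PROB = 0.30   # what the theory says the device should do
NOISY_PROB = 0.33    # a device with a small, unmodelled extra click rate
SHOTS = 20_000

rng = random.Random(0)
target = binomial_pmf(N_DETECTORS, TARGET_PROB)

ideal_counts = sample_click_totals(N_DETECTORS, TARGET_PROB, SHOTS, rng)
noisy_counts = sample_click_totals(N_DETECTORS, NOISY_PROB, SHOTS, rng)

ideal_stat, ideal_bins = chi_square_statistic(ideal_counts, target)
noisy_stat, noisy_bins = chi_square_statistic(noisy_counts, target)

# A statistic close to the number of bins is consistent with the target;
# a statistic far above it signals a mismatch between theory and data.
print(f"ideal device: chi2/bins = {ideal_stat / ideal_bins:.2f}")
print(f"noisy device: chi2/bins = {noisy_stat / noisy_bins:.2f}")
```

In this toy setting the check is cheap because it compares a low-dimensional summary (total clicks per shot) instead of the full output distribution. Whether a given summary is sensitive to the errors that matter in a real device is a separate question that this sketch does not address.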

The discovery of these discrepancies and previously uncharacterized noise opens up new avenues for scientific inquiry. The next critical phase of research, according to the study, involves delving deeper into the nature of these findings. A key question is whether the unexpected probability distribution observed in the experiment is itself computationally difficult to reproduce using classical means. If it is, it could represent a new type of quantum phenomenon or a subtle challenge to existing theoretical models. Alternatively, and perhaps more immediately relevant to the development of reliable quantum computers, the researchers need to determine if the observed errors are significant enough to cause the device to lose its crucial "quantumness" – the quantum mechanical properties that give it its computational power. Understanding the interplay between errors and quantum coherence is paramount for building fault-tolerant quantum systems.

The implications extend beyond the validation of a single experiment. Scalable methods for verifying quantum computations are a prerequisite for large-scale, error-free quantum computers suitable for commercial deployment, a long-term goal that Dellios is committed to pursuing. "Developing large-scale, error-free quantum computers is a herculean task that, if achieved, will revolutionize fields such as drug development, AI, cyber security, and allow us to deepen our understanding of the physical universe," he says.

Dellios also stresses the foundational role of validation in this effort. "A vital component of this task is scalable methods of validating quantum computers, which increase our understanding of what errors are affecting these systems and how to correct for them, ensuring they retain their ‘quantumness’," he concludes. By providing tools that can efficiently detect and quantify errors, the research builds confidence in current quantum experiments and offers insights for improving quantum hardware design and error correction protocols. The ability to scrutinize how quantum devices actually behave is fundamental to their progression from experimental curiosities to reliable tools, and the Swinburne work addresses a core obstacle on that path.